
A new study published in Evolutionary Psychological Science identifies five distinct strategies that women employ to detect or prevent deception from potential romantic partners. The findings indicate that introducing partners to family members and taking relationships slowly are the methods women say they are most likely to use to verify a man’s honesty. These behaviors appear to function as evolutionary countermeasures against the risks of sexual exploitation in mating contexts.

Humans face a fundamental adaptive challenge in the realm of mating known as exploitation. One individual might attempt to enhance their own reproductive success at the expense of another’s fitness. This dynamic often involves deception, where a person misrepresents their intentions or background to gain sexual access.

Evolutionary theory suggests that women have historically faced higher costs from such deception than men have. This disparity stems from biological realities regarding parental investment. Women are obligated to invest substantial metabolic resources into offspring through gestation and lactation.

Men, conversely, can theoretically achieve reproduction with a minimal investment of time and resources. This asymmetry means that a man could walk away after a sexual encounter with few immediate consequences. A woman in the same situation would be left with the burdens of pregnancy and child-rearing without partner support.

This discrepancy likely created strong selection pressures for women to develop specific defenses. Researchers view this interaction as a form of evolutionary arms race. As men developed deceptive tactics to secure short-term mating opportunities, women likely co-evolved detection strategies to protect themselves.

“The core concept draws from the evolutionary arms race between measures of exploitation and counter-exploitation, as previously examined in studies of rape avoidance mechanisms that mitigate the high costs of rape as an exploitative strategy. A milder form of intersexual conflict manifests in sexual deception, yet a key research gap persisted regarding women’s specific counter-strategies to this form of exploitation,” said study author Peyman Sayyad of the Shams Higher Education Institute.

The researchers sought to catalog women’s specific anti-deception tactics, to understand how these behaviors are structured, and to identify which personality traits influence their use. To do so, they conducted two separate investigations.

The first study utilized a qualitative approach to generate a broad list of potential behaviors. The research team recruited 147 female undergraduate students from a large public university in the Southeastern United States. The average age of these participants was approximately 19 years old.

Participants answered open-ended questions about what actions they or other women take to avoid being deceived in dating contexts. They were asked to describe specific things they might do, such as asking friends for verification. They also listed things they might avoid doing, such as rushing into intimacy.

The researchers and a graduate student independently analyzed these written responses. They worked to eliminate vague or redundant answers to create a consolidated list. This process resulted in the identification of 43 distinct anti-deception acts that women might perform.

The second study involved a new group of 249 female participants recruited from the same university setting. The sample was predominantly White, though it included participants from various ethnic backgrounds. Approximately 44 percent of the sample reported being in a relationship at the time of the study.

These participants reviewed the list of 43 behaviors identified in the first phase. They rated how likely they would be to perform each action on a scale ranging from “to no extent” to “to a very high extent.” This allowed the researchers to quantify which strategies are most prevalent.

The researchers also administered standard psychological questionnaires to assess individual personality differences. Participants completed the Mate Value Scale to rate their own self-perceived desirability as a partner.

They also completed the revised Sociosexual Orientation Inventory. This inventory measures an individual’s willingness to engage in uncommitted sexual activity. It assesses past sexual behavior, attitudes toward casual sex, and sexual desire. Higher scores on this measure indicate a more unrestricted sociosexuality, meaning a preference for short-term mating.

The researchers also measured attachment styles using the Experiences in Close Relationships Scale. This specifically looked at avoidant attachment, which involves discomfort with intimacy. Finally, the researchers assessed neuroticism using a short form of the Big Five Inventory.

Statistical analysis of the survey responses revealed that the anti-deception tactics clustered into five main categories. The researchers labeled the first and most frequently considered category as “Integration.” This domain involves introducing a potential partner to family members or meeting his family.

Integration serves as a robust vetting mechanism. Involving family allows a woman to verify a partner’s background and intentions through the scrutiny of kin. This finding aligns with historical patterns where families played a central role in mate selection.

The second most common domain was labeled “Reticence.” This strategy focuses on slowing down the pace of the relationship to prevent premature emotional attachment. Tactics in this category include avoiding rushing into commitment or delaying sexual intimacy until trust is firmly established.

By maintaining distance, a woman can observe a partner’s behavior over time. This reduces the risk of overlooking red flags due to the blinding effects of infatuation. It provides a longer window for deceptive signals to become apparent.

The third domain identified was “Social Media.” This involves researching a partner’s online presence or checking the profiles of his friends. Women might look for inconsistencies between what a man says and what his digital footprint reveals.

The fourth category was “Religion Matching.” This entails seeking partners with shared religious beliefs or ensuring a partner is a practicing believer. This strategy relies on the heuristic that religious individuals may adhere to stricter moral codes regarding honesty and fidelity.

The least common strategy was labeled “Distrust.” This category includes more active and confrontational tactics. For example, a woman might ask questions to which she already knows the answer to test a partner’s honesty.

“Women might employ diverse strategies to counter sexual deception in mating and dating contexts,” Sayyad told PsyPost. “These include familial oversight, religion, and modern cultural mechanisms like social media.”

The researchers also found associations between these strategies and individual personality traits. Women who were more open to short-term mating were less likely to use Integration or Religion Matching tactics. This suggests that women focused on casual relationships may prioritize these long-term vetting mechanisms less.

For women pursuing short-term mating, the goal is often immediate sexual access rather than long-term resource provisioning. As a result, the deep vetting provided by family integration or religious alignment may be viewed as an unnecessary obstacle.

Additionally, the researchers found a link between attachment style and behavior. Women with higher levels of avoidant attachment were more likely to use Reticence tactics. These individuals often feel uncomfortable with intimacy and may use distance as a protective mechanism.

This tendency to hold back serves a dual purpose for avoidantly attached women. It protects them from the emotional risks of intimacy while simultaneously guarding against deception. By not committing quickly, they minimize their vulnerability to exploitation.

Contrary to the researchers’ expectations, a woman’s self-perceived mate value did not predict which tactics she used. High mate value is often associated with being a target for deception. The authors hypothesized that these women would be more vigilant, but the data did not support this link.

Similarly, neuroticism did not show a significant connection to any specific anti-deception domain. Neuroticism is characterized by higher sensitivity to threat and negative emotion. The researchers expected this trait to correlate with increased vigilance, but the results were null.

There are some limitations to consider. The sample consisted entirely of undergraduate women. This demographic is relatively young and may have limited mating experience compared to older adult populations.

The specific context of the study also matters. The research focused on a modern Western environment where women have free choice in mating. This differs from ancestral environments or cultures where family members play a dominant role in arranging marriages.

The study also relied on self-reported intentions rather than observed behaviors. Participants indicated what they would do, which may not perfectly align with their actions in a real-world scenario. Future research is needed to determine the actual effectiveness of these tactics in detecting lies.

“This study investigates women’s counter-strategies to sexual deception within a free-choice mating context that minimizes parental involvement, diverging from ancestral conditions prevalent across much of human history,” Sayyad noted. “Moreover, such defenses may rely on domain-general adaptations to exploitation rather than deception-specific mechanisms, warranting more tests in future research. These caveats highlight opportunities for extensions.”

It is also possible that men have evolved counter-counter-strategies. If women use these specific tactics to detect deception, men may have developed ways to bypass these checks. This ongoing co-evolutionary dynamic suggests that the repertoire of deception and detection is likely complex.

The findings provide a structured framework for understanding how women navigate the risks of modern dating. They highlight that skepticism is not a singular trait but manifests through diverse behavioral strategies. These strategies appear to be deployed selectively based on a woman’s mating goals and attachment style.

“Assessing the role of parents in offspring intersexual conflicts offers a promising avenue for future research,” Sayyad added.

The study, “Women’s Anti-Deception Tactics in Mating: A Preliminary Investigation,” was authored by Peyman Sayyad, Mazyar Bagherian, Farid Pazhoohi, and Mitch Brown.

Recent research suggests that the consumption of caffeinated beverages is linked to a measurable increase in positive feelings, particularly during the morning hours. While caffeine intake was consistently associated with higher positive affect, its association with reduced negative emotions appears less consistent and does not depend on the time of day. These findings were detailed in a paper published in the journal Scientific Reports.

Caffeine is the most widely consumed psychoactive substance in the world. Estimates suggest that nearly 80 percent of the global population ingests it in some form. Common sources include coffee, tea, soda, and chocolate. Consumers often rely on these products to combat fatigue or improve their focus. Many also anecdotally report that a cup of coffee improves their general disposition.

Researchers have studied the effects of caffeine extensively in laboratory settings. These controlled environments have confirmed that the substance acts as a stimulant for the central nervous system. However, laboratories are artificial environments. They strip away the messy variables of daily life. They cannot easily account for social interactions, work stress, or the natural fluctuations of the biological clock.

Justin Hachenberger, a researcher at Bielefeld University in Germany, led a team to investigate these effects in the real world. The team sought to understand how caffeine interacts with an individual’s emotional state outside of the laboratory. They also wanted to see if factors like the time of day or social setting changed the outcome.

To understand the study, it is helpful to distinguish between “mood” and “affect.” In psychology, mood typically refers to a sustained emotional state that lasts for a long period. Affect refers to short-term, reactive emotional states. These are the immediate feelings a person experiences in response to a stimulus. The researchers focused specifically on momentary affect.

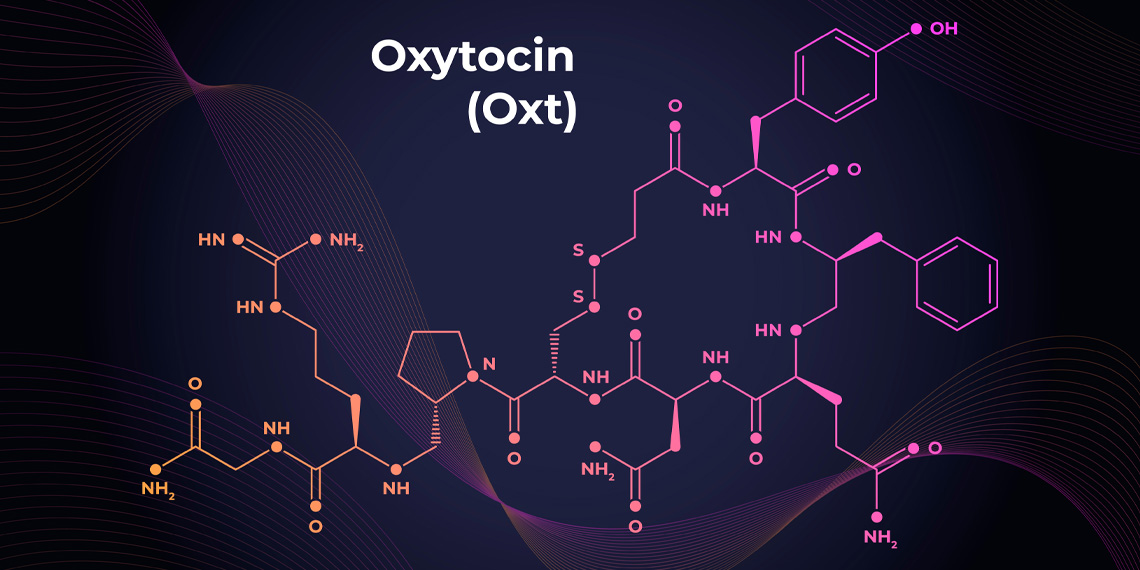

The biological mechanism behind caffeine is well understood. The substance acts as an adenosine antagonist. Adenosine is a chemical that accumulates in the brain throughout the day. It binds to specific receptors and slows down nerve cell activity. This process creates the sensation of drowsiness.

Caffeine mimics the shape of adenosine. It binds to the same receptors but does not activate them. This blocks the real adenosine from doing its job. By preventing this slowdown, caffeine allows stimulating neurotransmitters like dopamine to remain active. This leads to increased alertness and potentially improved feelings of well-being.

The researchers employed a technique known as the Experience Sampling Method. This approach involves asking participants to report on their experiences repeatedly throughout the day in their natural environments. This method reduces memory errors. Participants report what they are feeling right now rather than what they remember feeling yesterday.

The investigation consisted of two separate studies involving young adults. The first study tracked 115 participants for two weeks. The second tracked 121 participants for four weeks. Participants ranged in age from 18 to 29. They used smartphones to answer short surveys seven times a day.

In each survey, participants reported whether they had consumed any caffeinated beverages in the past 90 minutes. They also rated their current feelings. They used a sliding scale to indicate how enthusiastic, happy, or content they felt. These items combined to form a score for positive affect. They also rated how sad, upset, or worried they felt. These items formed a score for negative affect.

The data showed a clear association between caffeine and positive feelings. In both studies, participants reported higher levels of enthusiasm and happiness after consuming caffeine. The statistical analysis accounted for sleep duration and sleep quality. This suggests the boost in positive affect was not simply a result of being well-rested.

The timing of consumption played a major role in the intensity of this effect. The association between caffeine and positive affect was strongest in the first few hours after waking up. Specifically, the boost was most pronounced within 2.5 hours of rising.

This morning peak aligns with the concept of sleep inertia, the groggy transition period between sleep and full wakefulness. The researchers propose that caffeine may help individuals overcome this state more effectively, possibly by helping to jump-start the sympathetic nervous system. As the day progressed, the link between caffeine and positive feelings weakened.

The results regarding negative affect were different. The researchers hypothesized that caffeine would reduce feelings of sadness or worry. The data only partially supported this. A reduction in negative affect was observed in the second, longer study. It was not observed in the first study.

Unlike positive feelings, the reduction in negative feelings did not change based on the time of day. If caffeine helped mitigate sadness, it did so regardless of whether it was morning or evening. This suggests that the mechanisms driving positive and negative affect may differ.

The study also examined whether the context of consumption mattered. The researchers looked at whether participants were alone or with others. They also asked about levels of tiredness.

Tiredness acted as a moderator for the effect. Participants who felt more tired than usual experienced a greater increase in positive affect after consuming caffeine. This supports the common use of caffeine as a countermeasure against fatigue.

Social context also influenced the results. The link between caffeine and positive affect was weaker when participants were around other people. This finding is somewhat counterintuitive. One might expect socializing over coffee to boost mood further.

The authors suggest a “ceiling effect” might be at play. Social interaction often increases positive affect on its own. If a person is already feeling good because they are with friends, caffeine may not be able to push their positive feelings much higher. The chemical effect becomes less noticeable amidst the social stimulation.

The researchers also looked for differences based on individual traits. They collected data on participants’ habitual caffeine intake. They also screened for symptoms of anxiety and depression using standardized questionnaires.

Surprisingly, these individual differences did not alter the results. The relationship between caffeine and affect remained consistent across the board. Frequent consumers did not show a different pattern of emotional response compared to lighter users.

This challenges the “withdrawal reversal” hypothesis. Some scientists argue that caffeine only makes people feel better because it cures withdrawal symptoms. If that were the only factor, heavy users would experience a massive boost while light users would feel little. The consistency across groups suggests there may be a direct mood-enhancing effect beyond just fixing withdrawal.

Hachenberger noted this consistency in the press materials. He stated, “We were somewhat surprised to find no differences between individuals with varying levels of caffeine consumption or differing degrees of depressive symptoms, anxiety, or sleep problems. The links between caffeine intake and positive or negative emotions were fairly consistent across all groups.”

However, there are caveats to consider. The study relied on self-reports. While the sampling method is robust, it still depends on participant honesty and accuracy. The sample consisted entirely of young adults. The way an 18-year-old metabolizes caffeine may differ from that of an older adult.

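Additionally, the study is observational. It shows a correlation but cannot prove causation. It is possible that people who are already in a good mood are more likely to seek out coffee. However, the within-person analysis, which compares each participant’s reports with that same participant’s typical levels rather than with other people, helps control for this to some degree by removing stable differences between individuals, such as generally cheerful people also drinking more coffee.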
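The article does not spell out the study’s exact statistical model, so the following is only a toy illustration of the idea behind within-person analysis, using person-mean centering (one common approach) and invented numbers. The names `records`, `pooled_slope`, and `within_person_slope` are hypothetical. If cheerful people simply drink more coffee, a naive slope mixes that between-person difference into the apparent caffeine effect; centering each person’s observations on their own average removes it.

```python
from statistics import mean

# Hypothetical toy data: (person_id, caffeine_in_last_90_min, positive_affect).
# Person "A" drinks caffeine more often AND is generally more cheerful;
# within each person, caffeine is paired with exactly +1 point of affect.
records = [
    ("A", 1, 8), ("A", 1, 8), ("A", 0, 7),
    ("B", 0, 2), ("B", 0, 2), ("B", 1, 3),
]


def pooled_slope(rows):
    """Ordinary least-squares slope of affect on caffeine, ignoring who answered."""
    xs = [x for _, x, _ in rows]
    ys = [y for _, _, y in rows]
    mx, my = mean(xs), mean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def within_person_slope(rows):
    """Slope after centering caffeine and affect on each person's own means."""
    by_person = {}
    for pid, x, y in rows:
        by_person.setdefault(pid, []).append((x, y))
    num = den = 0.0
    for obs in by_person.values():
        mx = mean(x for x, _ in obs)  # this person's usual caffeine rate
        my = mean(y for _, y in obs)  # this person's usual affect level
        for x, y in obs:
            num += (x - mx) * (y - my)
            den += (x - mx) ** 2
    return num / den


print(round(pooled_slope(records), 2))         # 2.67
print(round(within_person_slope(records), 2))  # 1.0
```

In this made-up example the pooled slope (about 2.67) overstates the built-in within-person effect (1.0) because person A is both happier and a more frequent drinker. Centering does not rule out a momentary good mood prompting a coffee, which is why the result remains correlational.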

There is also the question of anxiety. High doses of caffeine can induce jitteriness and anxiety. The study did not find a link between caffeine and increased worry. However, the researchers note that individuals prone to caffeine-induced anxiety might avoid the substance entirely. These people would naturally exclude themselves from a study on caffeine consumption.

The researchers recommend future studies use more objective measures. Wearable technology could track heart rate and skin temperature. This would provide precise physiological data to match the psychological reports. Tracking the exact moment of consumption, rather than a 90-minute window, would also improve precision.

Understanding these daily fluctuations helps paint a clearer picture of human behavior. It moves the science of nutrition and psychology out of the lab and into the rhythm of daily life. For now, the data support the habit of the morning coffee, which appears to go hand in hand with more positive engagement with the day, particularly in those first groggy hours.

The study, “The association of caffeine consumption with positive affect but not with negative affect changes across the day,” was authored by Justin Hachenberger, Yu-Mei Li, Anu Realo, and Sakari Lemola.

A new study published in Social Psychological and Personality Science challenges the conventional wisdom regarding the relationship between self-discipline and happiness. The findings suggest that psychological well-being acts as a precursor to self-control rather than a result of it. This research indicates that individuals who prioritize their emotional health may be better equipped to pursue long-term goals than those who rely solely on willpower.

Psychology has traditionally viewed self-control as a prized human capacity that is essential for a successful life. The general assumption holds that the ability to resist short-term temptations in favor of long-term goals leads to better health, career success, and financial security. By extension, scholars and the public alike often assume that exercising high self-control leads to increased happiness and life satisfaction.

Despite the popularity of this belief, the scientific evidence supporting a direct causal link from self-control to well-being has been inconclusive. Many previous studies relied on correlational data, which can show that two things are related but cannot determine which one causes the other. Other studies that attempted to track these variables over time faced methodological issues that made it difficult to draw firm conclusions about directionality.

“Our work was driven by a significant gap in the existing research. For years, psychologists have operated under the strong assumption that self-control is a key driver of well-being,” said study author Lile Jia, an associate professor at the National University of Singapore and director of the Situated Goal Pursuit (SPUR) Lab.

“The narrative is that if you are more disciplined, you will be happier and more satisfied with life. However, when we examined the scientific literature, the causal evidence for this claim was surprisingly weak and fraught with issues. Most studies were correlational, and the few longitudinal studies attempting to establish causality had methodological limitations that made their conclusions ambiguous.”

“At the same time, there are strong theoretical reasons to suspect the causal arrow might point in the opposite direction,” Jia explained. “For example, Barbara Fredrickson’s ‘broaden-and-build’ theory suggests that positive emotions—a core component of well-being—broaden our mindset and help us build personal resources. We reasoned that these resources could, in turn, facilitate better self-control.”

“So, the central motivation was to rigorously test these competing causal pathways. We wanted to clarify the directionality of this important relationship between self-control and well-being using more robust statistical methods (the RI-CLPM) and a three-wave longitudinal design, which is better suited for making causal inferences than the two-wave designs used in prior work.”

The researchers conducted two separate longitudinal studies. Study 1 involved 377 working adults recruited from an Asian country. The participants were part of a larger project regarding career development and lifelong learning.

The researchers collected data from these participants at three distinct time points, with each wave separated by a six-month interval. This design allowed the team to track changes within the same individuals over a period of one year. To measure self-control, the participants completed a 20-item scale that assessed their ability to inhibit impulses, initiate work, and continue good behaviors.

For the assessment of well-being, the participants responded to a scale designed to be culturally appropriate for the population. This measure included items asking about their levels of happiness, self-worth, and appreciation for life.

The team analyzed the data with a statistical technique known as the random intercept cross-lagged panel model (RI-CLPM). This approach is significant because it separates stable personality traits from temporary fluctuations within a person. It allowed the researchers to determine whether a specific increase in well-being at one time point predicted a subsequent increase in self-control at the next time point. By isolating these within-person changes, the model provides a stronger test for potential causal influence than traditional methods.
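For readers curious about what the model estimates, a standard two-variable RI-CLPM can be sketched as follows. The notation is illustrative rather than taken from the paper, and it assumes the lagged effects are held equal across waves, the kind of constancy assumption the authors list among the study’s limitations. Here $\mathrm{SC}_{it}$ and $\mathrm{WB}_{it}$ are person $i$’s self-control and well-being at wave $t$; each score is split into a wave mean ($\mu_t$, $\pi_t$), a stable person-level random intercept ($\kappa_i$, $\omega_i$), and a within-person deviation ($p_{it}$, $q_{it}$). The cross-lagged paths link deviations at one wave to deviations at the next.

$$
\begin{aligned}
\mathrm{SC}_{it} &= \mu_t + \kappa_i + p_{it}, \\
\mathrm{WB}_{it} &= \pi_t + \omega_i + q_{it}, \\
p_{it} &= \alpha\, p_{i,t-1} + \beta\, q_{i,t-1} + u_{it}, \\
q_{it} &= \delta\, q_{i,t-1} + \gamma\, p_{i,t-1} + v_{it}, \qquad t = 2, 3.
\end{aligned}
$$

In this notation, the pattern the researchers report corresponds to a positive $\beta$ (higher-than-usual well-being predicting higher-than-usual self-control at the next wave) alongside a $\gamma$ that was not reliably different from zero.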

The results from the first study revealed a pattern that contradicted the traditional narrative. Earlier levels of self-control did not reliably predict improvements in well-being six months later. Simply exercising discipline did not appear to make participants happier in the future.

In contrast, the data supported the reverse hypothesis. Participants who reported higher levels of well-being at one time point exhibited greater self-control at the next measurement wave. Feeling well appeared to function as a precursor to functioning well.

To ensure these findings were not specific to one culture or time interval, the researchers conducted a second study. Study 2 recruited a larger sample of 1,299 working adults in the United States. This study followed a similar three-wave design but utilized a shorter time frame to capture more immediate effects.

Participants in the American sample completed surveys once a month for three consecutive months. They answered the same self-control questions used in the first study. To measure well-being, they completed a scale assessing positive feelings, optimism, and vitality.

The analysis of the American data yielded results that mirrored those of the Asian sample. Higher-than-usual self-control at one survey did not predict increased well-being a month later. The anticipated reward of happiness following disciplined behavior did not materialize in the short term.

However, the reverse relationship remained significant and positive. Individuals who felt more optimistic and energetic than usual at one survey demonstrated better self-control a month later. This replication across two different cultures and timeframes strengthens the case that the primary direction of influence flows from well-being to self-control.

“The most surprising result was the consistent lack of evidence for the popular belief that self-control predicts later well-being,” Jia told PsyPost. “Given how deeply this idea is embedded in both scientific thinking and popular culture, we expected to see at least a small effect in that direction. To find that the data from two separate studies so clearly supported only the path from well-being to self-control was quite striking. It really challenges a foundational assumption and underscores the need to re-evaluate how we think about these two critical aspects of a good life.”

The researchers conducted supplementary analyses to further check these patterns. In the first study, participants also provided daily reports of their mood and behavior for a week. These daily records showed that while positive emotions predicted self-control months later, self-control did not uniquely predict daily positive emotions when general well-being was taken into account.

The researchers propose that positive emotions may help replenish the mental energy required to resist temptations and stick to difficult tasks. When people feel good, they may be more open to challenges and better at managing conflicting goals. This aligns with the idea that well-being acts as fuel for the engine of self-control.

“The most important takeaway for the average person is to reconsider how they approach self-improvement,” Jia said. “The common advice is often to ‘just try harder’ or to focus on building discipline through sheer willpower. Our findings suggest a potentially more effective, and certainly more pleasant, alternative: prioritize your well-being to build your self-control.”

“Instead of viewing happiness as a reward you get after achieving your goals through discipline, think of well-being as the fuel that powers the engine of self-control. If you want to get better at resisting temptations, starting new projects, or sticking with good habits, a great first step is to invest in activities that make you feel happy, energetic, optimistic, and appreciative of life. Our research indicates that feeling well precedes functioning well.”

The study’s strength lies in its use of a three-wave longitudinal design across two diverse cultural samples. But as with all research, there are some limitations. The statistical framework used relies on the assumption that the relationships between variables remain constant over time. It is also possible that unmeasured third variables, such as changes in sleep, stress, or social support, could influence both well-being and self-control simultaneously.

It is also important to note that the absence of a short-term effect does not mean self-control has no relationship with happiness. “A crucial caveat is that ‘absence of evidence is not evidence of absence,’” Jia explained. “Our study failed to find a within-person causal effect of self-control on well-being, but this does not mean that self-control is unimportant for happiness altogether.

“It’s possible that having high self-control as a stable, long-term trait contributes to a person’s overall life satisfaction (a between-persons effect), even if short-term fluctuations in self-control don’t cause short-term fluctuations in well-being.”

“So, the misinterpretation to avoid is thinking ‘self-control doesn’t matter for happiness.’ A more accurate interpretation is that if you are looking for a positive change, our evidence suggests that boosting your well-being is a more direct and effective way to improve your self-control, rather than the other way around.”

Future research could explore the specific mechanisms that allow well-being to improve self-control. It may be that positive moods accelerate habit formation or enhance cognitive flexibility. Understanding these processes could lead to better interventions for people struggling with self-regulation.

“The path to greater self-control doesn’t have to be a grim, effortful struggle,” Jia added. “Instead, it can be paved with positive experiences. By actively cultivating joy, engagement, and meaning in our lives, we are not just making ourselves feel better in the moment; we are also building the psychological resources we need to be more effective and successful in the future. It places the pursuit of well-being at the very center of personal growth.”

The study, “Feeling Well, Functioning Well: How Psychological Well-Being Predicts Later Self-Control, but Not the Other Way Around,” was authored by Shuna Shiann Khoo, Lile Jia, Ismaharif Ismail, Ying Li, Liangyu Xing, and Jolynn Pek.

The brain has its own waste disposal system – known as the glymphatic system – that’s thought to be more active when we sleep.

But disrupted sleep might hinder this waste disposal system and slow the clearance of waste products or toxins from the brain. And researchers are proposing that a build-up of these toxins due to lost sleep could increase someone’s risk of dementia.

There is still some debate about how this glymphatic system works in humans, with most research so far in mice.

But it raises the possibility that better sleep might boost clearance of these toxins from the human brain and so reduce the risk of dementia.

Here’s what we know so far about this emerging area of research.

All cells in the body create waste. Outside the brain, the lymphatic system carries this waste from the spaces between cells to the blood via a network of lymphatic vessels.

But the brain tissue itself has no lymphatic vessels. And until about 12 years ago, how the brain clears its waste was a mystery. That’s when scientists discovered the “glymphatic system” and described how it “flushes out” brain toxins.

Let’s start with cerebrospinal fluid, the fluid that surrounds the brain and spinal cord. This fluid flows along the spaces surrounding the brain’s blood vessels. It then enters the spaces between the brain cells, collects waste, and carries it out of the brain along large draining veins.

Scientists then showed in mice that this glymphatic system was most active – with increased flushing of waste products – during sleep.

One such waste product is amyloid beta (Aβ) protein. Aβ that accumulates in the brain can form clumps called plaques. These, along with tangles of tau protein found in neurons (brain cells), are a hallmark of Alzheimer’s disease, the most common type of dementia.

In humans and mice, studies have shown that levels of Aβ detected in the cerebrospinal fluid increase when awake and then rapidly fall during sleep.

But more recently, another study (in mice) showed pretty much the opposite – suggesting the glymphatic system is more active in the daytime. Researchers are debating what might explain the findings.

So we still have some way to go before we can say exactly how the glymphatic system works – in mice or humans – to clear the brain of toxins that might otherwise increase the risk of dementia.

We know sleeping well is good for us, particularly our brain health. We are all aware of the short-term effects of sleep deprivation on our brain’s ability to function, and we know sleep helps improve memory.

In one experiment, a single night of complete sleep deprivation in healthy adults increased the amount of Aβ in the hippocampus, an area of the brain implicated in Alzheimer’s disease. This suggests sleep can influence the clearance of Aβ from the human brain, supporting the idea that the human glymphatic system is more active while we sleep.

This also raises the question of whether good sleep might lead to better clearance of toxins such as Aβ from the brain, and so be a potential target to prevent dementia.

What is less clear is what long-term disrupted sleep, for instance if someone has a sleep disorder, means for the body’s ability to clear Aβ from the brain.

Sleep apnoea is a common sleep disorder in which someone's breathing stops multiple times as they sleep. This can lead to chronic (long-term) sleep deprivation and reduced oxygen in the blood. Both may be implicated in the accumulation of toxins in the brain.

Sleep apnoea has also been linked with an increased risk of dementia. And we now know that after people are treated for sleep apnoea, more Aβ is cleared from the brain.

Insomnia is a condition in which someone has difficulty falling asleep and/or staying asleep. When this happens in the long term, there's also an increased risk of dementia. However, we don't know the effect of treating insomnia on toxins associated with dementia.

So again, it’s still too early to say for sure that treating a sleep disorder reduces your risk of dementia because of reduced levels of toxins in the brain.

Collectively, these studies suggest enough good-quality sleep is important for a healthy brain, and in particular for clearing toxins associated with dementia from the brain.

But we still don’t know if treating a sleep disorder or improving sleep more broadly affects the brain’s ability to remove toxins, and whether this reduces the risk of dementia. It’s an area researchers, including us, are actively working on.

For instance, we’re investigating the concentration of Aβ and tau measured in blood across the 24-hour sleep-wake cycle in people with sleep apnoea, on and off treatment, to better understand how sleep apnoea affects brain cleaning.

Researchers are also looking into the potential for treating insomnia with a class of drugs known as orexin receptor antagonists to see if this affects the clearance of Aβ from the brain.

This is an emerging field and we don’t yet have all the answers about the link between disrupted sleep and dementia, or whether better sleep can boost the glymphatic system and so prevent cognitive decline.

So if you are concerned about your sleep or cognition, please see your doctor.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Going to school helps children learn how to read and solve math problems, but it also appears to upgrade the fundamental operating system of their brains. A new analysis suggests that the structured environment of formal education leads to improvements in executive functions, which are the cognitive skills required to control behavior and achieve goals. These findings were published in the Journal of Experimental Child Psychology.

To understand why this research matters, one must first understand what executive functions are. Psychologists use this term to describe a specific set of mental abilities that allow people to manage their thoughts and actions. These skills act like an air traffic control system for the brain. They help a person pay attention, switch focus between tasks, and remember instructions.

There are three main components to this system. The first is working memory, which is the ability to hold information in your mind and use it over a short period. The second is inhibitory control. This is the ability to ignore distractions and resist the urge to do something impulsive. The third is cognitive flexibility. This allows a person to shift their thinking when the rules change or when a new problem arises.

Researchers have known for a long time that these skills get better as children get older. A seven-year-old is almost always better at sitting still and following directions than a four-year-old. The difficult question for scientists has been determining what causes this change. It is hard to tell if children improve simply because their brains are biologically maturing or if the experience of going to school actually speeds up the process.

This is the question that Jamie Donenfeld and her colleagues sought to answer. Donenfeld is a researcher at the University of Massachusetts Boston. She worked alongside Mahita Mudundi, Erik Blaser, and Zsuzsa Kaldy, who are also affiliated with the Department of Psychology at the same university. The team wanted to isolate the specific impact of the classroom environment from the natural effects of aging.

To do this, the researchers relied on a clever quirk of the educational system known as the school entry cutoff date. In many school districts, a child must turn five by a specific date, such as September 1, to enter kindergarten. This creates a natural experiment.

Consider two children who are practically the same age. One was born on August 31, and the other was born on September 2. The child born in August enters kindergarten. The child born in September must wait another year. By comparing these two groups, scientists can look at children who are virtually identical in biological maturity but have vastly different experiences with formal schooling.
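The cutoff logic can be made concrete with a short Python sketch. It assumes a hypothetical rule under which a child may start kindergarten in a given year if they turn five on or before September 1 of that year. Real districts use different dates and wording, and the function name `kindergarten_entry_year` is purely illustrative.

```python
from datetime import date

# Hypothetical rule (assumption): a child may enter kindergarten in a given
# year if they turn 5 on or before September 1 of that year.
CUTOFF_MONTH, CUTOFF_DAY = 9, 1
ENTRY_AGE = 5


def kindergarten_entry_year(birth: date) -> int:
    """Return the first year in which a child born on `birth` may enter kindergarten."""
    # Compare (month, day) tuples so February 29 birthdays need no special case.
    turns_five_by_cutoff = (birth.month, birth.day) <= (CUTOFF_MONTH, CUTOFF_DAY)
    return birth.year + ENTRY_AGE + (0 if turns_five_by_cutoff else 1)


august_child = date(2017, 8, 31)
september_child = date(2017, 9, 2)

age_gap_days = (september_child - august_child).days
schooling_gap_years = (
    kindergarten_entry_year(september_child) - kindergarten_entry_year(august_child)
)

print(f"Age difference: {age_gap_days} days")
print(f"Difference in school entry: {schooling_gap_years} year(s)")
```

Two days of age separate the children, yet one starts school a full year earlier, which is the contrast the cutoff design exploits.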

The research team did not conduct a single new experiment with a specific group of children. Instead, they performed a meta-analysis. This is a statistical method that allows scientists to combine the results of many previous studies to find a common trend. They searched through databases for studies published between 1995 and 2023.

They started with over 400 potential studies. They screened these records to find ones that met strict criteria. The studies had to compare children of similar ages who had different levels of schooling. They also had to use objective measures of executive function.

The team ultimately identified 12 studies that fit all their requirements. These studies included data from approximately 1,611 children. The participants ranged in age from about four and a half to nine years old. The studies covered various locations, including the United States, Germany, Israel, and Scotland.

By pooling the data from these different sources, the researchers calculated a standardized mean difference. This number represents the size of the “schooling effect.” The analysis revealed a small but consistent positive effect. The data showed that attending school does improve a child’s executive functions.

The improvement was not massive, but it was reliable. The researchers described the effect as modest. It suggests that the experience of school provides a unique boost to cognitive development that goes beyond just getting older.
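For readers curious about what pooling a standardized mean difference involves, here is a minimal Python sketch. It computes Hedges' g (a bias-corrected standardized mean difference) for each study and combines the results with a DerSimonian-Laird random-effects model. The article does not say which estimator or pooling model the authors used, so both choices are assumptions, and the three studies below are invented numbers, not data from the meta-analysis.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    """Summary statistics for one study (hypothetical values below)."""
    mean_school: float
    sd_school: float
    n_school: int
    mean_no_school: float
    sd_no_school: float
    n_no_school: int


def hedges_g(s: Study) -> tuple[float, float]:
    """Return (g, variance of g) for the schooled-minus-unschooled difference."""
    n1, n2 = s.n_school, s.n_no_school
    pooled_sd = math.sqrt(
        ((n1 - 1) * s.sd_school**2 + (n2 - 1) * s.sd_no_school**2) / (n1 + n2 - 2)
    )
    d = (s.mean_school - s.mean_no_school) / pooled_sd  # Cohen's d
    j = 1 - 3 / (4 * (n1 + n2) - 9)                      # small-sample correction
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return j * d, j**2 * var_d


def random_effects_pool(effects: list[tuple[float, float]]) -> tuple[float, float]:
    """DerSimonian-Laird pooled effect and its standard error."""
    gs = [g for g, _ in effects]
    vs = [v for _, v in effects]
    w = [1 / v for v in vs]                                     # fixed-effect weights
    fixed = sum(wi * gi for wi, gi in zip(w, gs)) / sum(w)
    q = sum(wi * (gi - fixed) ** 2 for wi, gi in zip(w, gs))   # heterogeneity statistic
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(gs) - 1)) / c)                    # between-study variance
    w_re = [1 / (v + tau2) for v in vs]                         # random-effects weights
    pooled = sum(wi * gi for wi, gi in zip(w_re, gs)) / sum(w_re)
    return pooled, math.sqrt(1 / sum(w_re))


studies = [
    Study(10.6, 2.0, 60, 10.0, 2.0, 55),
    Study(4.9, 1.5, 120, 4.5, 1.6, 110),
    Study(22.0, 5.0, 40, 21.5, 5.2, 45),
]
effects = [hedges_g(s) for s in studies]
pooled, se = random_effects_pool(effects)
print(f"pooled g = {pooled:.2f}, 95% CI [{pooled - 1.96 * se:.2f}, {pooled + 1.96 * se:.2f}]")
```

Weighting by the inverse of each study's variance lets larger, more precise studies count for more, while the between-study variance term (`tau2`) makes the weights more equal when results disagree across studies, for example because of differing tasks or school systems.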

The researchers also conducted a secondary analysis using the longitudinal studies in their set. These were studies that followed children over time. They compared two types of groups. The first group consisted of children who did not advance a grade level during the study period, such as those remaining in preschool. This group provided a baseline for how much executive function improves due to natural maturation alone.

The second group consisted of children who completed a grade, such as first grade, during the same timeframe. This group represented the combined effect of biological maturation plus the experience of schooling.

The results showed a clear difference. The children who experienced a year of schooling showed greater gains in executive functions than those who only grew a year older. The estimated effect size for the schooling group was higher than for the maturation-only group. This supports the idea that the classroom environment acts as a training ground for the brain.

It is important to consider why school has this effect. The authors argue that formal education places heavy demands on a child. Students must sit still for extended periods. They must listen to instructions from teachers. They have to wait their turn to speak. They must remember rules and complete tasks even when they are tired or bored.

This daily routine serves as an intense practice session for inhibitory control and working memory. The state of Massachusetts, for example, requires 900 hours of structured learning time per year. That is a massive amount of practice.

The authors compared this to commercial “brain training” games. Many companies sell video games that claim to improve cognitive skills. However, research has largely shown that these games do not work very well. Players get better at the specific game, but the skills do not transfer to real life.

The researchers suggest that school succeeds where these games fail because of the intensity and duration of the experience. A few hours of gaming cannot compare to hundreds of hours of managing one’s behavior in a social classroom setting. The context of school is immersive. It requires children to use their executive functions in real-world situations to achieve social and academic goals.

There are limitations to this study that should be noted. The number of studies included in the final analysis was relatively small. Finding research that strictly followed the cutoff-date design is difficult. This means the total pool of participants was not as large as it is in some medical meta-analyses.

The studies also used a wide variety of tasks to measure executive functions. Some used memory games involving numbers. Others used tasks where children had to sort cards by changing rules. Some tested inhibitory control by asking children to touch their toes when told to touch their head.

This variety makes it harder to compare results perfectly across different papers. The educational systems in the different countries also vary. Kindergarten in Switzerland might focus more on play than kindergarten in the United States. This could influence how much “training” the children actually receive.

The authors also noted that they could not examine specific transitions in detail. It is possible that the jump from preschool to kindergarten has a bigger impact than the move from first to second grade. The current data did not allow them to break down the results by specific grade levels with high precision.

Future research is needed to understand which parts of schooling are the most effective. It might be the structured curriculum. It might be the social interaction with peers. It might be the relationship with the teacher. Understanding the specific mechanisms could help educators design classrooms that better support cognitive development.

The researchers also point out that the tests used in these studies are laboratory tasks. They are artificial by nature. Future studies should try to measure how children use these skills in real-world scenarios. We need to know if better scores on a memory test translate to better behavior on the playground or at home.

The study, “School changes minds: A meta-analysis shows that schooling modestly improves children’s executive functions,” was authored by Jamie Donenfeld, Mahita Mudundi, Erik Blaser, and Zsuzsa Kaldy.

It's not just a hallucinogen.

It's not just a hallucinogen.

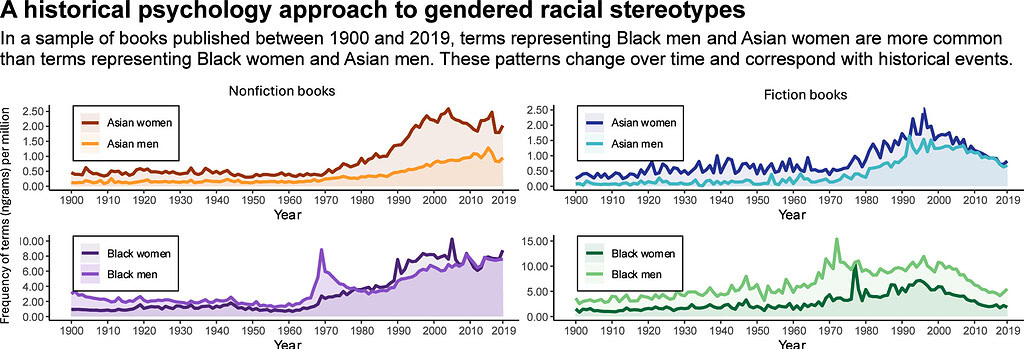

A comprehensive analysis of English-language literature published over the last century reveals distinct patterns in how race and gender intersect within written text. The findings suggest that Black women and Asian men have historically appeared less frequently in books compared to Black men and Asian women, a phenomenon that aligns with psychological theories regarding social invisibility.

The research also provides evidence that these representational trends are not static and appear to shift in response to major historical events. These findings were published in the journal Current Research in Ecological and Social Psychology.

Joanna Schug, an associate professor at William & Mary, led the research team. She collaborated with Monika Gosin from the University of California San Diego and Nicholas P. Alt from Occidental College to investigate these long-term cultural trends. The study aimed to apply a historical lens to psychological theories that have typically been tested in laboratory settings.

Scholars have previously developed the concept of gendered race theory to explain how society perceives different groups. This framework suggests that the racial category “Black” is often cognitively associated with masculinity. Conversely, the racial category “Asian” is frequently associated with femininity.

These mental associations can lead to a phenomenon known as intersectional invisibility. This theory posits that individuals who do not fit the prototypical stereotypes—specifically Black women and Asian men—are often overlooked or marginalized. Because they do not align with the dominant gendered stereotypes of their racial groups, they may become less visible in cultural representations.

Prior experiments have supported these theories by showing that people are more likely to forget statements made by Black women or Asian men compared to other groups. Schug and her colleagues sought to determine if this psychological bias extended to cultural artifacts. They investigated whether these patterns of invisibility could be quantified in millions of books published over a 120-year period.

To conduct this analysis, the researchers utilized the Google Books Ngram dataset. This massive digital archive contains word frequency data from over 15 million books published between 1900 and 2019. The team examined two specific collections within this dataset: a general corpus of English-language books and a specific corpus containing only fiction texts.

The investigators tracked the frequency of specific phrases, known as “ngrams,” that combine racial and gender identifiers. They searched for terms such as “Black woman,” “Black man,” “Asian woman,” and “Asian man.” To ensure the search was comprehensive, they included various synonyms and historical terms relevant to different time periods.

For the category of Black individuals, the search included terms like “African American” and older designations that were common in the early 20th century. For Asian individuals, the researchers included specific ethnic groups such as Chinese, Japanese, Korean, and Vietnamese. They calculated the raw frequency of these terms to compare their prevalence in fiction versus nonfiction works.
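Combining synonyms into a single category count amounts to summing per-year counts across phrases. The Python sketch below illustrates that step with invented counts and a small illustrative subset of phrases. It does not reproduce the authors' term list, and it assumes the phrases have already been lowercased and loaded into a dictionary; the raw Ngram files have their own format, which is not modeled here.

```python
from collections import defaultdict

# Invented per-year match counts for a few phrases (illustration only).
ngram_counts = {
    "black woman": {1950: 120, 1990: 900},
    "african american woman": {1950: 5, 1990: 400},
    "black man": {1950: 300, 1990: 1100},
    "african american man": {1950: 4, 1990: 250},
}

# Category -> synonym phrases (an illustrative subset, not the study's list).
categories = {
    "Black women": ["black woman", "african american woman"],
    "Black men": ["black man", "african american man"],
}


def category_totals(counts: dict, categories: dict) -> dict:
    """Sum the yearly counts of every phrase that belongs to each category."""
    totals = {}
    for label, phrases in categories.items():
        per_year = defaultdict(int)
        for phrase in phrases:
            for year, n in counts.get(phrase, {}).items():
                per_year[year] += n
        totals[label] = dict(per_year)
    return totals


totals = category_totals(ngram_counts, categories)
for label, per_year in totals.items():
    print(label, dict(sorted(per_year.items())))
```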

The results from the first part of the study provided evidence supporting the existence of representational invisibility in literature. Throughout the majority of the 20th century, terms referring to Black men appeared more often than terms referring to Black women. This gap was present in both fiction and nonfiction texts.

Similarly, the analysis showed a consistent disparity in representations of Asian identities. References to Asian women generally outnumbered references to Asian men. This pattern persisted across the studied time period, although the gap was particularly pronounced in nonfiction books starting in the 1990s.

The researchers argue that these patterns reflect deep-seated historical stereotypes. For example, historical labor laws and immigration policies often restricted Asian men to domestic roles, which may have contributed to feminized stereotypes. In contrast, historical narratives surrounding Black identity have often focused on men, particularly in the context of labor and political struggle.

The study also included a comparison with White gender categories. The data showed that references to White men far exceeded references to White women. This finding aligns with the concept of androcentrism, where men are treated as the default representation of a group.

While the general patterns supported the theory of intersectional invisibility, the researchers observed a notable shift beginning in the late 20th century. In nonfiction books, references to Black women began to increase substantially around 1980. Eventually, the frequency of terms for Black women surpassed those for Black men in nonfiction texts.

To understand the drivers behind these shifts, the authors conducted a second study. They hypothesized that specific social movements might be influencing how often these groups were mentioned in print. They focused on the Civil Rights Movement and the Black Feminist movement.

The team identified key terms associated with these movements. For the Civil Rights Movement, they tracked phrases like “Civil Rights Movement” and “Black Power.” For the Black Feminist movement, they tracked terms such as “Black feminist” and “womanist.”

They then used statistical models to analyze the relationship between these movement-related terms and the frequency of race-gender categories over time. The analysis examined whether a rise in social movement terminology corresponded with a rise in the visibility of specific groups.

The findings indicated a strong link between the Civil Rights Movement and the representation of Black men. Increases in terms related to Civil Rights were positively associated with increases in references to Black men in both fiction and nonfiction. This suggests that the discourse of this era primarily elevated the visibility of Black men.

In contrast, the Civil Rights terminology did not show a significant positive association with references to Black women. This aligns with critiques from scholars like Kimberlé Crenshaw. Crenshaw has argued that antiracist efforts during that era often focused on the experiences of Black men, while feminist efforts often focused on White women.

However, the data revealed a different pattern regarding the Black Feminist movement. The rise in terms associated with Black Feminism was a significant predictor of increased references to Black women. This effect was particularly strong in nonfiction texts.

This suggests that the Black Feminist movement played a role in correcting the historical invisibility of Black women in literature. As scholars and activists began to produce more work centered on the experiences of Black women, the language in published books shifted to reflect this focus.

The study did observe some differences between fiction and nonfiction. For instance, while Black Feminism terms predicted more mentions of Black women in nonfiction, they were negatively associated with mentions of Black men in fiction. This indicates that different genres may respond to cultural shifts in distinct ways.

The researchers note that the patterns for Asian men and women remained relatively stable compared to the shifts seen for Black men and women. The representation of Asian men remained lower than that of Asian women throughout most of the period. The authors suggest that future research could investigate if specific Asian American social movements have had similar effects on representation.

But there are some limitations to consider. The Google Books dataset, while vast, is not a perfect representation of all culture. It tends to overrepresent academic and scientific publications, which might skew the results toward scholarly discourse rather than everyday language.

Additionally, the study is correlational. This means that while the rise in social movement terms coincides with changes in representation, it does not definitively prove that the movements caused the changes. Other unmeasured societal factors could have contributed to these trends.

The researchers also point out the complexity of the term “Asian” in their analysis. The study primarily utilized terms related to East Asian identities. This focus means the findings may not fully capture the experiences of South Asian or Southeast Asian groups.

Despite these limitations, the study offers new insights into how cultural stereotypes are preserved and challenged over time. It provides empirical evidence that the “invisibility” of certain groups is not just a theoretical concept but a measurable phenomenon in the written record.

The findings also highlight the potential of social movements to alter widespread cultural narratives. The increase in references to Black women following the rise of Black Feminism suggests that concerted intellectual and political efforts can successfully challenge representational biases.

Future research could build on this work by using more advanced text analysis methods. Newer techniques could examine the context in which these words appear, rather than just their frequency. This would allow for a deeper understanding of the quality of representation, beyond just the quantity.

The study, “A historical psychology approach to gendered racial stereotypes: An examination of a multi-million book sample of 20th century texts,” was authored by Joanna Schug, Monika Gosin, and Nicholas P. Alt.

Recent analysis of federal health data suggests that the recreational use of LSD is associated with a lower likelihood of alcohol use disorder. This finding stands in contrast to the other psychedelic substances examined, which showed no similar protective link when used in the past year. The results were published recently in the Journal of Psychoactive Drugs.

Alcohol use disorder affects millions of adults and stands as one of the most persistent public health challenges in the United States. The condition involves a pattern of alcohol consumption that leads to clinically detectable distress or impairment. Individuals with this disorder often find themselves unable to control their intake despite knowing it causes physical or social harm. Standard treatments exist, but relapse rates remain high. Consequently, medical researchers are exploring alternative therapeutic avenues.

In recent years, attention has shifted toward the potential utility of psychedelic compounds. Substances such as psilocybin and MDMA have shown promise in controlled clinical trials for treating various psychiatric conditions. However, there is a substantial distinction between administering a drug in a hospital with trained therapists and taking a drug recreationally. James M. Zech, a researcher at Florida State University, sought to investigate this difference. Zech collaborated with Jérémie Richard from Johns Hopkins School of Medicine and Grant M. Jones from Harvard University.

The team aimed to determine if the therapeutic signals seen in small clinical trials would appear in the general population. They utilized data from the National Survey on Drug Use and Health. This government project recruits a representative group of American citizens to answer detailed questions about their lifestyle and health. The researchers pooled data collected from 2021 through 2023. The final dataset included responses from 139,524 adults.

To ensure accuracy, the investigators did not simply look at who used drugs and who drank alcohol. They employed statistical models designed to account for confounding factors. They adjusted their calculations for variables such as age, biological sex, income, and education level. They also controlled for the use of other substances, including tobacco and cannabis. This process helped them isolate the specific relationship between psychedelics and alcohol problems.

The researchers assessed whether participants met the diagnostic criteria for alcohol use disorder within the past year. They also looked at the severity of the disorder by counting the number of symptoms reported. These symptoms range from experiencing cravings to neglecting responsibilities due to drinking.

The analysis revealed a distinct association regarding lysergic acid diethylamide, better known as LSD. Adults who reported using LSD in the past year were significantly less likely to meet the criteria for alcohol use disorder. The adjusted odds ratio indicated roughly 30 percent lower odds of having the disorder compared to non-users. Among those who did have the disorder, LSD users reported approximately 15 percent fewer symptoms.
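Because this result is an odds ratio, the 30 percent figure describes odds rather than probability. The minimal Python sketch below converts a baseline probability into the probability implied by an odds ratio, assuming a value of 0.70 (what a 30 percent reduction in odds corresponds to). The baseline prevalences are invented for illustration; the survey's actual prevalence is not reported here.

```python
def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability implied by multiplying the baseline odds by `odds_ratio`."""
    baseline_odds = baseline_prob / (1 - baseline_prob)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1 + new_odds)


ODDS_RATIO = 0.70  # assumed value matching "30 percent lower odds"

# Hypothetical baseline prevalences of alcohol use disorder among non-users.
for p0 in (0.05, 0.10, 0.30):
    p1 = apply_odds_ratio(p0, ODDS_RATIO)
    print(f"baseline {p0:.0%} -> {p1:.1%} (relative drop {1 - p1 / p0:.1%})")
```

With these invented baselines, the relative drop in probability comes out to roughly 29, 28, and 23 percent. It is close to 30 percent when the condition is uncommon and shrinks as the condition becomes more common.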

The study did not find the same pattern for other popular substances. The researchers analyzed the use of MDMA and ketamine over the same twelve-month period. Neither of these drugs showed a statistical association with the presence or absence of alcohol use disorder. This suggests that the potential protective effect observed with LSD might be specific to that compound or the context in which it is typically used.

A more complex picture emerged when the team examined lifetime usage histories. The survey asked participants if they had ever used certain drugs, even if they had not done so recently. Individuals who had used psilocybin or MDMA at any point in their lives were actually more likely to meet the criteria for alcohol use disorder in the past year. In contrast, lifetime use of DMT was linked to a lower probability of having the disorder.

These contradictory findings highlight the difficulty of interpreting observational data. The researchers propose several theories to explain why lifetime psilocybin use might track with higher alcohol problems while past-year LSD use tracks with lower ones. It is possible that individuals with existing substance use issues are more inclined to experiment with psilocybin.

Another possibility involves the nature of the psychedelic experience itself. While clinical trials optimize the setting to ensure a positive outcome, recreational use carries risks. The authors note that unsupervised trips can sometimes be distressing or psychologically destabilizing. If a person has a negative experience, they might increase their alcohol consumption as a way to cope with the resulting stress.

Conversely, the potential benefits of LSD could stem from psychological shifts often reported by users. Previous studies indicate that psychedelics can alter personality traits. Users often report increased “openness” and decreased “neuroticism” after a profound experience. If LSD facilitates such changes more reliably in naturalistic settings, it could theoretically reduce the psychological drivers of heavy drinking.

These results contribute to a growing body of literature that often points in different directions. For example, a survey of Canadian adults previously found that people self-reported large reductions in alcohol use after taking psychedelics. In that study, respondents specifically cited psilocybin as the most effective agent for change. The discrepancy between that survey and the current findings underscores the difference between self-perception and objective diagnostic criteria.

Clinical research has also provided evidence for the efficacy of psilocybin, provided it is administered professionally. A small trial conducted in Denmark tested a single high dose of psilocybin on patients with severe alcohol use disorder. In that experiment, patients received psychological support before and after the session. The clinicians observed a reduction in heavy drinking days and cravings.

The contrast between the clinical success of psilocybin and the negative association found in the general population data is noteworthy. It suggests that the element of therapy and professional guidance may be essential for achieving therapeutic outcomes. Without the safety net of a clinical setting, the risks of using these powerful substances may outweigh the benefits for some individuals.

There are some limitations to the current study that affect how the results should be viewed. The analysis is cross-sectional, meaning it captures a snapshot in time rather than following people forward. As a result, the researchers cannot prove that LSD causes a reduction in drinking. It is equally possible that people who choose to use LSD simply have different lifestyle patterns that protect them from alcohol addiction.

The study also faced constraints regarding the data available. The federal survey only asked about past-year use for a subset of drugs. For psilocybin, the survey only asked about lifetime use. This prevented the researchers from seeing if recent psilocybin use might have shown a positive benefit similar to LSD. Additionally, the data relies on self-reporting. Participants may not always be truthful about their involvement with illegal substances or the extent of their alcohol consumption.

The researchers emphasize the need for longitudinal studies in the future. Tracking individuals over many years would clarify the order of events. It would show whether psychedelic use typically precedes a change in drinking behavior. The authors also suggest that future research should measure the dosage and frequency of use. Understanding whether a person took a substance once or heavily and repeatedly is necessary to fully understand the risks and benefits.

The study, “The Relationship Between Psychedelic Use and Alcohol Use Disorder in a Nationally Representative Sample,” was authored by James M. Zech, Jérémie Richard, and Grant M. Jones.

Recent trends in popular culture suggest that sexual behaviors involving physical force, such as choking or spanking, have moved from the fringes into the mainstream. A new study involving a nationally representative sample of adults provides evidence that these practices are widespread in the United States, particularly among younger generations. Published in the Archives of Sexual Behavior, the findings indicate that while many adults engage in these acts consensually, a significant portion of the population has also experienced them without permission.

The prevalence of “rough sex” appears to have increased over the last decade. Depictions of these behaviors have become common in television, music, and social media. This visibility may lead to the perception that such practices are a standard or expected part of sexual intimacy. While these acts can enhance pleasure and intimacy for many, public health professionals have raised questions about safety and consent.

Previous attempts to measure these behaviors have often faced methodological hurdles. Many earlier surveys relied on data that is now outdated or focused exclusively on college students, limiting the ability to apply findings to the general public. Other studies used non-probability samples, such as online opt-in panels, which may not accurately reflect the broader population. Additionally, standard public health surveys often focus on disease prevention and pregnancy, omitting specific questions about acts like choking or slapping.

Debby Herbenick, a professor at the Indiana University School of Public Health, led the new research. Herbenick and her colleagues sought to fill the gaps in existing literature by collecting current data from a diverse range of ages and backgrounds. Their objective was to provide precise estimates of how many Americans engage in these behaviors and to identify demographic factors associated with them.

To achieve this, the researchers analyzed data from the 2022 National Survey of Sexual Health and Behavior. This survey is a recurring project that gathers detailed information on the sexual lives of Americans. The team used the Ipsos KnowledgePanel to recruit participants. This panel utilizes address-based sampling methods to create a pool of respondents that is statistically representative of the United States non-institutionalized adult population.

The final sample consisted of 9,029 adults between the ages of 18 and 94. The survey presented participants with a list of ten specific sexual behaviors. These included hair pulling, biting, face slapping, genital slapping, light spanking, hard spanking, choking, punching, name-calling, and smothering. The researchers avoided using the potentially ambiguous term “rough sex” in the questions. Instead, they asked about each specific act individually.

Participants reported their experiences in three distinct contexts. They indicated if they had performed these acts on a partner. They also indicated if a partner had done these acts to them with permission or consent. Finally, they reported if a partner had done these acts to them without permission or consent.

The results indicated that engagement in these behaviors is common. Approximately 48 percent of women and 61 percent of men reported having ever performed at least one of the listed behaviors on a partner. When it came to receiving these acts with consent, about 54 percent of women and 46 percent of men reported having at least one such experience.

Age emerged as a strong predictor of engagement. The researchers observed a substantial divide between adults under the age of 40 and those in older cohorts. Younger adults were significantly more likely to report both performing and receiving these behaviors. For instance, while choking a partner was rarely reported by men over the age of 50, it was a common experience for men in their 20s and 30s.

The types of behaviors reported varied in intensity. Biting and light spanking were among the most common activities reported by all groups. More intense behaviors, such as punching or smothering, were reported less frequently.

Gender patterns in the data generally aligned with traditional roles. Men were more likely to report being the ones to perform the acts, such as spanking or choking a partner. Conversely, women were more likely to report being on the receiving end of these behaviors. This suggests that even within practices considered “kinky” or alternative, mainstream participation often mirrors conventional active-male and passive-female scripts.

Transgender and gender nonbinary participants reported high rates of engagement across all categories. About 71 percent of these individuals reported ever performing at least one of the acts on a partner. Similarly, roughly 72 percent reported receiving at least one of the acts with consent.

One of the most concerning findings related to non-consensual experiences. The survey revealed that a substantial number of adults have been subjected to rough sex behaviors without their agreement. Approximately 20 percent of women reported that a partner had performed at least one of the ten behaviors on them without permission.

The rates of non-consensual experiences were also notable for men, with about 16 percent reporting such incidents. The risk was highest for transgender and gender nonbinary individuals. Approximately 35 percent of this group reported experiencing at least one of the behaviors without consent.

These findings align with and expand upon several lines of previous inquiry regarding rough sex. For example, a 2024 study by Döring and colleagues surveyed a national sample of German adults using an online panel. They found a lifetime prevalence of rough sex involvement of 29 percent. Similar to the current U.S. study, the German researchers identified a steep age gradient. Younger participants were much more likely to engage in these acts than older cohorts.

The German study also mirrored the gendered nature of these interactions observed in the U.S. data. Döring’s team found that men were significantly more likely to take an active role, while women were more likely to take a passive role. This consistency across the two Western nations suggests that the rise of rough sex is occurring within the boundaries of traditional gender expectations rather than subverting them.

Earlier research involving U.S. college students also provides context for the current findings. A 2021 study by Herbenick and colleagues found that nearly 80 percent of sexually active undergraduates had engaged in rough sex.

The most common behaviors identified in that probability sample (choking, hair pulling, and spanking) match the most prevalent behaviors in the new national adult study. The extremely high rates among college students align with the age-related trends seen in the adult data. It appears that emerging adults are the primary demographic driving these statistics.

Research from an evolutionary psychology perspective offers potential explanations for why these behaviors are occurring. Studies by Burch and Salmon have suggested that consensual rough sex is often driven by a desire for novelty rather than aggression. Their work with undergraduates indicated that people who consume pornography are more likely to seek out these novel experiences. They also found that men were more likely to initiate rough sex in response to feelings of jealousy.

Burch and Salmon’s findings framed these behaviors as largely recreational and resulting in little physical injury. The current study complicates that narrative. While many respondents reported consensual engagement, the high rates of non-consensual experiences indicate that these behaviors are not always harmless play. The prevalence of non-consensual choking and slapping suggests a darker side to the normalization of rough sex that novelty-seeking theories may not fully address.

The researchers pointed out several limitations to their study. The list of ten behaviors may not capture the full spectrum of what individuals consider to be rough sex. Additionally, the survey did not measure the “wantedness” of the acts. It is possible for an act to be consensual but not necessarily desired or enjoyed, and the study did not make this distinction.

The study also grouped bisexual and pansexual individuals together for analysis. This decision was made due to sample sizes but may obscure unique experiences within these distinct identities. Furthermore, the reliance on self-reported data means that memory recall could influence the accuracy of the lifetime prevalence estimates.

Future research could explore the nuances of consent in these scenarios. The researchers suggest investigating how partners communicate boundaries regarding specific acts like choking or slapping. Understanding the context in which non-consensual acts occur, whether as part of an otherwise consensual encounter or as distinct assaults, is a priority for public health.

The study, “Prevalence and Demographic Correlates of “Rough Sex” Behaviors: Findings from a U.S. Nationally Representative Survey of Adults Ages 18–94 Years,” was authored by Debby Herbenick, Tsung‑chieh Fu, Xiwei Chen, Sumayyah Ali, Ivanka Simić Stanojević, Devon J. Hensel, Paul J. Wright, Zoë D. Peterson, Jaroslaw Harezlak, and J. Dennis Fortenberry.

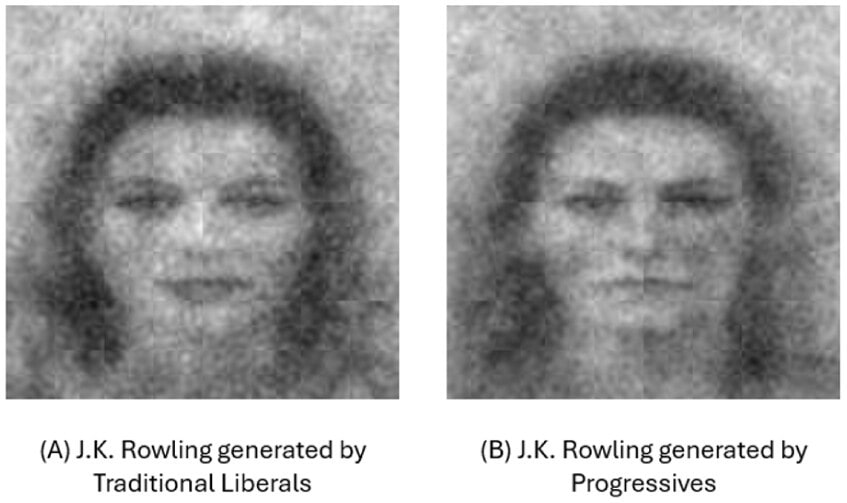

New research published in the Personality and Social Psychology Bulletin reveals a psychological split within the political left regarding perceptions of in-group dissenters. The study indicates that self-identified Progressives and Traditional Liberals generate fundamentally different mental images of author J.K. Rowling based on her views regarding gender identity. While Progressives conceptualize Rowling as appearing cold and right-wing, Traditional Liberals visualize her in a warm and positive light.

Political psychology has historically focused on the ideological conflict between the Left and the Right. Scholars have frequently characterized right-wing individuals as more prone to rigidity and hostility toward out-groups. However, recent academic inquiries have shifted focus to the increasing fragmentation within the left-wing itself. This internal division is often categorized into two distinct subgroups: Progressives and Traditional Liberals.

Elena A. Magazin, Geoffrey Haddock, and Travis Proulx from Cardiff University conducted this research to investigate how these two groups perceive ideological dissenters from within their own ranks. The researchers utilized the Progressive Values Scale (PVS) to distinguish between the groups.

This scale identifies Progressives as those who emphasize mandated diversity, concern over cultural appropriation, and the public censure of offensive views. In contrast, Traditional Liberals tend to favor free expression and gradual institutional change over activist approaches.

The primary objective was to determine if the tendency to derogate—or negatively perceive—others extends to members of one’s own political group who hold controversial views. J.K. Rowling served as the focal point for this investigation.

Rowling is a prominent figure who has historically supported left-wing causes but has recently expressed “gender critical” views that conflict with the “gender self-identification” stance held by many on the Left. The researchers sought to visualize how these political orientations shape the mental representations of such a figure.

The researchers employed a technique known as reverse correlation to capture these internal mental images. This method allows scientists to visualize a participant’s internal representation of a person or group without asking them to draw or describe features explicitly. In the first study, the team recruited 82 left-wing university students in the United Kingdom to act as “generators.”

During the image generation phase, participants viewed pairs of faces derived from a neutral base image overlaid with random visual noise. For each pair, they selected the face that best resembled their mental image of J.K. Rowling. By averaging the selected images across hundreds of trials, the researchers created composite “classification images” representing the average visualization of Rowling for Progressives and Traditional Liberals respectively.
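The averaging step behind a classification image can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: it assumes the common two-image design in which each pair shows the base face plus a noise pattern and the base face minus that same pattern, and it replaces human choices with a simulated observer holding a hypothetical internal "template." The functions `classification_image` and `simulate_choices`, and the variable `template`, are hypothetical names chosen for this sketch.

```python
import numpy as np


def classification_image(base, noises, chose_positive):
    """Average the chosen noise patterns and add the result to the base face.

    base: (H, W) array, the neutral base image.
    noises: (T, H, W) array, one random noise pattern per trial.
    chose_positive: (T,) bool array; True if the base+noise face was picked,
        False if the base-noise (inverted) face was picked.
    Returns the (unscaled) classification image and the averaged noise.
    """
    # An inverted choice counts as selecting the negated noise pattern.
    signs = np.where(chose_positive, 1.0, -1.0)
    mean_noise = (signs[:, None, None] * noises).mean(axis=0)
    return base + mean_noise, mean_noise


def simulate_choices(noises, template, internal_noise_sd, rng):
    """Hypothetical observer: picks base+noise when the noise resembles the
    internal template (positive dot product), with some decision noise."""
    n_trials = noises.shape[0]
    scores = noises.reshape(n_trials, -1) @ template.ravel()
    scores += rng.normal(0.0, internal_noise_sd, size=n_trials)
    return scores > 0


rng = np.random.default_rng(42)
H = W = 32
n_trials = 1000

base = np.full((H, W), 0.5)                  # stand-in for a neutral face
template = rng.standard_normal((H, W))       # hypothetical mental image
noises = rng.standard_normal((n_trials, H, W))

choices = simulate_choices(noises, template, internal_noise_sd=10.0, rng=rng)
ci, mean_noise = classification_image(base, noises, choices)

# How closely does the averaged noise recover the observer's hidden template?
r = np.corrcoef(mean_noise.ravel(), template.ravel())[0, 1]
print(f"correlation with hidden template: {r:.2f}")
```

In a real study the choices come from participants rather than a simulated template. Producing one image per group (for example, Progressives versus Traditional Liberals) would amount to pooling each group's selections separately before averaging; the simulation only shows why averaging chosen noise tends to recover what the chooser had in mind.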

A separate group of 178 undergraduates then served as “raters.” These participants evaluated the resulting composite images on various character traits, such as warmth, competence, morality, and femininity. The raters were unaware of how the images were generated or which political group created them.

The results from Study 1 provided evidence of a stark contrast in perception. The image of Rowling generated by Progressives was rated as cold, incompetent, immoral, and relatively masculine. Raters also perceived this face as appearing “right-wing” and prejudiced.

On the other hand, the image generated by Traditional Liberals was evaluated positively across these dimensions. It appeared warm, competent, feminine, and distinctly left-wing. This suggests that while Progressives mentally penalized the dissenter, Traditional Liberals maintained a flattering perception of her.

To ensure these findings were not limited to a specific demographic or location, the researchers conducted a second study with a more diverse sample. Study 2 involved 382 adults from the United States. This experiment aimed to replicate the findings and expand upon them by including abstract targets alongside concrete ones.

Participants were asked to generate images for four different categories. These included specific public figures, such as J.K. Rowling (representing gender critical views) and Lady Gaga (representing gender self-identification views). They also generated images for generalized, abstract descriptions of a “fellow left-winger” who held either gender critical or self-identification beliefs.

Following the generation phase, a separate group of 301 participants rated the eight resulting composite images (one for each of the four targets from each of the two generator groups). The findings from the second study reinforced the patterns observed in the first. In general, faces representing gender critical views were rated more negatively than those representing self-identification views. This aligns with the general left-wing preference for the self-identification model.

However, the degree of negativity varied by generator type. Progressives consistently generated gender critical faces that were evaluated more harshly than those generated by Traditional Liberals. This held true for both the abstract descriptions and the specific example of J.K. Rowling.

A specific divergence occurred regarding the concrete representation of Rowling. Consistent with the UK study, US Progressives generated a negative image of the author. In contrast, US Traditional Liberals generated an image that raters viewed as warm, competent, and moral. This occurred even though Traditional Liberals generated a negative image for the abstract concept of a gender critical person.

This discrepancy suggests a nuanced psychological process for Traditional Liberals. While they may disagree with the abstract views Rowling holds, their mental representation of her as an individual remains protected by a “benevolent exterior.” They appear to separate the person from the specific ideological disagreement in a way that Progressives do not.

The researchers also noted an unexpected pattern regarding gender perception. In both studies, the images of Rowling generated by Progressives were rated as looking less feminine and more masculine than those generated by Traditional Liberals. This finding implies that the devaluation of a target may involve stripping away gender-congruent features.

The research has several limitations. The first study relied heavily on a student population that was predominantly female and white. While the second study expanded the demographic range, both studies focused exclusively on the issue of gender identity. It remains unclear if this pattern of intra-left derogation would apply to other contentious topics, such as economic policy or foreign affairs.