An Introduction to Zero-Knowledge Proofs for Developers

Imagine proving you know a secret password without revealing the password itself. Or verifying someone is over 18 without exposing a birth date. That may sound impossible, yet zero-knowledge proofs make it practical. This cryptographic approach is reshaping how we design privacy and verification across modern networks.

For blockchain developers, understanding zero-knowledge proofs is moving from optional to required. ZK technology drives scalable execution with zk-rollups, privacy-preserving DeFi flows, selective disclosure for identity, and audit-grade compliance. If you already know smart contracts and consensus, ZK sits next to them as a core skill.

Most explanations either oversimplify with metaphors that do not help you build, or they jump into heavy math that stalls real progress. This guide stays developer-first. We will connect the core ideas to production patterns, cover trade-offs between popular proof systems, and show how to start building with Circom, SnarkJS, Noir, and related stacks.

What Is a Zero-Knowledge Proof?

A zero-knowledge proof is a protocol where a prover convinces a verifier that a statement is true, while revealing no additional information beyond the truth of that statement.

Consider a Sudoku example that maps cleanly to cryptographic commitments. You commit to your full solution in a hidden form, the verifier challenges a few rows, columns, or boxes, you reveal only those parts, and repeat enough times that cheating becomes overwhelmingly unlikely. The verifier never sees the full solution, yet gains strong confidence that you have one.
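The commit and reveal step can be sketched with a salted hash commitment. This is a minimal illustration, not a full protocol: the 4x4 grid, the function names, and the use of SHA-256 are assumptions made for the example, and a real Sudoku proof would also shuffle the digits every round so that opened cells leak nothing about the actual solution.

```python
import hashlib
import secrets

def commit(value: bytes) -> tuple[bytes, bytes]:
    """Return (commitment, nonce). The random nonce hides the value, the hash binds it."""
    nonce = secrets.token_bytes(32)
    return hashlib.sha256(nonce + value).digest(), nonce

def open_commitment(commitment: bytes, nonce: bytes, value: bytes) -> bool:
    """Check that (nonce, value) is the pair that was committed to."""
    return hashlib.sha256(nonce + value).digest() == commitment

# Prover: commit to every cell of a hypothetical 4x4 solution.
solution = [1, 2, 3, 4, 3, 4, 1, 2, 2, 1, 4, 3, 4, 3, 2, 1]
committed = [commit(bytes([cell])) for cell in solution]
public_commitments = [c for c, _ in committed]  # this is all the verifier sees

# Verifier challenges row 0, prover opens only those four cells.
opened = [(i, solution[i], committed[i][1]) for i in range(4)]

# Verifier: every opening matches its commitment and the row has no repeats.
ok = all(open_commitment(public_commitments[i], nonce, bytes([v]))
         for i, v, nonce in opened)
row_valid = sorted(v for _, v, _ in opened) == [1, 2, 3, 4]
```

The prover cannot swap a cell after seeing the challenge, because a different value would no longer hash to the published commitment.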

On blockchains, this enables powerful patterns. You can prove that an account has sufficient funds without revealing balances. You can show that a computation ran correctly without re-executing it on-chain. You can demonstrate that a user satisfies a policy without exposing raw personal data.

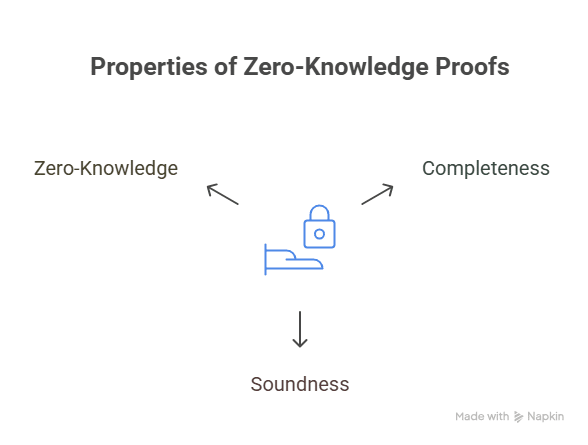

The Three Properties That Define Zero-Knowledge Proofs

- Completeness: If the statement is true and both sides follow the protocol, the verifier accepts the proof.

- Soundness: If the statement is false, a cheating prover cannot convince the verifier except with negligible probability.

- Zero-knowledge: The verifier learns nothing beyond the truth of the statement. No secrets are leaked, no hints are exposed.

In production, violating any one of these can be costly. A completeness bug rejects honest users who hold valid witnesses. A soundness bug, most often a missing constraint, lets invalid states or counterfeit proofs through. A zero-knowledge leak discloses private data. Treat these properties as non-negotiable.

Interactive vs Non-Interactive ZKPs

Academic texts often start with interactive protocols, where the verifier sends random challenges and the prover responds across multiple rounds. This helps with intuition, yet it is not ideal for public blockchain environments that need one-shot verification.

Non-interactive zero-knowledge proofs solve that limitation. The prover creates a single artifact that anyone can verify at any time. The key trick is the Fiat-Shamir heuristic, which replaces the verifier's live randomness with a cryptographic hash over the transcript so far. The prover derives each challenge from that hash, then packages everything into one proof. Validators or auditors verify the object without multi-round communication.

Why this matters on-chain: thousands of nodes cannot engage in live back-and-forth. They need a single, self-contained proof that every node can verify independently and cheaply, reaching the same result.
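To make the hash-derived challenge concrete, here is a minimal sketch of a Schnorr proof of knowledge of a discrete logarithm, made non-interactive with Fiat-Shamir. The group parameters are toy values chosen for readability and are far too small for real use, and the function names are hypothetical.

```python
import hashlib
import secrets

# Toy group (an assumption for this sketch): P = 2*Q + 1 with P and Q prime,
# and G = 4 generates the subgroup of prime order Q.
P, Q, G = 2039, 1019, 4

def challenge(*values: int) -> int:
    """Fiat-Shamir: hash the public transcript instead of asking a verifier."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret_x: int) -> tuple[int, tuple[int, int]]:
    """Prove knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, secret_x, P)
    r = 1 + secrets.randbelow(Q - 1)   # fresh one-time nonce, never reused
    commitment = pow(G, r, P)
    c = challenge(G, y, commitment)    # challenge derived from the transcript
    s = (r + c * secret_x) % Q
    return y, (commitment, s)

def verify(y: int, proof: tuple[int, int]) -> bool:
    """Recompute the challenge and check G^s == commitment * y^c (mod P)."""
    commitment, s = proof
    c = challenge(G, y, commitment)
    return pow(G, s, P) == (commitment * pow(y, c, P)) % P
```

Anyone holding `y` and the proof can run `verify` at any later time, which is the property that makes this style of proof suitable for on-chain use.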

Quick Comparison

| Dimension | Interactive ZKPs | Non-Interactive ZKPs |
| --- | --- | --- |
| Communication | Multiple challenge and response rounds | Single proof, verify anytime |
| Randomness | Verifier selected | Hash derived via Fiat-Shamir |
| Reuse | Limited | High, proofs are portable |
| Best fit | Live authentication, synchronous protocols | Blockchains, archives, public attestations |

How Code Becomes a Zero-Knowledge Proof

Most modern stacks follow a common choreography.

- Arithmetize the program: Translate logic into algebraic constraints over a finite field.

- Commit to private values: The prover binds hidden inputs and intermediate results using commitments.

- Prove constraint satisfaction: The prover generates a compact object that convinces the verifier that all constraints hold for the committed values.

- Verify without re-execution: The verifier checks a small set of algebraic relations instead of repeating the entire computation.

Two dominant styles appear in practice:

- R1CS: Rank 1 Constraint Systems represent relations as simple multiplicative equations.

- Plonkish systems: Use polynomial identities over evaluation domains, which allows flexible custom gates and efficient batching.
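As an illustration of the R1CS style, the sketch below checks a witness for the statement "I know x such that x^3 + x + 5 = 35". Each constraint has the form (A·w) * (B·w) = (C·w) over a prime field. The field modulus, the variable ordering, and the helper names are assumptions made for this example, not the layout of any particular toolchain.

```python
FIELD = 2**61 - 1  # toy prime modulus; real stacks use a curve-specific field

# Witness layout: w = [1, x, out, sym1, y, sym2]
#   sym1 = x * x,  y = sym1 * x,  sym2 = y + x,  out = sym2 + 5
A = [[0, 1, 0, 0, 0, 0],
     [0, 0, 0, 1, 0, 0],
     [0, 1, 0, 0, 1, 0],
     [5, 0, 0, 0, 0, 1]]
B = [[0, 1, 0, 0, 0, 0],
     [0, 1, 0, 0, 0, 0],
     [1, 0, 0, 0, 0, 0],
     [1, 0, 0, 0, 0, 0]]
C = [[0, 0, 0, 1, 0, 0],
     [0, 0, 0, 0, 1, 0],
     [0, 0, 0, 0, 0, 1],
     [0, 0, 1, 0, 0, 0]]

def dot(row, witness):
    """Inner product of one constraint row with the witness, in the field."""
    return sum(a * w for a, w in zip(row, witness)) % FIELD

def r1cs_satisfied(A, B, C, witness):
    """True when every row satisfies (A_i . w) * (B_i . w) == (C_i . w)."""
    return all(
        (dot(a, witness) * dot(b, witness)) % FIELD == dot(c, witness)
        for a, b, c in zip(A, B, C)
    )

good_witness = [1, 3, 35, 9, 27, 30]   # x = 3
bad_witness = [1, 3, 36, 9, 27, 30]    # claims a wrong output
```

A proof system then convinces the verifier that such a witness exists without revealing the private entries of `w`.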

A useful mental model: proving is heavy, verification is light. Design your architecture around that asymmetry.

ZK-SNARKs vs ZK-STARKs

When you implement zero-knowledge proofs, you quickly run into the choice between SNARKs and STARKs. The trade-offs influence circuit design, on-chain costs, and long-term security posture.

Head to Head

| Feature | zk-SNARKs | zk-STARKs |
| --- | --- | --- |
| Proof size | Tiny, around 200 to 300 bytes | Large, around 100 to 200 KB |
| Verification time | Very fast, roughly 5 to 10 ms | Fast, roughly 20 to 50 ms |
| Prover time | Dominated by elliptic-curve operations | Dominated by hashing, often faster at scale |
| Trusted setup | Required in many schemes | Not required |
| Post-quantum security | No | Yes, considered resistant |
| Transparency | Lower | Higher |
| On-chain gas | Lower due to small proofs | Higher due to larger proofs |
| Best for | General computation in production with low gas | Transparency-first designs with future-proofing goals |

ZK-SNARKs: These are succinct non-interactive arguments of knowledge that popularized production ZK. The main win is tiny proofs and low verification cost, which is ideal for on-chain validation. The main drawback is the trusted setup. Many modern systems mitigate this with large, public multi-party ceremonies and with universal setups in newer constructions.

ZK-STARKs: These are scalable transparent arguments of knowledge that avoid a trusted setup. They use hash-based commitments and information-theoretic techniques and are widely considered more comfortable for a post-quantum world. The proof sizes are much larger, which makes gas and storage more expensive on chains like Ethereum, although data availability and off-chain verification can soften that cost.

Beyond SNARKs and STARKs

The proof landscape includes specialized systems that fit particular needs.

Bulletproofs and PLONK at a Glance

| Proof system | Setup | Proof size | Verification | Best use case |
| --- | --- | --- | --- | --- |
| Groth16 | Trusted, circuit specific | Around 200 bytes | 1 to 2 ms | High performance SNARKs in production |
| PLONK | Universal trusted | Around 400 bytes | 5 to 10 ms | Flexible development, reusable setup |
| Bulletproofs | None | Around 1 to 2 KB | 50 to 100 ms | Range proofs and confidential amounts |
| STARKs | None | Around 100 to 200 KB | 20 to 50 ms | Transparent systems at scale |
| Halo2 | None or universal style depending on stack | Around 1 to 5 KB | 10 to 30 ms | Recursion and proof aggregation friendly designs |

Bulletproofs: Excellent for range proofs, for example proving a value is non-negative and below some bound without revealing it. Widely used in privacy-focused payment systems. Verification time grows linearly with circuit size, which limits very large or complex computations.

PLONK: Uses a universal and updateable setup that can be reused across circuits, which simplifies long-term maintenance. Custom gates allow tuning circuits for high-impact operations. Many modern stacks are Plonkish, including Halo2-based approaches that favor recursion.

Halo2: Focuses on flexible gadgets and efficient recursion. Recursion lets you aggregate many inner proofs into a single outer proof, which reduces on-chain verification cost.

Real World Use Cases

zk-Rollups for Scaling

A zk-rollup executes transactions off-chain, then posts a succinct proof to the base chain that all rules were enforced. The base chain verifies the proof, not the full batch. This converts thousands of operations into a single succinct check whose cost is largely independent of batch size, which improves throughput and reduces fees. Projects like zkSync, StarkNet, and Polygon zkEVM use this approach. Compared to optimistic rollups, zk-rollups do not need a long challenge window, so withdrawals can finalize much faster.

Privacy Preserving Payments and Trading

You can prove that an account has sufficient funds and that total balances remain consistent without revealing amounts or counterparties. You can run sealed bid auctions that reveal the winner while hiding losing bids. You can validate matching and settlement rules with an audit trail that reveals correctness but not strategy.

Identity and Selective Disclosure

Prove that someone is over 18, is a resident of a required region, or holds a specific credential without shipping raw documents. The verifier learns only the minimal fact required for the decision. This reduces attack surface and compliance burden.

Compliance and Audit

Financial institutions can publish cryptographic proofs of reserves, solvency, or policy adherence without disclosing customer level data. Regulators verify the claims and gain confidence without handling sensitive records.

ZK in APIs and Federation

Gate access based on zero-knowledge claims such as subscription status, rate limit tier, or role membership, while keeping private attributes local to the origin system.

ZKML on the Horizon

Zero-knowledge machine learning aims to prove that a model produced a particular output for a hidden input, while hiding both the model parameters and the input. This enables private inference in sensitive domains like healthcare and credit risk. Tooling is early, but the direction is clear.

Tooling You Can Use Today

Circom and SnarkJS

- What it is: Circom is a circuit language, SnarkJS is a toolkit for compiling circuits, generating keys, creating proofs, and verifying proofs.

- Why developers use it: Documentation is solid, the community is large, and it generates Solidity verifiers for Ethereum with minimal friction.

- Workflow: Write the circuit, compile to R1CS and WASM, generate proving and verifying keys, create proofs, verify locally, and deploy an on-chain verifier when needed.

Pattern to prefer: Keep secrets on the client. Use a WASM prover in the browser or mobile app, then send only the proof and public inputs to your server or contract.

ZoKrates

A high level toolkit tailored for the Ethereum ecosystem. It provides a standard library for hashing, Merkle operations, and signature checks, plus a familiar deployment flow where you generate proofs off-chain and verify them via a smart contract.

Noir

A modern language that feels similar to Rust in style. Noir targets Plonkish back ends, so you can rely on a universal setup and iterate quickly. Compilation is fast and error messages are friendlier than older stacks.

Halo2

A flexible framework for gadget composition and recursion. If you plan to aggregate many proofs or build layered proof systems, Halo2 is a strong choice.

Cairo and StarkNet

If you want transparent proof systems and a STARK native path, Cairo and StarkNet are designed for that model.

Design Patterns and Reference Architectures

Client Side Proving, Server Side Verification

Users generate proofs locally, which keeps secrets on device. The server or contract verifies and authorizes. This is ideal for identity checks and entitlement proofs.

Off Chain Compute, On Chain Verify

Do the expensive work off-chain, then submit a succinct proof that the result follows the rules. This is the rollup pattern and also applies to oracle attestations and cross domain state updates.

Batched Proofs

Aggregate many checks into one proof to amortize costs. Useful for bulk validations, large queues, and periodic attestations.
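A back-of-the-envelope model shows why batching pays off. The sketch assumes a fixed verification cost per proof plus a per-item data cost. Both the model and the gas figures are hypothetical and only illustrate the shape of the curve.

```python
def gas_per_item(verify_gas: int, data_gas_per_item: int, batch_size: int) -> float:
    """Fixed verification cost is shared by the batch, per-item data cost is not."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return verify_gas / batch_size + data_gas_per_item

# Hypothetical figures for illustration only.
VERIFY_GAS = 300_000
DATA_GAS_PER_ITEM = 500
costs = {n: gas_per_item(VERIFY_GAS, DATA_GAS_PER_ITEM, n) for n in (1, 10, 1000)}
```

Under these assumptions the per-item cost approaches the data cost as the batch grows, so beyond a certain size the remaining savings come from shrinking per-item data rather than from larger batches.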

Recursion

Aggregate many inner proofs into a single outer proof that the chain verifies once. This keeps verification costs bounded.

Hashes Inside Circuits

Circuit friendly hashes like Poseidon or MiMC reduce constraints compared to Keccak or SHA inside the circuit. Where compatibility is mandatory, bridge at the boundary, not everywhere.

Curves and Precompiles

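- BN254: Cheap verification on Ethereum due to precompiles, lower security margin.

- BLS12-381: Higher security margin, historically more expensive to verify on Ethereum. Check current precompile support on your target chain.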

Pick based on your chain and cost model, then test with the exact verifier you will deploy.

Guided Example: Age Verification Without Revealing Birth Date

Goal: Prove that a user is at least 21 without revealing the date of birth.

Inputs:

- currentDate as public input

- ageThreshold as public input set to 21

- birthdate as private input

Circuit sketch:

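- Convert dates to comparable integers, with the threshold scaled to the same unit

- Compute age = currentDate minus birthdate

- Constrain age >= ageThreshold using a range check, since finite-field arithmetic has no native ordering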

- If the constraint holds, a proof can be generated, otherwise it fails
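The circuit sketch above can be simulated in plain Python to see which relations a real circuit would enforce. This is a sketch under several assumptions: dates are encoded as YYYYMMDD integers so that a gap of 10,000 equals one full year, the field modulus is a toy prime, and the names are hypothetical. It also does not bind the birthdate to an issuer-signed credential, which a production design would need.

```python
FIELD = 2**61 - 1   # toy prime field, an assumption for this sketch
N_BITS = 32         # wide enough for any YYYYMMDD difference

def to_bits(value: int, n_bits: int) -> list[int]:
    """Witness helper: the low n_bits of value, least significant bit first."""
    return [(value >> i) & 1 for i in range(n_bits)]

def age_constraints_hold(current_date: int, age_threshold: int, birthdate: int) -> bool:
    """Check the relations a circuit would enforce for 'age >= threshold'."""
    # The difference lives in the field, so an underage input wraps around
    # to a huge value instead of becoming negative.
    diff = (current_date - birthdate - age_threshold * 10_000) % FIELD
    bits = to_bits(diff, N_BITS)
    # Constraint 1: every bit is boolean, b * (b - 1) == 0.
    bits_boolean = all((b * (b - 1)) % FIELD == 0 for b in bits)
    # Constraint 2: the bits recompose to diff, forcing 0 <= diff < 2**N_BITS.
    recomposed = sum(b << i for i, b in enumerate(bits)) % FIELD
    return bits_boolean and recomposed == diff

on_birthday = age_constraints_hold(20250315, 21, 20040315)   # turns 21 today
day_before = age_constraints_hold(20250315, 21, 20040316)    # turns 21 tomorrow
```

The bit decomposition is what turns a field subtraction into an actual comparison. Without it, the wrapped-around value for an underage user would still be a valid field element and the check would pass.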

Developer flow:

- Author the circuit with explicit assertions.

- Compile and generate proving and verifying keys.

- In the client, compute the witness and generate the proof.

- Send the proof and public inputs to a verifier endpoint or contract.

- Verify, then issue an authorization decision.

UX notes:

- Proving may take a few seconds on mid-range phones, so show progress.

- Verification is fast, so the decision step feels instant.

- Cache proving keys and parameters to avoid repeated setup costs.

Security checks:

- Negative tests that try underage values and boundary dates

- Integration tests against the exact on-chain verifier

- Document your hash and curve choices for auditors

Performance Considerations

- Proving vs verification: Proving is heavy and parallelizable, verification is light and, for succinct schemes, close to constant. Optimize for verification cost in on-chain flows.

- Make circuits lean: Choose gadgets that minimize constraints. Prefer circuit friendly hashes when possible. Reuse arithmetic building blocks that you have profiled.

- Batch and recurse: Aggregate many checks or inner proofs to reduce total verification cost.

- Prover hardware: GPU support can cut proving time substantially. Specialized proving hardware is emerging and can improve throughput.

- On-chain costs: Store proofs efficiently. Consider calldata compression or off-chain storage with on-chain commitments if your design allows it.

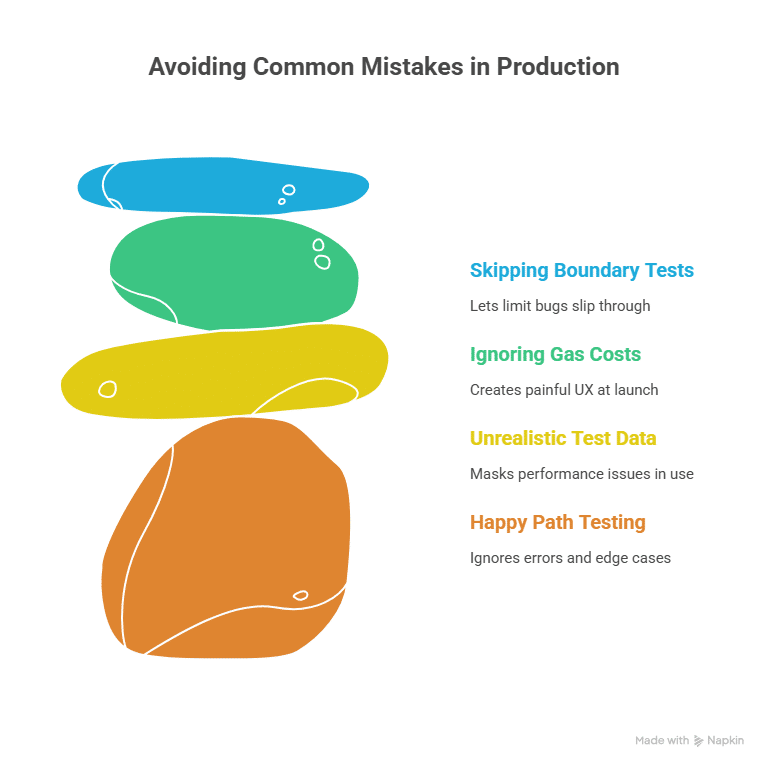

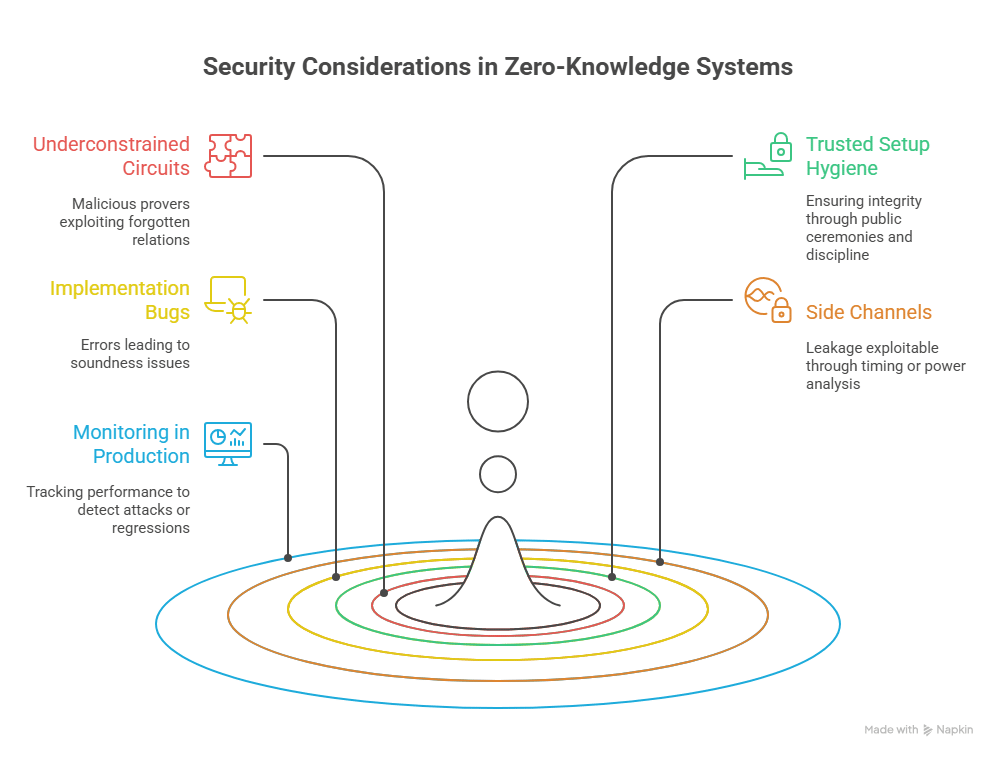

Security Considerations and Common Pitfalls

- Underconstrained circuits: The most common failure. If you forget a relation, a malicious prover may craft a witness that slips through. Use unit tests, property-based tests, and adversarial inputs to catch gaps.

- Trusted setup hygiene: If your system requires a setup, treat it like critical infrastructure. Favor public multi-party ceremonies, publish transcripts, and ensure strong operational discipline.

- Implementation bugs: Off-by-one errors, incorrect indexing, and boundary mistakes can break soundness. Test thoroughly and consider formal checks for critical gadgets.

- Side channels: Constant time implementations and careful memory access patterns reduce leakage that timing or power analysis could exploit.

- Monitoring in production: Track proving time, memory use, verification failures, and gas usage. Spikes can indicate attacks or regressions.
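To make the underconstrained circuit pitfall concrete, consider a selector gadget that should output `a` when `sel` is 1 and `b` when `sel` is 0. The sketch below models the constraints as Python predicates over a toy field. The gadget and function names are hypothetical.

```python
FIELD = 2**61 - 1  # toy prime field, an assumption for this sketch

def mux_underconstrained(sel: int, a: int, b: int, out: int) -> bool:
    """Only checks the selection equation and forgets that sel must be a bit."""
    return out % FIELD == (sel * a + (1 - sel) * b) % FIELD

def mux_fixed(sel: int, a: int, b: int, out: int) -> bool:
    """Adds the missing booleanity constraint sel * (sel - 1) == 0."""
    is_bit = (sel * (sel - 1)) % FIELD == 0
    return is_bit and mux_underconstrained(sel, a, b, out)

a, b = 10, 3
honest = (1, a, b, a)            # sel = 1 selects a
forged = (2, a, b, 2 * a - b)    # sel = 2 yields 17, which is neither input
```

The forged witness satisfies the underconstrained version, so a prover could claim an output that was never one of the inputs. A negative test that feeds in exactly this kind of witness is how such gaps get caught before an audit.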

When ZK Is Not the Right Tool

If you only need password authentication, salted password hashing is simpler. For encryption at rest, rely on proven ciphers and key management, not a proof system. If you require microsecond response times, proving cost may be too high.

Alternatives Matrix

| Technology | Best for | Weak at |
| --- | --- | --- |
| Zero-knowledge proofs | Proving facts without revealing data | Ultra-low-latency real-time loops |
| Homomorphic encryption | Computation on encrypted data | Simple yes or no checks |
| Secure enclaves | Trusted execution on specific hardware | Decentralized trust models |
| MPC | Joint computation across parties | Single party attestations |

Pick based on threat model, latency budget, trust assumptions, and operational overhead.

The Road Ahead

- Hardware acceleration: Dedicated proving chips and accelerated GPU stacks are moving from lab to production. Expect 10 to 100 times speedups for some circuits.

- Proof aggregation and recursion: Better aggregation will allow millions of operations to collapse into a small number of verifications.

- Standards and interoperability: Shared proof formats and verification interfaces will reduce vendor lock-in and allow teams to mix toolchains.

- Developer experience: Expect better debuggers, circuit profilers, and IDE support that make constraint authoring and failure analysis more intuitive.

Final Words

Zero-knowledge proofs let developers validate truths without revealing secrets. They scale verification for heavy computation, protect personal data by design, and allow compliance without disclosing sensitive records. On-chain, they compress thousands of operations into one verification. Off-chain, they enable portable attestations that anyone can check.

The choice between SNARKs and STARKs depends on costs, setup, and long-term assumptions. SNARKs deliver tiny proofs and very low gas at the price of a setup. STARKs deliver transparency and comfort for a post-quantum world at the price of larger proofs. Systems like PLONK and Halo2 offer a practical middle ground, with universal setups and strong support for recursion.

Your starting point is straightforward. Pick a small use case such as age verification or membership proofs, build a circuit, generate a proof on the client, verify on a server or contract, then iterate. As your needs grow, adopt batching, recursion, and specialized gadgets. With careful testing and professional audits, ZK features can be shipped safely in production.

Frequently Asked Questions About Zero-Knowledge Proofs for Developers

How difficult is it to learn zero-knowledge proofs without a cryptography background?

Modern tools hide most of the math. If you are comfortable with programming and testing, you can write useful circuits. Production systems still require careful engineering and audits.

What is the practical difference between SNARKs and STARKs?

SNARKs have tiny proofs and very fast verification, yet often need a trusted setup. STARKs avoid setup and are considered post-quantum friendly, yet their proofs are large and cost more on-chain.

Do zk-rollups scale every application?

They scale workloads that benefit from heavy off-chain compute and cheap on-chain verification. Simple flows may not gain enough to justify proving cost.

How do zk-rollups differ from optimistic rollups?

Both move execution off-chain. zk-rollups post validity proofs, so a batch is final as soon as the base chain verifies its proof. Optimistic rollups assume validity and allow challenges for a set window, which delays withdrawals.

What is a trusted setup ceremony in practice?

Multiple participants contribute randomness and publish transcripts. If at least one deletes their secret contribution, security holds. Universal setups reduce repeated ceremonies across circuits.

Are there use cases beyond payments and scaling?

Yes. Identity and credentials, voting, supply chain attestations, private gaming logic, compliance and solvency proofs, and early stage ZKML for private inference.

How much gas does proof verification cost on Ethereum?

It varies by scheme. Groth16 verification often lands in the range of a few hundred thousand gas, which is modest compared to the computation it replaces.

Glossary

- Circuit: A mathematical representation of a computation with inputs, outputs, and constraints.

- Completeness: Honest proofs for true statements will be accepted by the verifier.

- Fiat-Shamir heuristic: Converts interactive protocols to non-interactive ones using a hash-derived challenge.

- Proof generation: The process of creating a proof from a circuit and a witness, typically heavy.

- Soundness: A dishonest prover cannot convince the verifier of a false statement except with negligible probability.

- Trusted setup ceremony: A process to generate public parameters, which requires at least one honest participant.

- Witness: The private inputs and intermediate values known to the prover.

- zk-rollup: A Layer 2 approach that executes off-chain and posts validity proofs on-chain.

- R1CS: A constraint system used by many SNARK stacks.

- Plonkish: A family of polynomial identity-based proof systems with flexible gates.
