Microsoft is Refreshing Secure Boot Certificates on Millions of Windows PCs

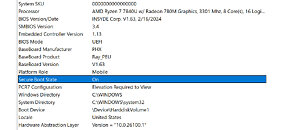

On a Windows PC, the Unified Extensible Firmware Interface (UEFI) firmware stores Secure Boot Certificates, which ensure that only verified software runs during the boot sequence. Microsoft is preparing to refresh these certificates: the company announced that millions of Windows PCs in circulation will receive new Secure Boot Certificates in a gradual, industry-wide rollout that replaces aging certificates nearing expiration. According to the latest Windows Blog post, the original Secure Boot Certificates, introduced in 2011, are reaching the end of their planned lifecycle, with expiration set for late June 2026. Replacing them requires a large staged rollout from Microsoft, OEMs, and other partners to ensure that all Windows devices stay secure.

According to Microsoft, this is one of the largest industry collaborations across the Windows ecosystem, spanning servicing, firmware updates, and countless device configurations from OEMs and other hardware makers. Firmware makers are at the center of the effort, since their UEFI BIOS updates will have to replace the aging Secure Boot Certificates. The blog also states that OEMs have been provisioning updated certificates on new devices, with some devices from 2024 and almost all PCs from 2025 already updated to support the new certificates. Older PCs and devices shipped before those years will also be covered, with major OEMs providing their own guidance on updating the certificates. If you don't see your OEM offering an update yet, be patient, as the rollout is gradual.
