Analysts Say Bitcoin’s Future Lies in Infrastructure, Not Gold Comparison

For a long time, mainstream investors compared Bitcoin to a precious-metal hedge and called it digital gold. That line helped people grasp scarcity and self-custody, but it did not tell the whole story. A better description is starting to win out in boardrooms and developer chats: Bitcoin is infrastructure. That framing explains how the network actually works in the world. It settles value with finality, anchors transparent collateral, and supports services that keep pace with global markets.

Why the infrastructure lens is more useful than the vault metaphor

Infrastructure is the quiet backbone of daily life. People rarely talk about power lines or fiber cables until something breaks. Value networks should be judged the same way: by how reliably they work, not by how often they make news. When analysts say Bitcoin is infrastructure, they are arguing that the asset and the ledger behave like productive capital.

Coins can be pledged as collateral, routed across borders, or priced into working-capital cycles. The older label, digital gold, still matters as a store-of-value narrative. It simply covers one slice of the picture, not the whole panorama.

From passive store to productive capital

The base layer confirms transactions on a steady schedule that does not chase headlines. Each additional block built on top of a transaction reduces settlement risk. Beyond the base layer, secure custody and policy controls create the operating environment enterprises expect. In that environment, lenders can mark risks, venues can clear trades, and treasurers can plan cash cycles.

The practical outcome is simple: the phrase "Bitcoin is infrastructure" captures how teams deploy the asset, not only how they hold it. The phrase "digital gold" explains scarcity and durability, but it does not brief a finance lead on margin rules, event playbooks, or audits.
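The claim that each additional block reduces settlement risk can be made concrete with the attacker catch-up calculation in section 11 of the Bitcoin whitepaper. The sketch below is a minimal Python version of that formula, assuming the whitepaper's simplified model: an attacker with a fixed share `q` of total hash power tries to replace a payment that is already `z` blocks deep. It ignores network latency and attack economics, so the numbers are illustrative rather than a risk policy, and the function name is our own.

```python
import math


def attacker_success_probability(q: float, z: int) -> float:
    """Probability that an attacker with hash-power share q ever overtakes an
    honest chain that is z blocks ahead (whitepaper section 11 model)."""
    p = 1.0 - q  # honest share of hash power
    if q >= p:
        return 1.0  # a majority attacker eventually catches up
    lam = z * q / p  # expected attacker progress while honest miners find z blocks
    total = 0.0
    for k in range(z + 1):
        # Poisson probability that the attacker has found exactly k blocks...
        poisson = math.exp(-lam) * lam**k / math.factorial(k)
        # ...times the probability that they never close the remaining gap.
        total += poisson * (1.0 - (q / p) ** (z - k))
    return 1.0 - total


if __name__ == "__main__":
    for z in range(7):
        print(z, f"{attacker_success_probability(0.1, z):.7f}")
```

With `q = 0.1`, the probability falls from 1.0 at zero confirmations to roughly 0.20 after one confirmation and well under 0.1% after six, which is the intuition behind waiting for several confirmations on large payments.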

Indicators that separate hope from use

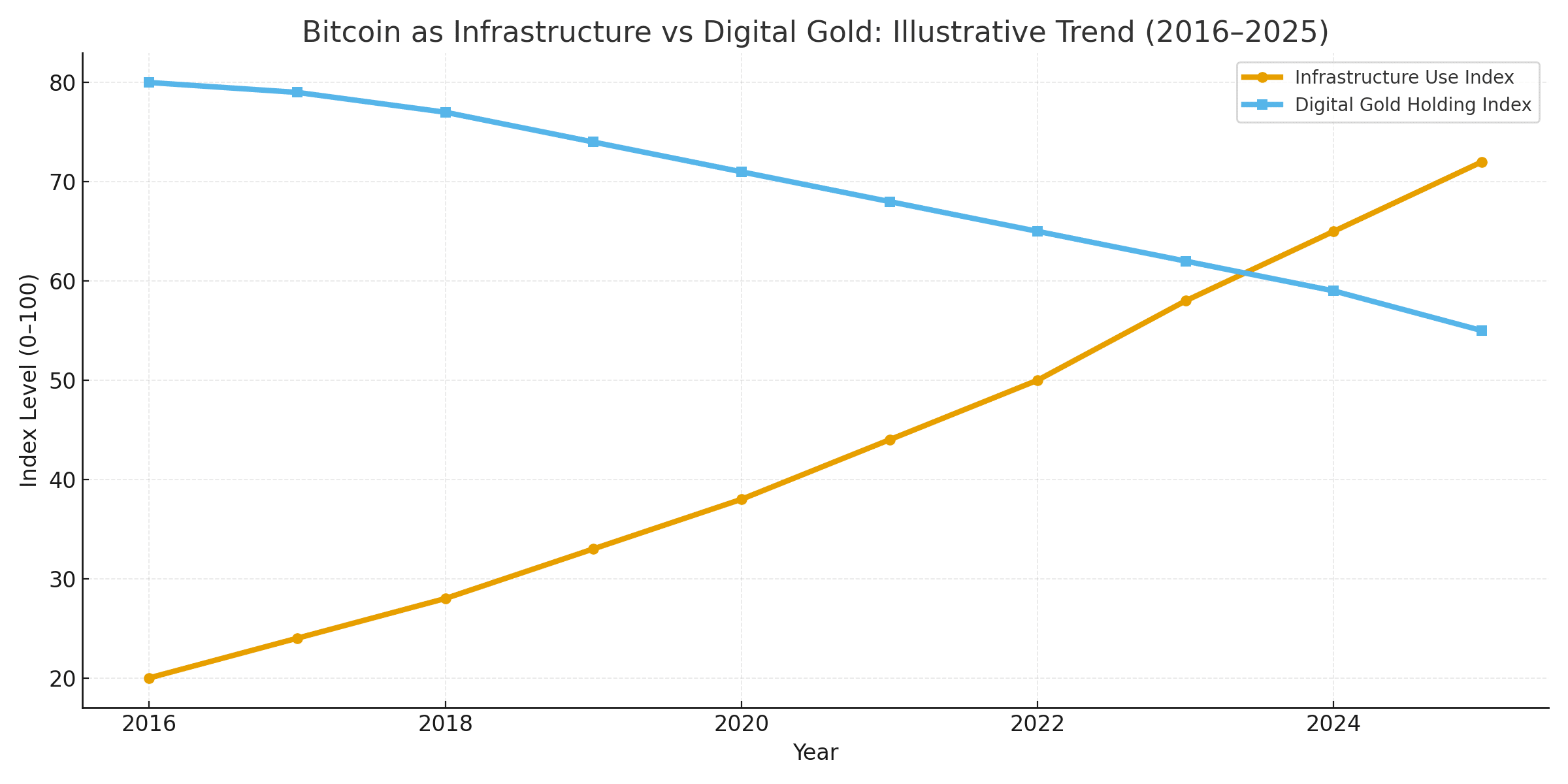

Good decisions follow good data. On-chain velocity shows whether value sits or moves. A rising share of long-term holders supports the store-of-value thesis. A rising count of active addresses and rising settlement value signal use at scale. Liquidity depth and realized volatility show how much size a market can absorb before price slides. Basis and funding rates show ease or stress in derivatives markets.

In healthy markets, basis is narrow and funding is stable. In stressed moments, gaps widen and risk managers step in. When the numbers point to increasing throughput and predictable operations, the label "Bitcoin is infrastructure" sounds less like marketing and more like measurement. The label "digital gold" points to patience and caution, which are valuable but incomplete.
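Realized volatility, one of the indicators above, is straightforward to compute from closing prices. The sketch below assumes daily closes and annualizes with 365 periods because Bitcoin trades every day; both choices are assumptions, and vendors differ on windows and conventions (traditional-market figures often use 252 trading days). The function name and sample prices are hypothetical.

```python
import math
import statistics


def realized_volatility(closes: list[float], periods_per_year: int = 365) -> float:
    """Annualized realized volatility from a series of closing prices.

    Takes the sample standard deviation of log returns and scales it by the
    square root of the number of periods per year (365 by default, assuming
    daily closes on a market that trades every day).
    """
    if len(closes) < 3:
        raise ValueError("need at least three closes to get two returns")
    log_returns = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    return statistics.stdev(log_returns) * math.sqrt(periods_per_year)


# Hypothetical daily closes, for illustration only.
closes = [60_000, 61_200, 59_800, 60_500, 62_000]
print(f"{realized_volatility(closes):.1%}")
```

Log returns are used so that returns over consecutive days add up, which keeps the scaling by the square root of time consistent.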

Payments, remittances, and the parts most users never see

Most users will never open a block explorer; they care about two taps and a clear receipt. Cross-border remittances, e-commerce checkout, and machine payments all benefit from neutral finality. Service providers can settle on-chain when that reduces friction, or off-chain when that lowers cost without hiding risk.
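The on-chain versus off-chain choice described above is, at its simplest, a cost comparison. The sketch below assumes on-chain fees are charged per virtual byte of transaction size (Bitcoin fee rates are usually quoted in sat/vB) and models the off-chain side as a hypothetical base fee plus a proportional rate in parts per million, similar in shape to how Lightning channels advertise routing fees. Transaction size, fee rates, and policy values are illustrative assumptions, and the sketch deliberately leaves out the risk and liquidity questions the paragraph raises.

```python
from dataclasses import dataclass


@dataclass
class OffChainFeePolicy:
    """Hypothetical off-chain fee schedule: a flat base fee plus a proportional rate."""
    base_fee_sat: float
    rate_ppm: int  # parts per million of the payment amount


def on_chain_fee_sat(vsize_vbytes: int, feerate_sat_per_vb: float) -> float:
    # On-chain fees scale with transaction size, not with the amount sent.
    return vsize_vbytes * feerate_sat_per_vb


def off_chain_fee_sat(amount_sat: int, policy: OffChainFeePolicy) -> float:
    # In this model, off-chain fees scale with the amount sent.
    return policy.base_fee_sat + amount_sat * policy.rate_ppm / 1_000_000


def cheaper_route(amount_sat: int, vsize_vbytes: int,
                  feerate_sat_per_vb: float, policy: OffChainFeePolicy) -> str:
    """Return whichever route is cheaper under the assumed fee models."""
    on = on_chain_fee_sat(vsize_vbytes, feerate_sat_per_vb)
    off = off_chain_fee_sat(amount_sat, policy)
    return "off-chain" if off < on else "on-chain"


# Hypothetical inputs: an assumed 140 vB transaction at 10 sat/vB.
policy = OffChainFeePolicy(base_fee_sat=1, rate_ppm=500)
print(cheaper_route(50_000, 140, 10, policy))       # off-chain
print(cheaper_route(100_000_000, 140, 10, policy))  # on-chain
```

Because the on-chain fee depends on transaction size while the proportional off-chain fee grows with the amount, small payments favor the off-chain route under these assumed numbers and very large ones can favor on-chain settlement.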

With the right controls, a payment company can quote steady prices and keep its promises during busy hours. These are classic infrastructure wins. To call the network only digital gold is to ignore the daily work of routing value. When that work becomes normal, people begin to understand why Bitcoin is infrastructure for real-world flows.

Collateral, yield, and the honest sources of return

In the infrastructure frame, returns come from visible services. Lending desks earn a spread for placing capital with risk controls. Market makers earn fees for quoting two-sided markets. Settlement providers earn fees for reliable processing.

None of these flows requires smoke and mirrors. They require process discipline, asset-liability matching, and clean reporting. The message in "Bitcoin is infrastructure" is that sound operations beat clever slogans. The limit of "digital gold" is that saving alone cannot explain an economy built on programmable value.

Governance, compliance, and the adult questions

Serious capital does not move without answers. Who holds the keys? What policies define access? Which audits confirm that a process works as described? Mature custody uses role-based permissions, hardware-backed isolation, and recovery plans that are tested, not imagined. Clear licensing and disclosure rules channel innovation into products that enterprises can actually use.

With those pillars in place, the claim that Bitcoin is infrastructure reads as common sense. The older comfort phrase, digital gold, keeps its place for savers but does not guide an operations team through policy and oversight. Institutions lean toward systems that run every hour of the week.

Education that respects both stories

Education is changing. Instead of telling newcomers to buy and wait, educators now explain how settlement finality reduces counterparty risk, how collateral makes lending safer, and how risk is priced across time.

This does not toss the store-of-value story in the trash. It folds it into a broader playbook. The network can serve the saver and the operator at once. That dual use is the strongest evidence that "Bitcoin is infrastructure" is a practical description. The phrase "digital gold" helps a person start; the infrastructure frame then shows how teams keep going.

Reading the road ahead without drama

Cycles will continue. Prices will swing. Critics will point to every drop and call the model broken. The better habit is to grade the network on uptime, cost to settle, and resilience during stress. Those metrics tell the truth over time.

As more services plug into neutral rails, interoperability improves and the end user sees faster confirmations and fewer surprises. Step by step, enterprises begin to treat the system like any other critical dependency. At that point, repeating that Bitcoin is infrastructure sounds less like a slogan and more like a weather report.

Conclusion

The early metaphor served the market well. It gave people a simple way to think about scarcity and self-custody. The market is larger now and the demands are tougher. Savings still matter, and the phrase "digital gold" will not disappear. It will sit beside a broader reality. The asset stores value, and it moves value with intent at a global scale. In that full picture, "Bitcoin is infrastructure" is the plain description that fits.

Frequently Asked Questions (FAQ)

What does it mean to say Bitcoin is infrastructure?

It means the asset and the ledger function together as productive capital. The system stores value and also settles payments, anchors collateral, and supports yield with transparent rules.

How is this different from calling Bitcoin digital gold?

The store frame points to scarcity and patience. The infrastructure frame points to throughput, reliability, and cash cycle planning. Both can be true at once, but the second gives operators a clearer map.

Which indicators help validate the infrastructure view?

Settlement value, active addresses, liquidity depth, basis, and funding paint a picture of actual use. Collateralized lending and custody adoption add further proof.

Is the infrastructure view only for institutions?

No. Faster confirmations, clear fees, and neutral rails help retail users, merchants, and developers. Better plumbing improves the whole street.

What risks remain?

Market volatility, custody mistakes, and unclear rules remain the big three. The answer is risk limits, tested recovery plans, and steady education.

Glossary of Key Terms

Collateralization

The practice of pledging an asset to secure a loan. If the borrower fails to repay, the lender can claim the collateral according to agreed rules.
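Collateral rules are usually expressed as a loan-to-value (LTV) ratio. The sketch below assumes a single loan backed by one collateral asset and a hypothetical liquidation threshold; the function names and figures are illustrative, and real desks add interest accrual, margin-call bands, and price feeds that are not modeled here.

```python
def loan_to_value(loan_amount: float, collateral_units: float, collateral_price: float) -> float:
    """Loan value divided by the current market value of the pledged collateral."""
    collateral_value = collateral_units * collateral_price
    if collateral_value <= 0:
        raise ValueError("collateral value must be positive")
    return loan_amount / collateral_value


def liquidation_price(loan_amount: float, collateral_units: float, liquidation_ltv: float) -> float:
    """Collateral price at which LTV reaches the (hypothetical) liquidation threshold.

    Solves loan / (units * price) = threshold for price.
    """
    if collateral_units <= 0 or not 0 < liquidation_ltv <= 1:
        raise ValueError("need positive collateral and a threshold in (0, 1]")
    return loan_amount / (collateral_units * liquidation_ltv)


def can_liquidate(loan_amount: float, collateral_units: float,
                  collateral_price: float, liquidation_ltv: float) -> bool:
    """True once the position reaches or breaches the agreed threshold."""
    return loan_to_value(loan_amount, collateral_units, collateral_price) >= liquidation_ltv


# Hypothetical loan: 30,000 borrowed against 1 BTC, liquidation at 80% LTV.
print(loan_to_value(30_000, 1.0, 60_000))    # 0.5
print(liquidation_price(30_000, 1.0, 0.80))  # 37500.0
```

Publishing the threshold and the price at which it triggers is what makes the "agreed rules" in the definition checkable by both sides.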

Basis

The difference between spot and futures prices. It signals stress or ease in funding markets and helps traders read risk.
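Sign conventions for basis differ between sources; the sketch below assumes basis is futures minus spot and also expresses it as a simple, non-compounded annualized fraction of spot over a 365-day year. The function names and the sample contract are hypothetical.

```python
def basis(futures_price: float, spot_price: float) -> float:
    """Basis in price terms, assuming the futures-minus-spot convention."""
    return futures_price - spot_price


def annualized_basis(futures_price: float, spot_price: float, days_to_expiry: float) -> float:
    """Basis as a simple annualized fraction of spot (365-day year assumed).

    A positive value means futures trade above spot.
    """
    if spot_price <= 0 or days_to_expiry <= 0:
        raise ValueError("spot price and days to expiry must be positive")
    return (futures_price - spot_price) / spot_price * 365 / days_to_expiry


# Hypothetical quarterly contract: spot 60,000, futures 60,900, 90 days to expiry.
print(basis(60_900, 60_000))                          # 900
print(f"{annualized_basis(60_900, 60_000, 90):.2%}")  # 6.08%
```

Tracking this figure over time is how the narrowing or widening described in the indicators section would show up in practice.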

Funding rate

A periodic payment between long and short positions in perpetual futures that keeps the contract near the spot price.
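A funding payment is typically the position's notional value times the rate for the interval. The sketch below assumes the common convention that a positive rate means longs pay shorts; exchanges set their own intervals (often every eight hours) and their own formulas for the rate itself, so the function name and numbers are illustrative only.

```python
def funding_payment(position_size: float, mark_price: float, funding_rate: float) -> float:
    """Cash flow for one funding interval, from the position holder's side.

    position_size: positive for a long, negative for a short (units of the underlying).
    funding_rate: rate for the interval, e.g. 0.0001 for 0.01%.
    Assumes a positive rate means longs pay shorts.
    Returns a negative number when the holder pays and a positive one when they receive.
    """
    notional = position_size * mark_price
    return -notional * funding_rate


# Hypothetical: a 2 BTC long and a 2 BTC short at a 60,000 mark, funding at +0.01%.
long_flow = funding_payment(2.0, 60_000, 0.0001)
short_flow = funding_payment(-2.0, 60_000, 0.0001)
print(round(long_flow, 2), round(short_flow, 2))  # -12.0 12.0
```

The two flows cancel, which reflects that funding moves money between traders rather than to the venue.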

Custody

The service of holding and safeguarding assets with security controls, permissions, and audits that reduce operational risk.

Liquidity depth

A measure of how much size the market can handle before price moves. Deeper books improve execution and reduce slippage.
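One way to see liquidity depth is to walk a market order through the visible order book and measure how far the average fill drifts from the best price. The sketch below assumes a static list of ask levels and a market buy; the function name and book values are hypothetical, and real execution also faces hidden orders, fees, and a book that changes while the order fills.

```python
def market_buy_fill(asks: list[tuple[float, float]], quantity: float) -> tuple[float, float]:
    """Fill a market buy by walking ask levels from the best (lowest) price upward.

    asks: (price, size) pairs for the visible book.
    Returns (average fill price, slippage versus the best ask as a fraction).
    Raises ValueError if the order is larger than the visible depth.
    """
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    if not asks:
        raise ValueError("empty book")
    levels = sorted(asks)  # lowest ask first
    remaining = quantity
    cost = 0.0
    for price, size in levels:
        take = min(remaining, size)
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 1e-12:  # small tolerance for float rounding
        raise ValueError("order exceeds visible depth")
    best_ask = levels[0][0]
    avg_price = cost / quantity
    return avg_price, (avg_price - best_ask) / best_ask


# Hypothetical ask side: (price, size in BTC).
book = [(60_000.0, 1.0), (60_010.0, 2.0), (60_050.0, 5.0)]
print(market_buy_fill(book, 0.5))  # (60000.0, 0.0)
print(market_buy_fill(book, 2.0))
```

A small order fills entirely at the best ask with no slippage; a larger one reaches deeper levels and pays a higher average price, which is exactly what a thinner book makes worse.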
