Look back at Google Play’s biggest launches for developers in 2025

As 2025 comes to an end, we’re revisiting some of the biggest updates and key developments in Google Play this year. It all reflects our commitment to making Google Play…

Ginny Marvin, Google’s Ads Liaison, is clarifying how keyword match types interact with AI Overviews (AIO) and AI Mode ad placements — addressing ongoing confusion among advertisers testing AI Max and mixed match-type setups.

Why we care. As ads expand into AI-powered placements, advertisers need to understand which keywords are eligible to serve — and when — to avoid unintentionally blocking reach or misreading performance.

Back in May. Responding to questions from Marketing Director Yoav Eitani, Marvin confirmed that an ad can serve either above or below an AI Overview or within the AI Overview — but not both in the same auction:

While both exact and broad match keywords can be eligible to trigger ads above or below AIO, only broad match keywords (or keywordless targeting) are eligible to trigger ads within AI Overviews.

What’s changed. In a follow-up exchange with Paid Search specialist Toan Tran, Marvin clarified that Google has updated how eligibility works. Previously, the presence of an exact match keyword could prevent a broad match keyword from serving in AI Overviews. That is no longer the case.

Since exact and phrase match keywords are not eligible for AI Overview placements, they do not compete with broad match keywords in that auction — meaning broad match can still trigger ads within AIO even when the same keyword exists as exact match.
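The eligibility rules described above can be sketched as a small lookup. This is a hypothetical illustration, not Google Ads API code: the `MatchType` enum and both function names are assumptions made for this example, and it models only the placement within an AI Overview.

```python
from enum import Enum
from typing import Iterable


class MatchType(Enum):
    EXACT = "exact"
    PHRASE = "phrase"
    BROAD = "broad"
    KEYWORDLESS = "keywordless"


# Per the clarification above: only broad match and keywordless targeting
# are eligible to trigger ads within an AI Overview.
ELIGIBLE_WITHIN_AIO = {MatchType.BROAD, MatchType.KEYWORDLESS}


def eligible_within_aio(match_type: MatchType) -> bool:
    """Return True if this match type may trigger an ad inside an AI Overview."""
    return match_type in ELIGIBLE_WITHIN_AIO


def can_serve_within_aio(match_types: Iterable[MatchType]) -> bool:
    """Exact and phrase match are skipped; they do not block broad match."""
    return any(eligible_within_aio(m) for m in match_types)


if __name__ == "__main__":
    # The same keyword held as both exact and broad can still serve within AIO.
    print(can_serve_within_aio([MatchType.EXACT, MatchType.BROAD]))
    # Exact and phrase alone cannot.
    print(can_serve_within_aio([MatchType.EXACT, MatchType.PHRASE]))
```

The second function reflects the updated behavior: ineligible match types are ignored rather than treated as competitors in that auction.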

The big picture. Google is reinforcing a clear separation between traditional keyword matching and AI-powered intent matching. Ads in AI Overviews rely on a deeper understanding of both the user query and the AI-generated content, which is why eligibility is limited to broader targeting signals.

The bottom line. Exact and phrase match keywords won’t show ads in AI Overviews — but they also won’t block broad match from doing so. For advertisers leaning into AI Max and AIO placements, broad match and keywordless strategies are now essential to unlocking reach in Google’s AI-driven surfaces.

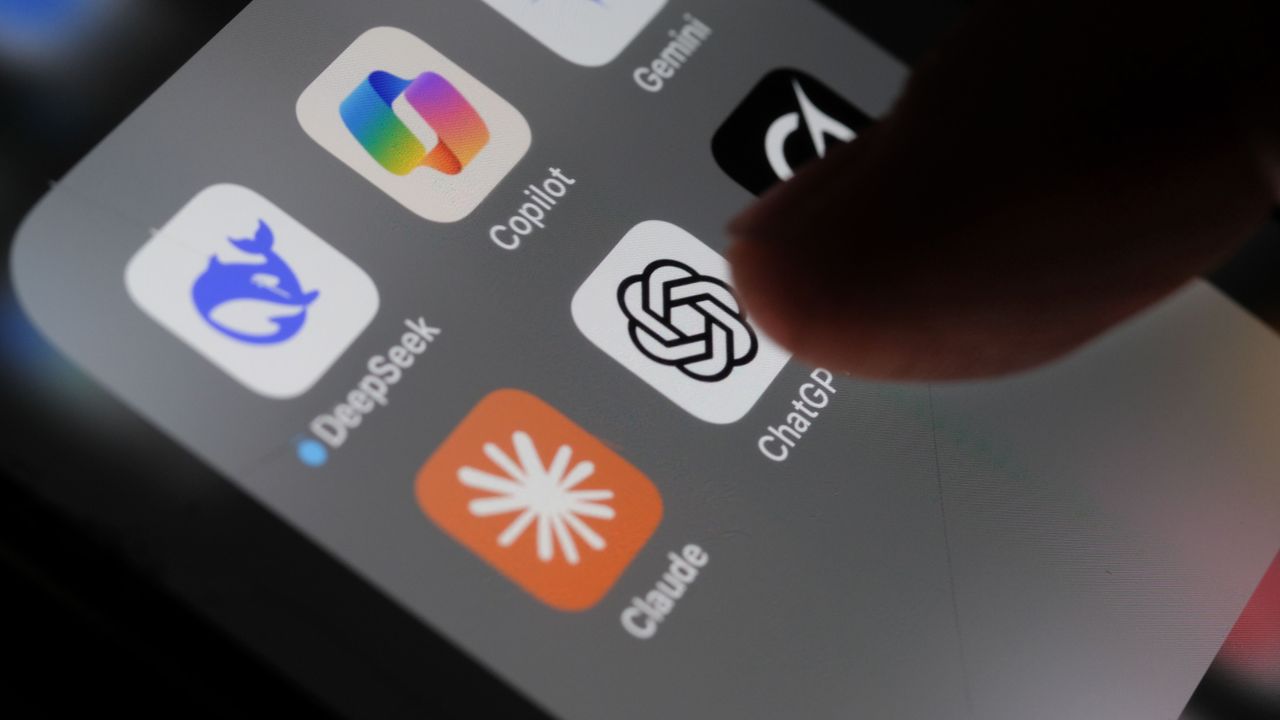

The post ChatGPT Images Unleashes Unprecedented Visual Agility appeared first on StartupHub.ai.

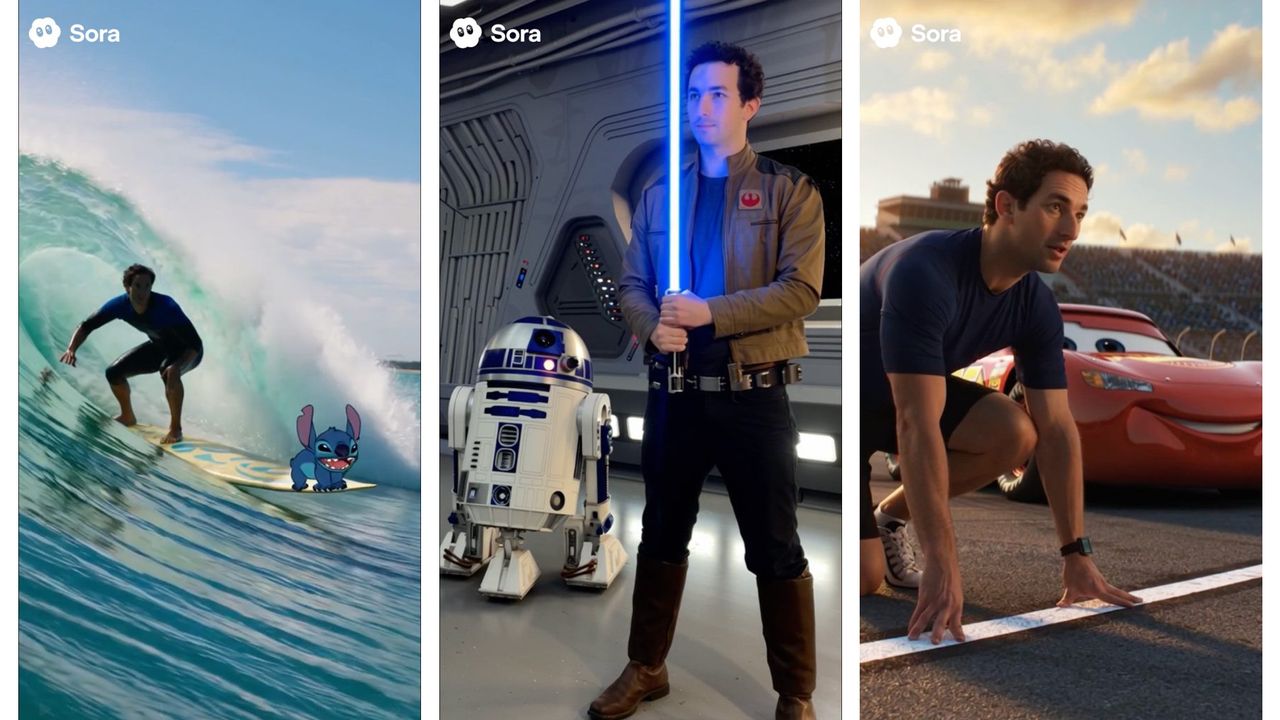

The latest iteration of ChatGPT Images, powered by OpenAI’s new flagship image generation model, GPT Image 1.5, signals a profound shift in the accessibility and flexibility of visual content creation. This release, demonstrated through a dynamic visual showcase, moves beyond simple image generation to offer sophisticated editing and stylistic transformations that were once the exclusive […]

The post The 2026 AI predictions: Why infrastructure will fail, but apps will fly. appeared first on StartupHub.ai.

While Big Tech faces supply chain bottlenecks and AGI timelines push into the 2030s, AI application startups are set to achieve unprecedented scale in 2026.

The post OpenAI’s new ChatGPT Images is 4x faster and more precise: Everything you need to know appeared first on StartupHub.ai.

The new ChatGPT Images, powered by GPT Image 1.5, delivers 4x faster generation speeds and crucial improvements in editing consistency and text rendering.

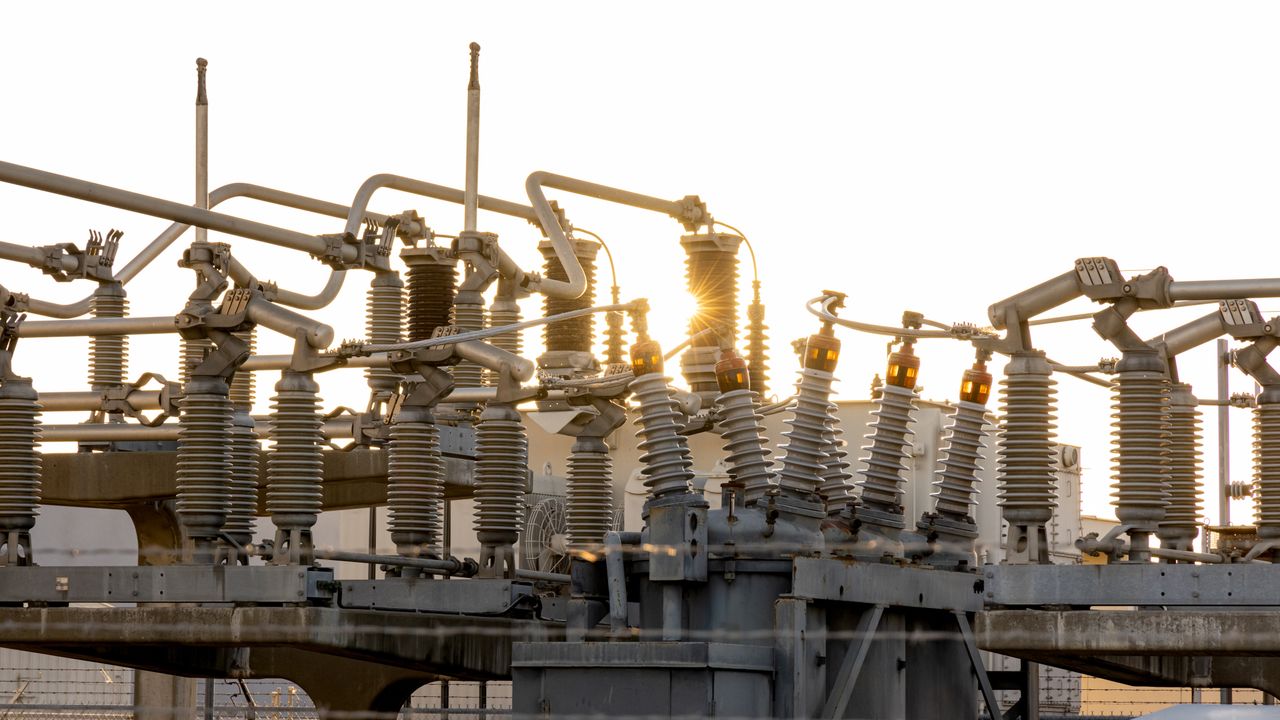

The post AI’s Unseen Cost: Political Pressure Mounts on Data Center Energy Demands appeared first on StartupHub.ai.

The burgeoning computational demands of artificial intelligence are rapidly colliding with public policy and local politics, as highlighted in a recent CNBC “Money Movers” segment. CNBC Business News TechCheck Anchor Deirdre Bosa reported on growing political pressure stemming from the massive energy consumption of AI data centers, revealing a new front of risk for the […]

The post Your Support Team Should Ship Code – Lisa Orr, Zapier appeared first on StartupHub.ai.

Lisa Orr, Product Leader at Zapier, shared a compelling narrative about how her company is leveraging artificial intelligence to transform its support operations, enabling the support team to actively ship code. The core problem was the sheer volume of support tickets generated by API changes, overwhelming traditional support workflows. Zapier’s journey began with a clear […]

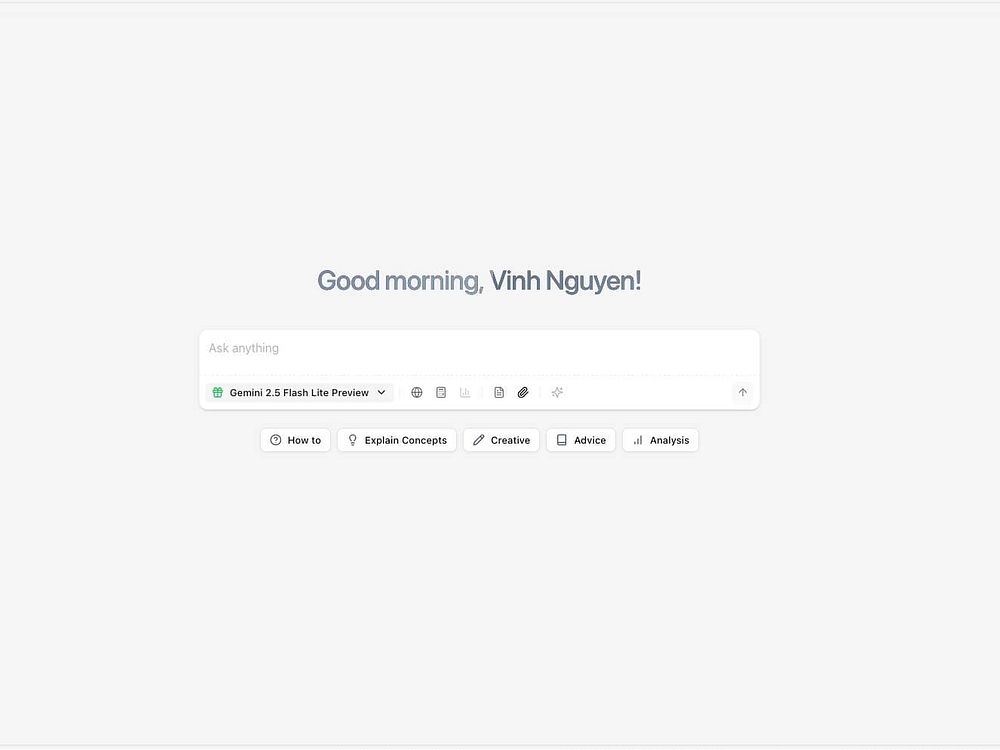

CC is our new experimental AI productivity agent from Google Labs, built with Gemini to help you stay organized and get things done. When you sign up, it connects your G…

Google rapidly expanded AI Overviews in search during 2025, then pulled back as they moved into commercial and navigational queries. These findings are based on a new Semrush analysis of more than 10 million keywords from January to November.

AI Overviews surged, then retreated. Google didn’t roll out AI Overviews in a straight line in 2025. A mid-year spike gave way to a pullback, suggesting Google moved fast to test the feature, then eased off based on user data:

Zero-click behavior defied expectations. Surprisingly, click-through rates for keywords with AI Overviews have steadily risen since January. AI Overviews don’t automatically reduce clicks and may even encourage them.

Informational queries no longer dominate. Early 2025 AI Overviews were almost entirely informational:

Now, AI Overviews are appearing for commercial and transactional queries:

Navigational queries are rising fast. In an unexpected shift, AI summaries are increasingly intercepting brand and destination searches:

Google Ads + AI Overviews. Earlier this year, ads rarely appeared next to AI Overviews. Now they’re common:

Science is the most impacted industry. By keyword saturation, Science leads all verticals for AI Overviews at 25.96%. Computers & Electronics follows at 17.92%, with People & Society close behind at 17.29%.

Why we care. AI Overviews are unevenly and persistently reshaping click behavior, commercial visibility, and ad placement. Volatility is likely to continue, so closely monitor performance shifts tied to AI Overviews.

The report. Semrush AI Overviews Study: What 2025 SEO Data Tells Us About Google’s Search Shift

Dig deeper. In May, I reported on the original version of Semrush’s study in Google AI Overviews now show on 13% of searches: Study.

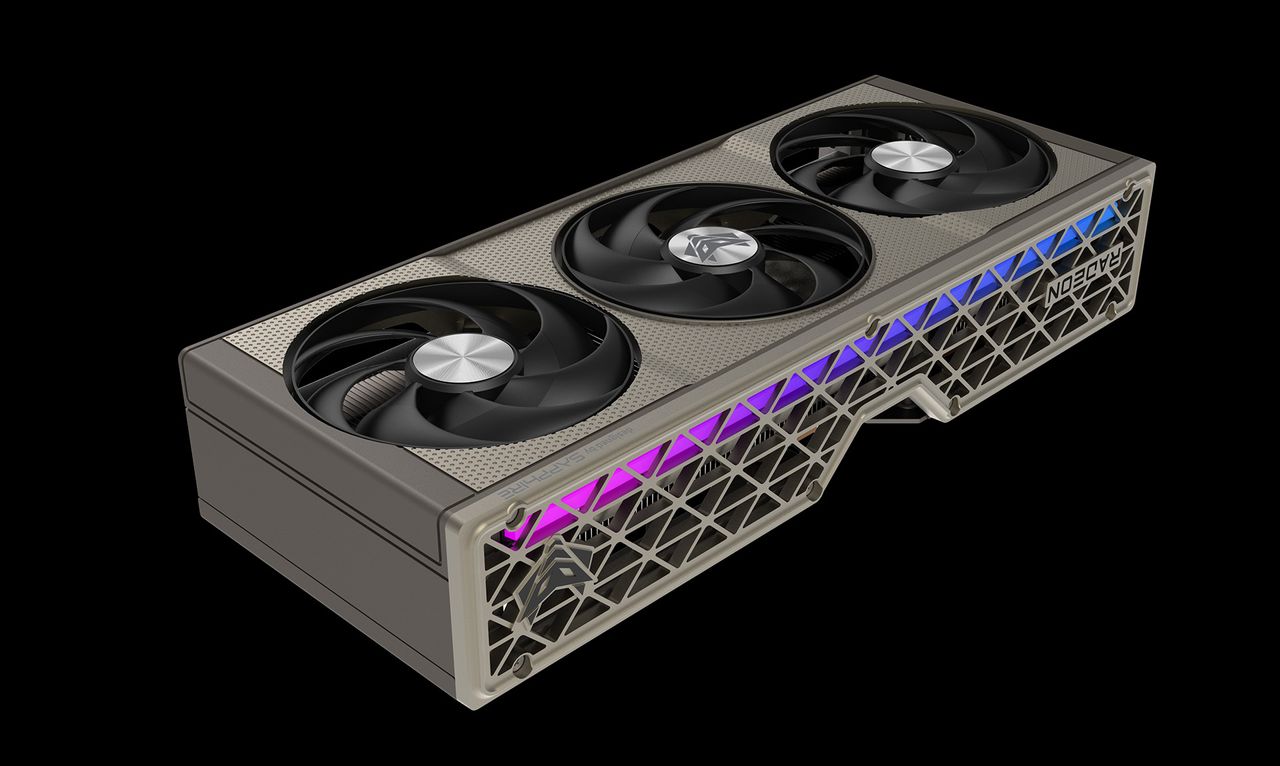

Hotfix 8 for Ghost of Tsushima adds FSR ML Frame Generation to the game

Nixxes Software has released its “Patch 8 Hotfix” for Ghost of Tsushima’s PC version, adding support for AMD FSR ML Frame Generation. This new Frame Generation technique is part of AMD’s FSR “Redstone” update. With this update, users of AMD’s Radeon […]

The post Nixxes updates Ghost of Tsushima to enable FSR ML Frame Generation appeared first on OC3D.

Rec'd is a social discovery platform that turns trusted social signals into personalised recommendations. Right now, people use multiple apps to discover places, save them, verify them, and book. Rec'd integrates this process into one powerful, AI-based app that lets people discover the way they want and save everything to one clean, intelligent platform.

The post AI Liberation: Unlocking Potential Beyond “Security Theater” appeared first on StartupHub.ai.

The prevailing narrative around artificial intelligence often centers on the race for capability, but a recent discussion on the Latent Space podcast unveiled a contrasting, equally vital perspective: the imperative of liberation and radical transparency in AI development. Pliny the Liberator, renowned for his “universal jailbreaks” that dismantle the guardrails of frontier models, and John […]

The post Greylock’s Enduring Legacy: People, Principles, and the AI Frontier appeared first on StartupHub.ai.

Greylock Partners, a venture capital firm celebrating its 60th anniversary this year, offers a compelling study in enduring success through relentless adaptation and an unwavering commitment to core principles. In a recent episode of Uncapped with Jack Altman, General Partner Saam Motamedi, one of Greylock’s youngest partners, delved into the foundational elements that have allowed […]

The post What We Learned Deploying AI within Bloomberg’s Engineering Organization – Lei Zhang, Bloomberg appeared first on StartupHub.ai.

“The reality of applying AI at scale inside a mature engineering organization is far more complex and nuanced,” stated Lei Zhang, Head of Technology Infrastructure Engineering at Bloomberg, during a recent discussion. Zhang, speaking about Bloomberg’s extensive experience integrating AI into the workflows of over 9,000 software engineers, offered a candid look at the practical […]

The post The AI Memory Wars Heat Up Around Video appeared first on StartupHub.ai.

Video has become a dominant signal on the internet. It powers everything from Netflix’s $82 billion Warner Bros Discovery acquisition to the sensor streams feeding warehouse robots and city surveillance grids. Yet beneath this sprawl, AI systems are hitting a wall: they can tag clips and rank highlights, but they struggle to remember […]

The post New EWA study results suggest on-demand pay boosts income appeared first on StartupHub.ai.

Independent EWA study results analyzing over one million EarnIn users suggest that flexible access to earned wages increases monthly income by 11.5 percent.

We are navigating the “search everywhere” revolution – a disruptive shift driven by generative AI and large language models (LLMs) that is reshaping the relationship between brands, consumers, and search engines.

For the last two decades, the digital economy ran on a simple exchange: content for clicks.

With the rise of zero-click experiences, AI Overviews, and assistant-led research, that exchange is breaking down.

AI now synthesizes answers directly on the SERP, often satisfying intent without a visit to a website.

Platforms such as Gemini and ChatGPT are fundamentally changing how information is discovered.

For enterprises, visibility increasingly depends on whether content is recognized as authoritative by both search engines and AI systems.

That shift introduces a new goal – to become the source that AI cites.

A content knowledge graph is essential to achieving that goal.

By leveraging structured data and entity SEO, brands can build a semantic data layer that enables AI to accurately interpret their entities and relationships, ensuring continued discoverability in this evolving economy.

This article explores:

To become a source that AI cites, it’s essential to understand how traditional search differs from AI-driven search.

Traditional search functioned much like software as a service.

It was deterministic, following fixed, rule-based logic and producing the same output for the same input every time.

AI search is probabilistic.

It generates responses based on patterns and likelihoods, which means results can vary from one query to the next.

Even with multimodal content, AI converts text, images, and audio into numerical representations that capture meaning and relationships rather than exact matches.

For AI to cite your content, you need a strong data layer combined with context engineering – structuring and optimizing information so AI can interpret it as reliable and trustworthy for a given query.

As AI systems rely increasingly on large-scale inference rather than keyword-driven indexing, a new reality has emerged: the cost of comprehension.

Each time an AI model interprets text, resolves ambiguity, or infers relationships between entities, it consumes GPU cycles, increasing already significant computing costs.

A comprehension budget is the finite allocation of compute that determines whether content is worth the effort for an AI system to understand.

For content to be cited by AI, it must first be discovered and understood.

While many discovery requirements overlap with traditional search, key differences emerge in how AI systems process and evaluate content.

Your site’s infrastructure must allow AI engines to crawl and access content efficiently.

With limited compute and a finite comprehension budget, platform architecture matters.

Enterprises should support progressive crawling of fresh content through IndexNow integration to optimize that budget.

Ideally, this capability is native to the platform and CMS.
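As a rough sketch of what an IndexNow submission looks like, the snippet below builds (but does not send) the JSON POST request defined by the IndexNow protocol. The host, key, and URL are hypothetical placeholders, and the helper name `build_indexnow_request` is an assumption for this example; a real integration would normally live inside the CMS publishing workflow.

```python
import json
import urllib.request

# Shared IndexNow endpoint; participating search engines also expose their own.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host, key, urls):
    """Build a POST request announcing new or updated URLs via IndexNow."""
    payload = {
        "host": host,
        "key": key,
        # The key file must be hosted on the site to prove ownership.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_indexnow_request(
    host="www.example.com",
    key="hypothetical-key-123",
    urls=["https://www.example.com/products/new-page"],
)

# Sending is deliberately left out of this sketch;
# urllib.request.urlopen(request) would submit it.
```

Submitting only the URLs that changed, at the moment they change, is what keeps crawl effort focused on fresh content.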

Before creating content, you need an entity strategy that accurately and comprehensively represents your brand.

Content should meet audience needs and answer their questions.

Structuring content around customer intent, presenting it in clear “chunks,” and keeping it fresh are all important considerations.

Dig deeper: Chunk, cite, clarify, build: A content framework for AI search

Schema markup, clean information architecture, consistent headings, and clear entity relationships help AI engines understand both individual pages and how multiple pieces of content relate to one another.

Rather than forcing models to infer what a page is about, who it applies to, or how information connects, businesses make those relationships explicit.

AI engines, like traditional search engines, prioritize authoritative content from trusted sources.

Establishing topical authority is essential. For location-based businesses, local relevance and authority are also critical to becoming a trusted source.

Many enterprises claim to use schema but see no measurable lift, leading to the belief that schema doesn’t work.

The reality is that most failures stem from basic implementations or schema deployed with errors.

Tags such as Organization or Breadcrumb are foundational, but they provide limited insight into a business.

Used in isolation, they create disconnected data points rather than a cohesive story AI can interpret.

The more AI knows about your business, the better it can cite it.

A content knowledge graph is a structured map of entities and their relationships, providing reliable information about your business to AI systems.

Deep nested schema plays a central role in building this graph.

A deep nested schema architecture expresses the full entity lineage of a business in a machine-readable form.

In Resource Description Framework (RDF) terms, AI systems need to understand that:

By fully nesting entities – Organization → Brand → Product → Offer → PriceSpecification → Review → Person – you publish a closed-loop content knowledge graph that models your business with precision.
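A minimal sketch of nested schema, assuming a single product page, might look like the following. It uses schema.org types and properties (`brand`, `manufacturer`, `offers`, `priceSpecification`, `review`, `author`), but every value is a hypothetical placeholder, and rooting the graph at `Product` is just one possible arrangement of the entities named above, not the author's prescribed structure.

```python
import json

# Hypothetical product graph; all names and prices are placeholders.
product_graph = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Widget",
    "brand": {"@type": "Brand", "name": "Example Brand"},
    "manufacturer": {"@type": "Organization", "name": "Example Corp"},
    "offers": {
        "@type": "Offer",
        "availability": "https://schema.org/InStock",
        "priceSpecification": {
            "@type": "PriceSpecification",
            "price": "49.99",
            "priceCurrency": "USD",
        },
    },
    "review": {
        "@type": "Review",
        "reviewBody": "Placeholder review text.",
        "author": {"@type": "Person", "name": "Jane Doe"},
    },
}

# Serialize for embedding in a <script type="application/ld+json"> tag.
json_ld = json.dumps(product_graph, indent=2)
print(json_ld)
```

Because each entity is nested inside the one it relates to, a parser does not have to infer which offer belongs to which product or who wrote which review.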

Dig deeper: 8 steps to a successful entity-first strategy for SEO and content

In “How to deploy advanced schema at scale,” I outlined the full process for effective schema deployment – from developing an entity strategy through deployment, maintenance, and measurement.

At the enterprise level, facts change constantly, including product specifications, availability, categories, reviews, offers, and prices.

If structured data, entity lineage, and topic clusters do not update dynamically to reflect these changes, AI systems begin to detect inconsistencies.

In an AI-driven ecosystem where accuracy, coherence, and consistency determine inclusion, even small discrepancies can erode trust.

Manual schema management is not sustainable.

The only scalable approach is automation – using a schema management solution aligned with your entity strategy and integrated into your discovery and marketing flywheel.

As keyword rankings lose relevance and traffic declines, you need new KPIs to evaluate performance in AI search.

Dig deeper: 7 focus areas as AI transforms search and the customer journey in 2026

The web is shifting from a “read” model to an “act” model.

AI agents will increasingly execute tasks on behalf of users, such as booking appointments, reserving tables, or comparing specifications.

To be discovered by these agents, brands must make their capabilities machine-callable. Key steps to prepare include:

Brands that are callable are the ones that will be found. Acting early provides a compounding advantage by shaping the standards agents learn first.

Use this checklist to evaluate whether your entity strategy is operational, scalable, and aligned with AI discovery requirements.

Your martech stack must align with the evolving customer discovery journey.

This requires a shift from treating schema as a point solution for visibility to managing a holistic presence with total cost of ownership in mind.

Data is the foundation of any composable architecture.

A centralized data repository connects technologies, enables seamless flow, breaks down departmental silos, and optimizes cost of ownership.

This reduces redundancy and improves the consistency and accuracy AI systems expect.

When schema is treated as a point solution, content changes can break not only schema deployment but the entire entity lineage.

Fixing individual tags does not restore performance. Instead, multiple teams – SEO, content, IT, and analytics – are pulled into investigations, increasing cost and inefficiency.

The solution is to integrate schema markup directly into brand and entity strategy.

When structured content changes, it should be:

This enables faster recovery and lower compute overhead.

Integrating schema into your entity lineage and discovery flywheel helps optimize total cost of ownership while maximizing efficiency.

Several core requirements define AI readiness.

Together, these efforts make your omnichannel strategy more durable while reducing total cost of ownership across the technology stack.

Thanks to Bill Hunt and Tushar Prabhu for their contributions to this article.

Google’s pitch for AI-powered bidding is seductive.

Feed the algorithm your conversion data, set a target, and let it optimize your campaigns while you focus on strategy.

Machine learning will handle the rest.

What Google doesn’t emphasize is that its algorithms optimize for Google’s goals, not necessarily yours.

In 2026, as Smart Bidding becomes more opaque and Performance Max absorbs more campaign types, knowing when to guide the algorithm – and when to override it – has become a defining skill that separates average PPC managers from exceptional ones.

AI bidding can deliver spectacular results, but it can also quietly destroy profitable campaigns by chasing volume at the expense of efficiency.

The difference is not the technology. It is knowing when the algorithm needs direction, tighter constraints, or a full override.

This article explains:

Smart Bidding comes in several strategies, including:

Each uses machine learning to predict the likelihood of a conversion and adjust bids in real time based on contextual signals.

The algorithm analyzes hundreds of signals at auction time, such as:

It compares these signals with historical conversion data to calculate an optimal bid for each auction.

During the “learning period,” typically seven to 14 days, the algorithm explores the bid landscape, testing bid levels to understand the conversion probability curve.

Google recommends patience during this phase, and in general, that advice holds. The algorithm needs data.

The first problem is that learning periods are not always temporary.

Some campaigns get stuck in perpetual learning and never achieve stable performance.

Dig deeper: When to trust Google Ads AI and when you shouldn’t

The algorithm optimizes for metrics that drive Google’s revenue, not necessarily your profitability.

When a Target ROAS of 400% is set, the algorithm interprets that as “maximize total conversion value while maintaining a 400% average ROAS.”

Notice the word “maximize.”

The system is designed to spend the full budget and, ideally, encourage increases over time.

More spend means more revenue for Google.

Business goals are often different.

You may want a 400% ROAS with a specific volume threshold.

You may need to maintain margin requirements that vary by product line.

Or you may prefer a 500% ROAS at lower volume because fulfillment capacity is constrained.

The algorithm does not understand this context.

It sees a ROAS target and optimizes accordingly, often pushing volume at the expense of efficiency once the target is reached.

This pattern is common. An algorithm increases spend by 40% to deliver 15% more conversions at the target ROAS. Technically, it succeeds.

In practice, cash flow cannot support the higher ad spend, even at the same efficiency.

The algorithm does not account for working capital constraints.
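The arithmetic behind that tension can be sketched in a few lines. The spend figures below are hypothetical; only the 400% target and the 40% spend increase come from the scenario above, and both function names are assumptions for this example.

```python
def roas_percent(conversion_value, ad_spend):
    """ROAS as a percentage: conversion value divided by ad spend."""
    return conversion_value / ad_spend * 100


def extra_cash_needed(current_spend, spend_increase_pct):
    """Additional ad spend required when the algorithm scales up."""
    return current_spend * spend_increase_pct / 100


# Hypothetical baseline: $10,000 spend returning $40,000 in conversion value.
baseline_spend = 10_000
baseline_value = 40_000
print(roas_percent(baseline_value, baseline_spend))  # 400.0

# A 40% spend increase requires $4,000 more cash up front,
# even if efficiency stays exactly on target.
print(extra_cash_needed(baseline_spend, 40))  # 4000.0
```

The ROAS figure is unchanged by the scale-up, which is why the target alone cannot signal a working capital problem.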

AI bidding works well, but it has limits.

Without intervention, several factors can’t be fully accounted for.

Seasonal patterns not yet reflected in historical data

Launch a campaign in October, and the algorithm has no visibility into a December peak season.

It optimizes based on October performance until December data proves otherwise, often missing early seasonal demand.

Product margin differences

A $100 sale of Product A with a 60% margin and a $100 sale of Product B with a 15% margin look identical to the algorithm.

Both register as $100 conversions. The business impact, however, is very different.

This is where profit tracking, profit bidding, and margin-based segmentation matter.
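To make the difference concrete, here is a small sketch that converts revenue into profit using the margins from the example above. The function name and the idea of reporting the result as a conversion value are assumptions for illustration, not a documented Google Ads workflow.

```python
def profit_value(revenue, margin_pct):
    """Profit in dollars for a sale, given its margin as a whole percentage."""
    return revenue * margin_pct / 100


# (product, revenue in dollars, margin in percent), taken from the example above.
sales = [("Product A", 100, 60), ("Product B", 100, 15)]

for name, revenue, margin_pct in sales:
    print(name, "revenue:", revenue, "profit:", profit_value(revenue, margin_pct))
```

Both sales look the same at the revenue level, but Product A contributes four times the profit of Product B.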

Customer lifetime value variations

Unless lifetime value modeling is explicitly built into conversion values, the algorithm treats a first-time customer the same as a repeat buyer.

In most accounts, that modeling does not exist.

Market and competitive changes

When a competitor launches an aggressive promotion or a new entrant appears, the algorithm continues bidding based on historical conditions until performance degrades enough to force adjustment.

Market share is often lost during that lag.

Inventory and supply chain constraints

If a best-selling product is out of stock for two weeks, the algorithm may continue bidding aggressively on related searches because of past performance.

The result is paid traffic that cannot convert.

This is not a criticism of the technology. It’s a reminder that the algorithm optimizes only within the data and parameters provided.

When those inputs fail to reflect business reality, optimization may be mathematically correct but strategically wrong.

Learning periods are normal. Extended learning periods are red flags.

If your campaign shows a “Learning” status for more than two weeks, something is broken.

Common causes include:

When to intervene

If learning extends beyond three weeks, either:

Sometimes the algorithm is simply telling you it does not have enough data to succeed.
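The timing thresholds above can be expressed as a simple check. This is a sketch only: the function name and status labels are assumptions, and the day counts would come from whatever campaign status history is available to you.

```python
def learning_status(days_in_learning):
    """Classify a campaign by how long it has shown a "Learning" status."""
    if days_in_learning <= 14:
        # Within the typical seven-to-14-day learning period.
        return "normal"
    if days_in_learning <= 21:
        # More than two weeks: treat as a red flag and investigate.
        return "red flag"
    # Beyond three weeks: time to intervene.
    return "intervene"


for days in (10, 16, 25):
    print(days, learning_status(days))
```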

Healthy AI bidding campaigns show relatively smooth budget pacing.

Daily spend fluctuates, but it stays within reasonable bounds.

Problematic patterns include:

Budget pacing is a proxy for algorithm confidence.

Smooth pacing suggests the system understands your conversion landscape.

Erratic pacing usually means it is guessing.
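One way to quantify "smooth" versus "erratic" pacing is the coefficient of variation of daily spend. The sketch below is an assumption-heavy illustration: the 0.5 threshold and the sample spend figures are invented for this example and are not a Google benchmark.

```python
import statistics

# Assumed cutoff for this sketch; tune it against your own account history.
CV_THRESHOLD = 0.5


def pacing_cv(daily_spend):
    """Coefficient of variation: standard deviation relative to mean spend."""
    return statistics.pstdev(daily_spend) / statistics.mean(daily_spend)


def is_erratic(daily_spend, threshold=CV_THRESHOLD):
    """Flag pacing whose day-to-day variation exceeds the threshold."""
    return pacing_cv(daily_spend) > threshold


# Hypothetical daily spend in dollars.
smooth = [100, 105, 95, 102, 98]
erratic = [20, 300, 50, 400, 10]

print(is_erratic(smooth))
print(is_erratic(erratic))
```

A relative measure is used so that the same check works for campaigns with very different budgets.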

This is the most dangerous pattern. Performance starts strong, then gradually or suddenly deteriorates.

This shows up often in Target ROAS campaigns.

What happened?

The algorithm exhausted the most efficient audience segments and search terms.

To keep growing volume – because it is designed to maximize – it expanded into less qualified traffic.

Broad match reached further. Audiences widened. Bid efficiency declined.

Sometimes the numbers look fine, but qualitative signals tell a different story.

These quality signals do not directly influence optimization because they are not part of the conversion data.

To address them, the algorithm needs constraints: bid adjustments, audience exclusions, or ad scheduling.

The search terms report is the truth serum for AI bidding performance.

Export it regularly and look for:

A high-end furniture retailer should not spend $8 per click on “free furniture donation pickup.”

A B2B software company targeting “project management software” should not appear for “project manager jobs.”

These situations occur when the algorithm operates without constraints.

Keyword matching is also looser than it was in the past, which means even small gaps can allow the system to bid on queries you never intended to target.
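A first pass over an exported search terms report can be automated with a simple word filter. This is a minimal sketch: the flag words are assumptions chosen to match the two examples above, and a real review would also weigh cost and conversions per term.

```python
# Hypothetical flag words; build your own list from your business context.
FLAG_WORDS = {"free", "donation", "jobs"}


def flag_search_terms(terms):
    """Return each term that contains a flag word, with the words it matched."""
    flagged = {}
    for term in terms:
        hits = sorted(set(term.lower().split()) & FLAG_WORDS)
        if hits:
            flagged[term] = hits
    return flagged


search_terms = [
    "free furniture donation pickup",
    "project manager jobs",
    "project management software",
]

for term, hits in flag_search_terms(search_terms).items():
    print(term, "->", hits)
```

Flagged terms are candidates for negative keywords; the intended query, "project management software", passes through untouched.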

Dig deeper: How to tell if Google Ads automation helps or hurts your campaigns

One-size-fits-all AI bidding breaks down when a business has diverse economics.

The solution is segmentation, so each algorithm optimizes toward a clear, coherent goal.

Separate high-margin products – 40%+ margin – into one campaign with more aggressive ROAS targets, and low-margin products – 10% to 15% margin – into another with more conservative targets.

If the Northeast region delivers 450% ROAS while the Southeast delivers 250%, separate them.

Brand campaigns operate under fundamentally different economics than nonbrand campaigns, so optimizing both with the same algorithm and target rarely makes sense.

Segmentation gives each algorithm a clear mission. Better focus leads to better results.
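The margin-based split can be sketched as a bucketing step that runs before campaigns are built. The product names are hypothetical, and the "unassigned" bucket for margins outside the two stated ranges is an assumption of this example, since the ranges above leave a gap between 15% and 40%.

```python
from collections import defaultdict


def margin_segment(margin_pct):
    """Assign a campaign segment based on product margin percentage."""
    if margin_pct >= 40:
        return "high_margin"
    if 10 <= margin_pct <= 15:
        return "low_margin"
    # Not covered by the stated ranges; needs a manual decision.
    return "unassigned"


# Hypothetical catalog: product name mapped to margin percentage.
catalog = {"Sofa": 45, "Lamp": 12, "Rug": 28, "Desk": 60}

segments = defaultdict(list)
for product, margin_pct in catalog.items():
    segments[margin_segment(margin_pct)].append(product)

for segment, products in segments.items():
    print(segment, products)
```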

Pure automation is not always the answer.

In many cases, hybrid approaches deliver better results.

The most effective setups combine AI bidding with manual control campaigns.

Allocate 70% of the budget to AI bidding campaigns, such as Target ROAS or Maximize Conversion Value, and 30% to Enhanced CPC or manual CPC campaigns.

Manual campaigns act as a baseline. If AI underperforms manual by more than 20% after 90 days, the algorithm is not working for the business.
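The split and the baseline test can be written down as two small helpers. This sketch assumes ROAS is the comparison metric, which the rule above does not specify; the function names and sample figures are also hypothetical.

```python
def split_budget(total_budget, ai_share_pct=70):
    """Split a budget between AI bidding and manual control campaigns."""
    ai_budget = total_budget * ai_share_pct / 100
    return ai_budget, total_budget - ai_budget


def ai_fails_baseline(ai_roas, manual_roas, days_running, max_gap=0.20, min_days=90):
    """True if AI trails the manual baseline by more than max_gap after min_days."""
    if days_running < min_days:
        # Too early to judge.
        return False
    gap = (manual_roas - ai_roas) / manual_roas
    return gap > max_gap


print(split_budget(10_000))
print(ai_fails_baseline(ai_roas=3.0, manual_roas=4.0, days_running=90))
print(ai_fails_baseline(ai_roas=3.5, manual_roas=4.0, days_running=90))
```

Keeping the evaluation window explicit prevents the baseline comparison from being run before the algorithm has had time to stabilize.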

Use tightly controlled manual campaigns to capture the most valuable traffic – brand terms and high-intent keywords – while AI campaigns handle broader prospecting and discovery.

This approach protects the core business while still exploring growth opportunities.

Google now allows advertisers to report cost of goods sold, or COGS, and detailed cart data alongside conversions.

This is not about bidding yet. It is about seeing true profitability inside Google Ads reporting.

Most accounts optimize for revenue, or ROAS, not profit.

A $100 sale with $80 in COGS is very different from a $100 sale with $20 in COGS, but standard reporting treats them the same.

With COGS reporting in place, actual profit becomes visible, dramatically improving the quality of performance analysis.

To set it up, conversions must include cart-level parameters added to existing tracking.

These typically include item ID, item name, quantity, price, and, critically, the cost_of_goods_sold parameter for each product.
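As an illustration of the data involved, the sketch below models two cart items and computes the profit that COGS reporting would surface. Only `cost_of_goods_sold` is named in the text above; the other key names, the SKUs, and the helper function are assumptions for this example and may not match the exact field names your tagging setup requires.

```python
# Hypothetical cart items; key names other than cost_of_goods_sold are assumed.
cart_items = [
    {
        "item_id": "SKU-1",
        "item_name": "Accent Chair",
        "quantity": 1,
        "price": 100.0,
        "cost_of_goods_sold": 80.0,
    },
    {
        "item_id": "SKU-2",
        "item_name": "Side Table",
        "quantity": 1,
        "price": 100.0,
        "cost_of_goods_sold": 20.0,
    },
]


def item_profit(item):
    """Gross profit for one cart line: quantity times (price minus COGS)."""
    return item["quantity"] * (item["price"] - item["cost_of_goods_sold"])


for item in cart_items:
    print(item["item_name"], "revenue:", item["price"], "profit:", item_profit(item))

print("cart profit:", sum(item_profit(item) for item in cart_items))
```

Both items report the same $100 in revenue, yet one contributes $20 in profit and the other $80.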

Google is testing a bid strategy that optimizes for profit instead of revenue.

Access is limited, but advertisers with clean COGS data flowing into Google Ads can request entry.

In this model, bids are optimized around actual profit margins rather than raw conversion value.

This is especially powerful for retailers with wide margin variation across products.

For advertisers without access to the beta, a custom margin-tracking pixel can be implemented manually. It is more technical to set up, but it achieves the same outcome.

Dig deeper: Margin-based tracking: 3 advanced strategies for Google Shopping profitability

AI bidding works best when the fundamentals are in place:

In these conditions, AI bidding often outperforms manual management by processing more signals and making more granular optimizations than humans can execute at scale.

This tends to be true in:

When those conditions hold, the role shifts.

Bid management gives way to strategic oversight – monitoring trends, identifying expansion opportunities, and testing new structures.

The algorithm then handles tactical optimization.

Google is steadily reducing advertiser control under the banner of automation.

For advertisers with complex business models or specific strategic goals, this loss of granularity creates tension.

You are often asked to trust the algorithm even when business context suggests a different decision.

That shift changes the role. You are no longer a bid manager.

You are an AI strategy director who:

No matter how advanced AI bidding becomes, certain decisions still require human judgment.

Strategic positioning – which markets to enter and which product lines to emphasize – cannot be automated.

Neither can creative testing, competitive intelligence, or operational realities like inventory constraints, margin requirements, and broader business priorities.

This is not a story of humans versus AI. It is humans directing AI.

Dig deeper: 4 times PPC automation still needs a human touch

AI-powered bidding is the most powerful optimization tool paid media has ever had.

When conditions are right – sufficient data, a stable business model, and clean tracking – it delivers results manual management cannot match.

But it is not magic.

The algorithm optimizes for mathematical targets within the data you provide.

If business context is missing from that data, optimization can be technically correct and strategically wrong.

If markets change faster than the system adapts, performance erodes.

If your goals diverge from Google’s revenue incentives, the algorithm will pull in directions that do not serve the business.

The job in 2026 is not to blindly trust automation or stubbornly resist it.

It is to master the algorithm – knowing when to let it run, when to guide it with constraints, and when to override it entirely.

The strongest PPC leaders are AI directors. They do not manage bids. They manage the system that manages bids.

The post Billionaire CRE Developer Warns of Data Center Finance Risks appeared first on StartupHub.ai.

The burgeoning demand for artificial intelligence has ignited a gold rush in data center development, but for seasoned commercial real estate (CRE) billionaire Fernando De Leon, this frenetic activity bears unsettling resemblances to past market excesses. De Leon, CEO of Leon Capital Group, recently spoke with CNBC Senior Real Estate Correspondent Diana Olick on the […]

The post Lazard CEO: U.S. economy increasingly a levered bet on AI appeared first on StartupHub.ai.

Lazard CEO Peter Orszag joined CNBC’s “Squawk Box” to discuss the current state of the economy, the impact of the AI boom, and the broader implications for businesses and employment. He articulated a bifurcated economic landscape where AI-driven sectors are experiencing significant growth, contrasted with other areas that are not seeing the same level of […]

The post Data 360 Powers Trusted AI: A New Foundation for ISVs appeared first on StartupHub.ai.

Salesforce's Data 360, now generally available, provides ISVs with a critical unified data foundation essential for developing and deploying trusted AI solutions.

Preferred Sources in Top Stories has expanded globally: it's now available for English-language users worldwide, and we’ll roll it out to all supported languages early n…

Shopify powers more than 6 million live ecommerce websites, supported by a robust app ecosystem that can extend nearly every part of the customer journey.

Anyone can develop an app to perform virtually any function.

But with so many integrations to choose from, ecommerce teams often waste time testing add-ons that promise revenue gains but fail to deliver.

Having worked across a wide range of Shopify implementations, I’ve seen which tools consistently improve checkout completion, recover abandoned carts, and increase revenue.

Based on that experience, I’ve organized the most effective integrations into three tiers by priority – so you can implement the essentials first, then move on to more advanced optimization.

With 54.5% of holiday purchases happening on mobile, the ecommerce experience must be seamless and flexible.

As a result, every Shopify site should have two components integrated into its storefront: digital wallets and buy now, pay later (BNPL) options.

Without these in place, Shopify users introduce unnecessary friction into the purchase journey and risk sending customers to competitors.

The good news is that both components integrate natively with Shopify, requiring no custom development.

Digital wallets, such as Apple Pay, Google Pay, and PayPal, autofill delivery and payment information with a single click, eliminating the friction of typing on a small screen.

This ease of use can shorten the purchase journey to just a few clicks between a social ad and checkout.

Adoption is accelerating. Up to 64% of Americans use digital wallets at least as often as traditional payment methods, and 54% use them more often.

Beyond payment convenience, customers also expect flexibility.

BNPL providers, including Klarna and Afterpay, allow buyers to spread payments over time, reducing price objections at checkout.

These options contributed $18.2 billion to online spending during last year’s holiday season – an all-time high, according to Adobe.

Together, digital wallets and BNPL form the foundation of a modern, mobile-first checkout experience.

With these essentials in place, Shopify users can focus on tools that re-engage customers and bring them back to complete their purchases.

Dig deeper: The ultimate Shopify SEO and AI readiness playbook

The second tier focuses on re-engagement – tools designed to bring back customers who have already shown intent.

These integrations improve abandoned-cart recovery, increase repeat purchases, and build trust through social proof.

Email remains one of the most effective channels for re-engaging customers at every stage of the journey.

Klaviyo and Attentive are strong options for Shopify users because both offer deep platform integration with minimal setup.

Both platforms also support SMS, allowing Shopify sellers to send automated text messages directly to customers’ mobile devices.

SMS consistently delivers higher open, click-through, and conversion rates than email, making it especially effective for re-engagement use cases such as abandoned-cart recovery.

Together, these tools enable targeted campaigns and sophisticated automated flows that drive incremental revenue.

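However, both channels are regulated. The TCPA requires prior express consent for automated marketing texts, and CAN-SPAM requires that email recipients be able to opt out (it does not itself mandate opt-in, though permission-based lists are standard practice).

As a result, sellers should generally limit these channels to customers who have agreed to receive marketing messages.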

While Attentive and Klaviyo effectively reach customers who have opted in to marketing, CartConvert helps sellers engage the 50% to 60% of shoppers who have not.

The platform uses real people to contact cart abandoners via SMS. Because the outreach is not automated, TCPA restrictions do not apply.

CartConvert agents have live conversations with potential customers about their shopping experience.

They are familiar with the products and can guide buyers back toward a purchase by suggesting alternatives or offering discounts.

Running CartConvert alongside Klaviyo or Attentive ensures both subscribers and non-subscribers are included in re-engagement efforts.

Human-centered marketing also plays a role in building buyer confidence.

Today’s online shoppers rely heavily on reviews when making purchasing decisions.

When reviews are integrated directly into the shopping experience, they help establish trust and legitimacy, which in turn drive higher conversion rates.

A product with five reviews is 270% more likely to be purchased than one with no reviews, research from the Spiegel Research Center at Northwestern University found.

Shopify users can choose from several review aggregators that pull Google reviews into product pages.

Sellers should prioritize aggregators that also sync with Google Merchant Center, which powers Google Ads.

Tools such as Okendo, Yotpo, and Shopper Approved integrate smoothly with both Shopify and Google’s ecosystem.

When reviews sync with Merchant Center, they can appear in Google Shopping ads, improving ad performance.

While these tools add cost, they are also proven to generate incremental revenue that offsets the investment.

Dig deeper: How to make ecommerce product pages work in an AI-first world

The final tier includes more advanced integrations designed to help sellers optimize their sales funnel and performance at scale.

GA4’s changes to reporting, session logic, and interface have made attribution more difficult for many ecommerce teams.

As a result, sellers are increasingly seeking clearer, independent performance insights.

Since 2023, Triple Whale has emerged as a leading alternative to Google Analytics, offering third-party attribution tools that integrate seamlessly with Shopify.

The platform supports multiple attribution models – including first-click, last-click, and linear – along with cross-platform cost integration.

It also provides real-time data, which Google Analytics does not.

This capability becomes especially valuable during high-pressure sales periods, such as Black Friday, when delayed reporting can lead to missed opportunities.

Although Triple Whale can cost up to $10,000 annually for mid-size brands, the improved data quality often justifies the investment for teams scaling paid acquisition.
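
To make the attribution models named above concrete, here is a minimal sketch of how first-click, last-click, and linear attribution split one order's revenue across a customer's touchpoints. It is an illustration only, not Triple Whale's implementation; the function name `attribute`, the channel labels, and the sample path are all hypothetical.

```python
from collections import defaultdict


def attribute(touchpoints, revenue, model="linear"):
    """Split one order's revenue across the channels in its path.

    touchpoints: ordered list of channel names, earliest first (hypothetical data).
    model: "first_click", "last_click", or "linear".
    """
    if not touchpoints:
        return {}
    credit = defaultdict(float)
    if model == "first_click":
        # All credit goes to the first channel the shopper touched.
        credit[touchpoints[0]] += revenue
    elif model == "last_click":
        # All credit goes to the final channel before purchase.
        credit[touchpoints[-1]] += revenue
    elif model == "linear":
        # Every touchpoint receives an equal share; repeated channels accumulate.
        share = revenue / len(touchpoints)
        for channel in touchpoints:
            credit[channel] += share
    else:
        raise ValueError(f"unknown attribution model: {model}")
    return dict(credit)


# Hypothetical path for a single $100 order.
path = ["tiktok_ad", "email", "google_search", "email"]
for model in ("first_click", "last_click", "linear"):
    print(model, attribute(path, 100.0, model))
```

The same order looks very different depending on the model: first-click credits the TikTok ad, last-click credits email, and linear spreads the revenue across all four touches. This is why comparing models side by side can change budget decisions.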

For sellers focused on improving conversion rates, landing page testing is essential.

While Shopify is relatively easy to use, making changes to a live storefront for A/B testing carries the risk of breaking the site.

Replo allows Shopify users to build custom landing pages that can be tested at scale without coding.

These pages typically provide a better user experience than default Shopify themes.

Replo can also use site data to personalize landing pages based on a shopper’s browsing history.

As a result, Replo-built pages often convert at higher rates than static site pages.

TikTok continues to grow as a paid media channel, but it has traditionally presented a higher barrier to entry for advertisers.

Previously, sellers needed an active TikTok account and could only purchase ads within the app, adding complexity and cost.

TikTok’s Shopify integration allows sellers to create ads that link directly to their websites, rather than keeping users inside the app.

This change has lowered the barrier to entry and expanded access to the platform.

Early testing shows promise for use cases such as cart abandonment, making the integration worth exploring despite its relative immaturity.

Dig deeper: Ecommerce SEO: Start where shoppers search

Shopify is a powerful platform for ecommerce, but maximizing results requires going beyond its default features.

Sellers do not need to implement every solution at once.

Instead, conduct a quick audit of the existing stack against this framework, identify gaps, and prioritize the tools that improve conversion and re-engagement.

Shopify’s flexibility is its greatest strength, and its app ecosystem enables sellers to turn more visitors into buyers.

Optimizing for AI search is “the same” as optimizing for traditional search, Google SVP of Knowledge and Information Nick Fox said in a recent podcast. His advice was simple: build great sites with great content for your users.

More details. Fox made the point on the AI Inside podcast, during an interview with Jason Howell and Jeff Jarvis. Here is the transcript from the 22-minute mark:

Jarvis: “Is there guidance for enlightened publishers who want to be part of AI about how they should view, should they view their content in any way differently now?”

Fox: “The short answer is no. The short answer is what you would have built and the way to optimize to do well in Google’s AI experiences is very similar, I would say the same, as how to perform well in traditional search. And it really does come down to build a great site, build great content. The way we put it is: build for users. Build what you would want to read, what you would want to access.”

Why we care. If you have practiced SEO for years, you are well equipped for AI Search: many, if not all, of the skills you built doing SEO still apply. So have at it.

The video. Is AI Search Hurting The Open Web? With Google’s Nick Fox // AI Inside #104

We celebrated a major milestone in June: the return of SMX Advanced as an in-person event. It was our first since 2019.

More than a conference, SMX Advanced 2025 was a reunion. Search marketers from around the world came together to connect, exchange ideas, and learn the most current and advanced insights in search.

But search never stands still. With rapid shifts in AI SEO, constant algorithm changes, and the challenge of balancing generative AI with a human touch, the need for truly advanced, actionable education has never been greater.

We’re committed to making the SMX Advanced 2026 program our most relevant, advanced, and exciting deep-dive experience yet. And we can’t do it without you – the expert community that makes this event legendary.

We’re inviting you to directly shape the curriculum for 2026.

Help us build a program that tackles the biggest challenges and opportunities on your radar by completing our short survey. Tell us:

Fill out the survey here.

To thank you for your time and insights, everyone who completes the survey will have the opportunity to enter an exclusive drawing.

One lucky participant will win a coveted All Access pass to SMX Advanced 2026, taking place June 3-5 at the Westin Boston Seaport.

Beyond shaping the agenda, we also invite you to submit a session pitch. If you have a breakthrough strategy, an innovative case study, or next-level insights, this is your chance to help lead the industry conversation.

Read our guide to speaking at SMX for more details on how to submit a session idea. When you’re ready, create your profile and send us your session pitch.

We look forward to your submissions and insights! If you have any questions, feel free to reach out to me at kathy.bushman@semrush.com.

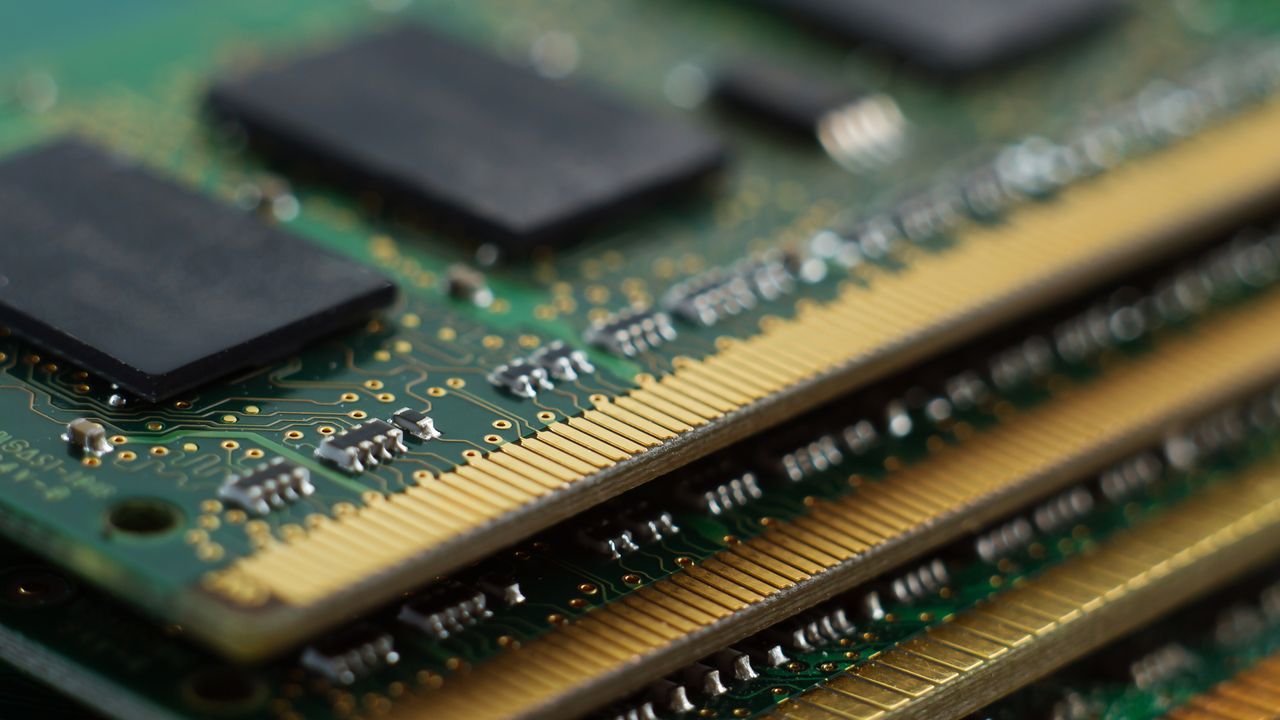

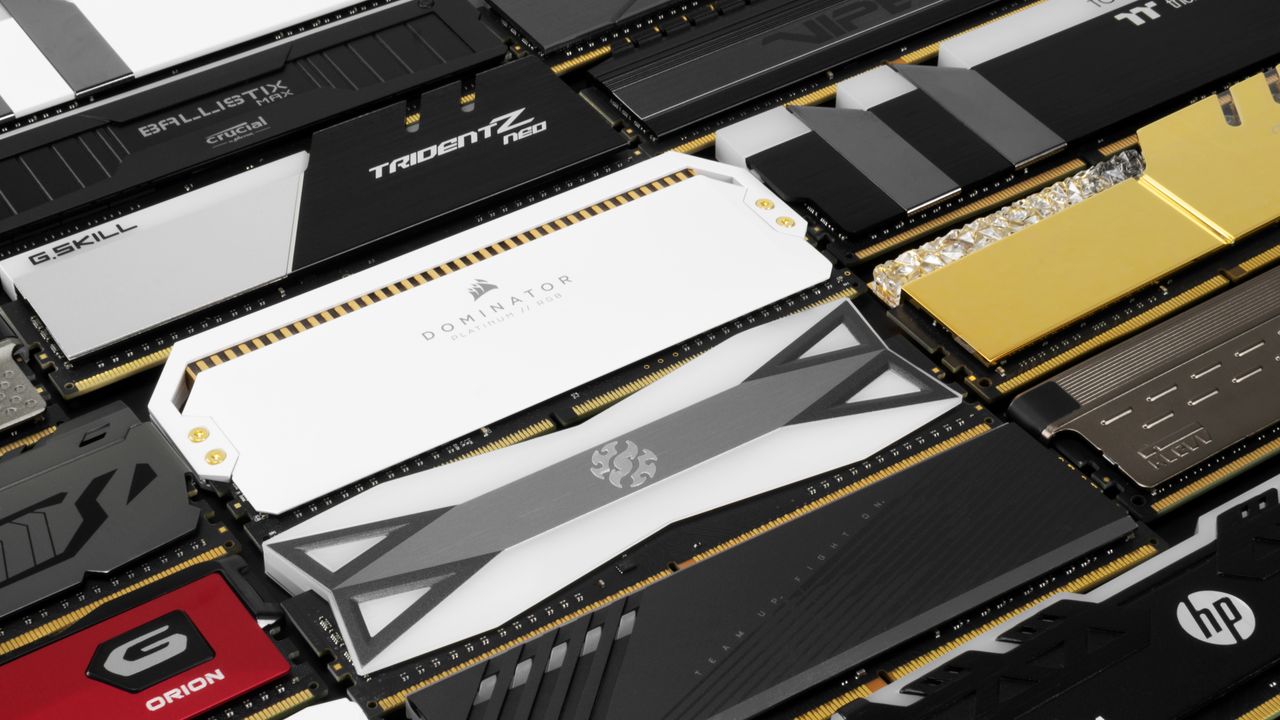

High DDR5 pricing is causing AMD/AM4 CPU price rises

Consumer-grade DDR5 memory modules have seen price increases of 178-258%, forcing PC builders to consider alternative upgrade paths. Instead of moving to newer DDR5 platforms, some PC builders are upgrading to AMD’s DDR4-based AM4 platform. Why? The answer is simple: they can keep using the DDR4 memory they […]

The post AMD AM4 CPU pricing spike as PC market forces alternative upgrades appeared first on OC3D.

The post Progress Stalls: Sheryl Sandberg Warns AI Could Exacerbate Gender Inequality appeared first on StartupHub.ai.

The latest Lean In-McKinsey study reveals a stark truth: progress for women in the workplace is not just slowing, it’s stalling. Sheryl Sandberg, a pivotal figure in advocating for women’s leadership, returned to the public spotlight to deliver this sobering message, underscoring how emerging technologies like artificial intelligence threaten to further widen the gender gap. […]

The post AI Fuels Megadeal Surge, Redefining M&A Landscape appeared first on StartupHub.ai.

Nearly a quarter of megadeals this year were AI-driven, a stark indicator of artificial intelligence’s transformative power in the M&A landscape. This trend, highlighted by Paul Griggs, U.S. Senior Partner at PwC, during his recent interview with Frank Holland on CNBC’s Worldwide Exchange, underscores a pivotal shift where strategic positioning and technological advancement are paramount. […]

The post Unifying AI Operations: Flexible Orchestration Beyond Kubernetes appeared first on StartupHub.ai.

The sheer velocity of AI innovation demands an infrastructure that can adapt, not just scale. At IBM’s TechXchange in Orlando, Solution Architect David Levy and Integration Engineer Raafat “Ray” Abaid illuminated the critical need for a paradigm shift in how AI and machine learning workloads are managed, moving beyond the traditional automation paradigms. Their discussion […]

The post House proposes bill to advance data center buildout speed appeared first on StartupHub.ai.

The proposed legislation, dubbed “The SPEED Act,” seeks to significantly reduce the time required for permitting and construction of data centers and associated power infrastructure. This is a crucial development, as the voracious appetite of AI for computational power necessitates a corresponding acceleration in the physical infrastructure that supports it. The bill proposes to limit […]

Google Search Console appears to have fixed the weeks-long delay in Performance reports. After several weeks of 50+ hour lag times, the reports now seem up to date as of the past few hours.

Now up-to-date. If you check the Search Performance report now, you should see a normal delay of about two to six hours. Over the past few weeks, that delay had stretched to more than 70 hours.

This is what I see:

The delays began a few weeks ago and took roughly three weeks to fully clear, including the backlog of data.

Page indexing report. Meanwhile, the Page Indexing report delay we reported weeks ago is still unresolved. The report is now almost a month behind, and Google has not fixed it yet. Google posted a notice at the top of the report that says:

Why we care. If you rely on Search Console data for analytics and stakeholder or client reporting, this has been extremely frustrating. The Performance reports now appear to be updating normally, but the Page Indexing report remains heavily delayed and will continue to create reporting headaches.

Meanwhile, Google released a number of new features in the past few weeks, including:

Managing large catalogs in Google Performance Max can feel like handing the algorithm your wallet and hoping for the best.

La Maison Simons faced that exact challenge: too many products and not enough control. Then they rebuilt their segmentation with Channable Insights and turned a “black box” campaign into a revenue-generating machine.

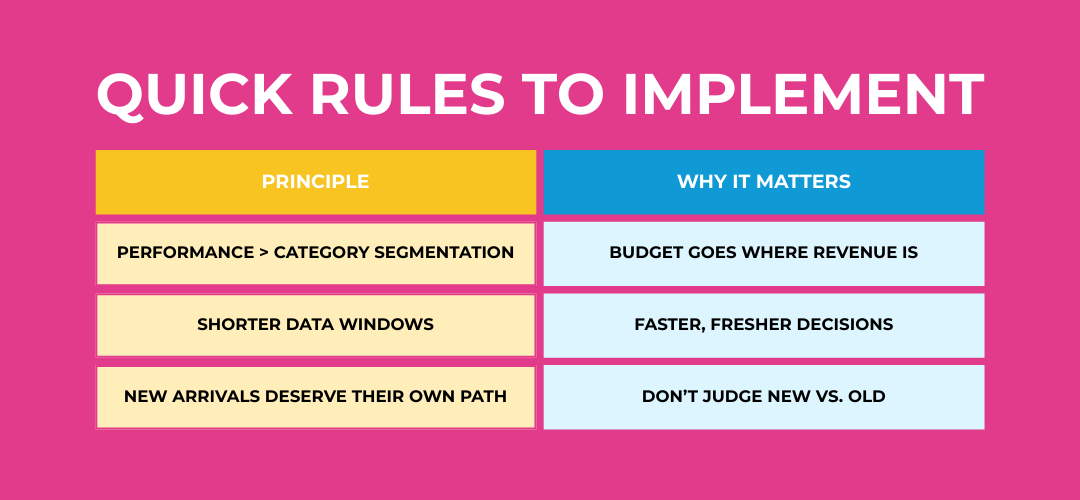

Simons originally split campaigns by product category. It sounded logical – until their best-selling sweater ate the budget and newer or overlooked products never had a chance to surface.

Static segmentation meant limited visibility and slow decisions.

Marketers stayed stuck making manual tweaks while Google kept auto-prioritizing only what was already working.

Enter Channable Insights. Product-level performance data (ROAS, clicks, visibility) now powers dynamic grouping:

Products automatically move between these segments as performance shifts – no manual work needed. As Etienne Jacques, Digital Campaign Manager, Simons, put it:

“One super popular item no longer takes all the money.”

Instead of waiting 30 days for signals, Simons switched to a rolling 14-day window.

The result: faster reactions, sharper accuracy, and less wasted spend in a fast-moving catalog.
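
The rolling-window segmentation described above can be sketched roughly as follows. This is an assumption-laden illustration, not Channable's or Simons' actual logic: the segment names (`top_performer`, `underperformer`, `low_visibility`), the spend and ROAS thresholds, and the sample rows are all hypothetical. Only the 14-day window and the use of product-level ROAS come from the case study.

```python
from datetime import date, timedelta


def rolling_totals(rows, today, window_days=14):
    """Sum spend and revenue per product over the trailing window.

    rows: iterable of (product_id, day, spend, revenue) tuples (hypothetical schema).
    The window covers the `window_days` days ending on `today`, inclusive.
    """
    start = today - timedelta(days=window_days)
    totals = {}
    for product_id, day, spend, revenue in rows:
        if start < day <= today:
            s, r = totals.get(product_id, (0.0, 0.0))
            totals[product_id] = (s + spend, r + revenue)
    return totals


def segment(spend, revenue, min_spend=5.0, target_roas=4.0):
    """Assign a segment from windowed spend and revenue (thresholds are made up)."""
    if spend < min_spend:
        # Too little spend to judge: the product has barely been shown.
        return "low_visibility"
    roas = revenue / spend
    return "top_performer" if roas >= target_roas else "underperformer"


today = date(2025, 12, 15)
rows = [
    ("sweater", date(2025, 12, 10), 100.0, 600.0),
    ("sweater", date(2025, 11, 20), 500.0, 500.0),  # outside the 14-day window
    ("scarf", date(2025, 12, 12), 2.0, 0.0),
    ("boots", date(2025, 12, 14), 50.0, 100.0),
]
totals = rolling_totals(rows, today)
segments = {pid: segment(s, r) for pid, (s, r) in totals.items()}
print(segments)
```

Re-running this daily lets products move between segments as their windowed performance changes, and a shorter window means older data (such as the November row here) stops influencing the decision sooner.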

Why stop at Google? The same segmentation logic was automatically applied on:

Cross-channel consistency creates compounding optimization.

Without raising ad spend, Simons unlocked:

Even the “invisibles” turned into surprise profit drivers once they finally got the spotlight.

Automation restored marketing control – it didn’t remove it.

Teams can finally learn from the data and influence which products grow, instead of letting PMax run everything on autopilot.

Want Simons-style ROAS gains without extra ad spend? Start by testing the quality of your product data with a free feed and segmentation audit.

How much have DDR5 memory prices increased?

We all know that DDR5 memory pricing has shot up, but how bad is the situation? Has AI-driven datacenter demand ruined the DRAM market? Yes, but how much is it hitting our wallets? Today we have looked at today’s DRAM pricing and have compared it to 30 days […]

The post It’s bad – Here’s how much DDR5 pricing has increased appeared first on OC3D.

The post Physical AI’s Off-Screen Revolution: Sanjit Biswas on Scaling Real-World Impact appeared first on StartupHub.ai.

The next transformative wave of artificial intelligence is unfolding not in the digital ether, but in the tangible, messy reality of the physical world. This was the central thesis articulated by Sanjit Biswas, CEO of Samsara, in a recent discussion with Sequoia Capital’s Sonya Huang and Pat Grady. Biswas, a serial founder known for scaling […]

Google's core updates can trigger issues that standard SEO audits fail to catch. Here are eight factors to check.

The post Eight Overlooked Reasons Why Sites Lose Rankings In Core Updates appeared first on Search Engine Journal.

Samsung denies SATA SSD phase-out rumours, calling them false

Samsung has officially denied reports that it plans to phase out its SATA SSDs and other consumer products. This follows recent rumours that Samsung planned to wind down its SATA SSD production to free up manufacturing capacity for data centre and AI customers. With Micron killing […]

The post Samsung refutes consumer SSD phase-out rumours appeared first on OC3D.

Introducing VT Chat, a privacy-first AI chat application that keeps all your conversations local while providing advanced research capabilities and access to 15+ AI models including Claude 4 Sonnet and Claude 4 Opus, O3, Gemini 2.5 Pro and DeepSeek R1.

Research features: Deep Research does multi-step research with source verification, and Pro Search adds real-time web search with grounding powered by Google Gemini.

There's also document processing for PDFs, a "thinking mode" to see complete AI reasoning, and structured extraction to turn documents into JSON. AI-powered semantic routing automatically activates tools based on your queries.

Live website previews in your Mac menu bar

Autonomous AI for smarter e-signatures

Detect hidden apps on macOS

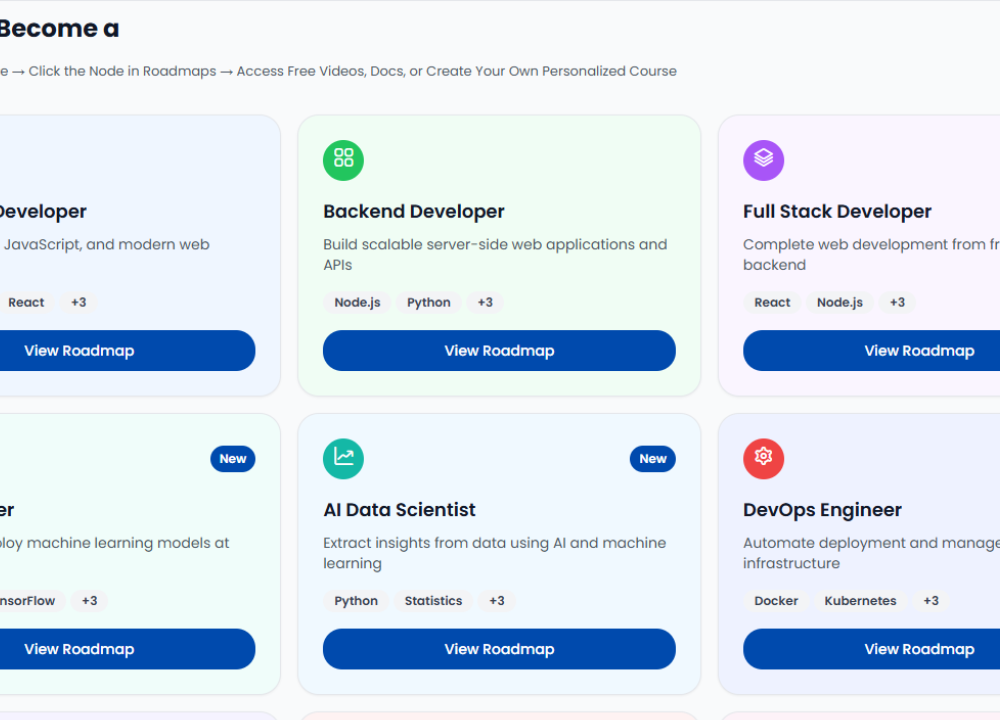

The post Salesforce AI Careers: A New Talent Pipeline Emerges appeared first on StartupHub.ai.

Salesforce's global Workforce Development programs are actively shaping the landscape of AI careers, equipping over 120,000 learners with critical skills and certifications.

The post Amazon Upskills Workforce for Agentforce AI Era appeared first on StartupHub.ai.

Amazon is strategically investing in employee 'Agentforce AI' skills through Salesforce Trailhead, preparing its workforce for the agentic AI era.

Open Source, Free Anonymous AI Chat - Ready to Run Locally

Read books with Elon Musk, Steve Jobs, or anyone you choose

Test apps in a click with AI QA agents that scale like infra

One dashboard to run and organize multiple AI CLI agents

Dial in espresso & pourover

A showcase for AI-assisted builds, inspiration, and how-tos

Test data as code: YAML rules, Git versioned, & CI/CD ready

A browser-first marketplace for PC games

Private AI chat with 30+ open source models

Open source DevOps agent for devs who just want to ship

Easiest solution to deploy multimodal AI to mobile

The post NVIDIA Acquires SchedMD, Bolstering AI Infrastructure appeared first on StartupHub.ai.

NVIDIA's acquisition of SchedMD, the creator of Slurm, strategically enhances its control over critical open-source workload management for HPC and AI.

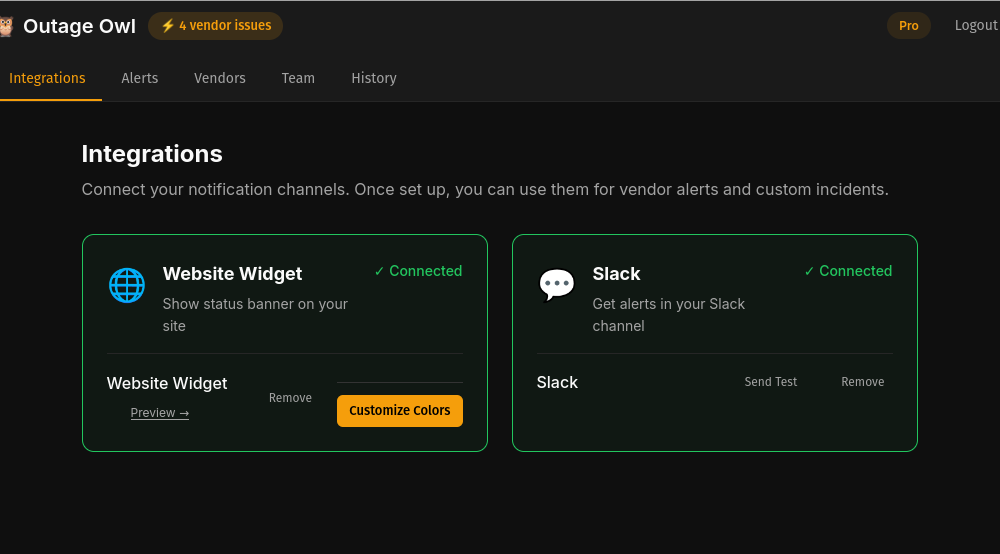

Outage Owl monitors 20+ vendor status pages in real time and alerts your team and customers when issues arise. Add a single script to show a website banner during outages and connect Slack to notify your team before tickets pile up. Create custom incidents and messages, tune alert rules, delays, and quiet hours, and keep everyone informed within seconds. Set up in under five minutes, and start free with one alert rule.

Apparently, no one knows if TikTok will be allowed to remain in the U.S.

Insights into some of the major trends among Snapchat users in 2025.

Some handy pointers for your LinkedIn video content approach.

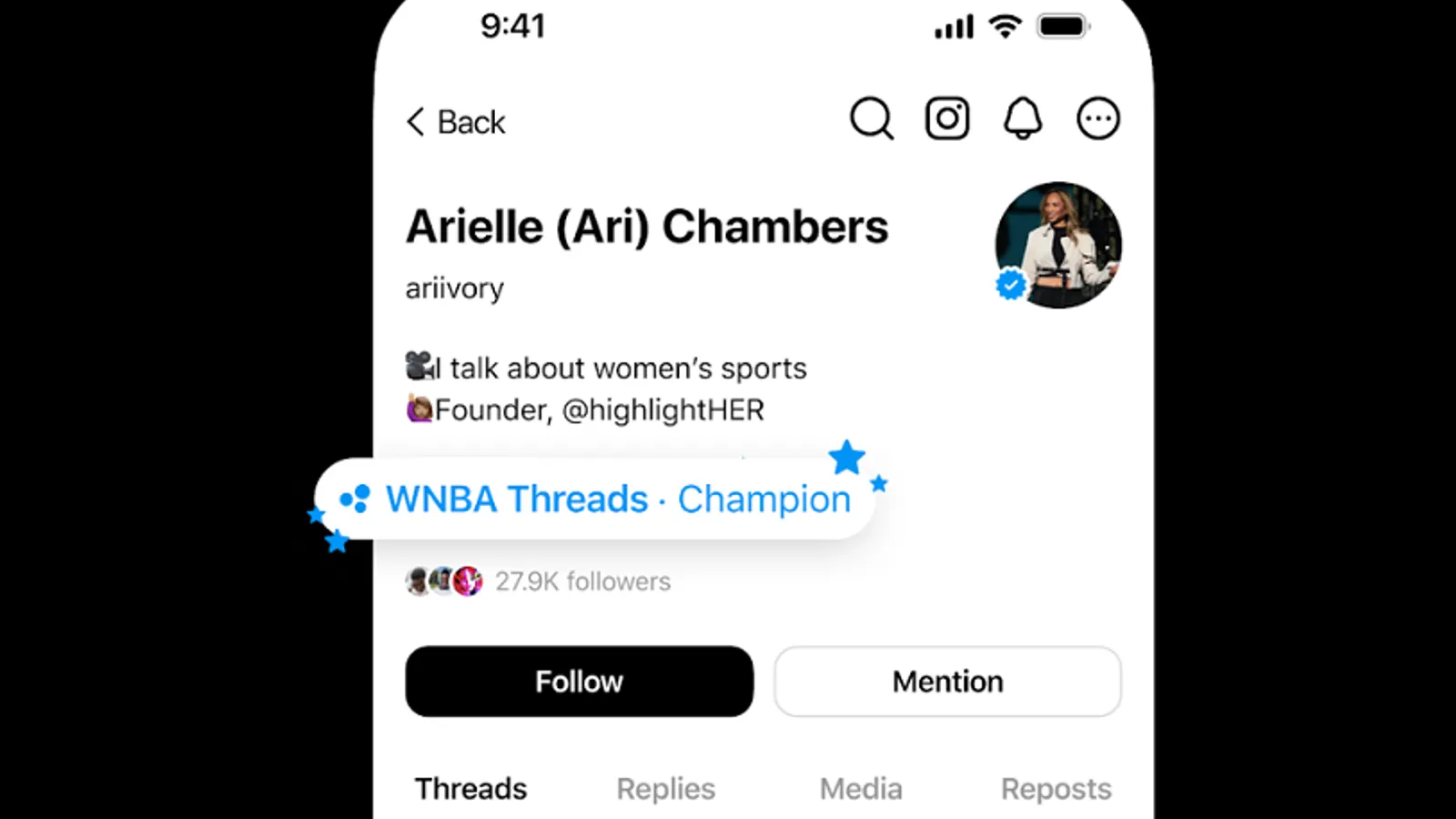

Threads is looking to encourage more topical engagement in the app.

Ahrefs data suggests Google’s AI Mode and AI Overviews often align on meaning while citing different URLs.

The post Google AI Mode & AI Overviews Cite Different URLs, Per Ahrefs Report appeared first on Search Engine Journal.

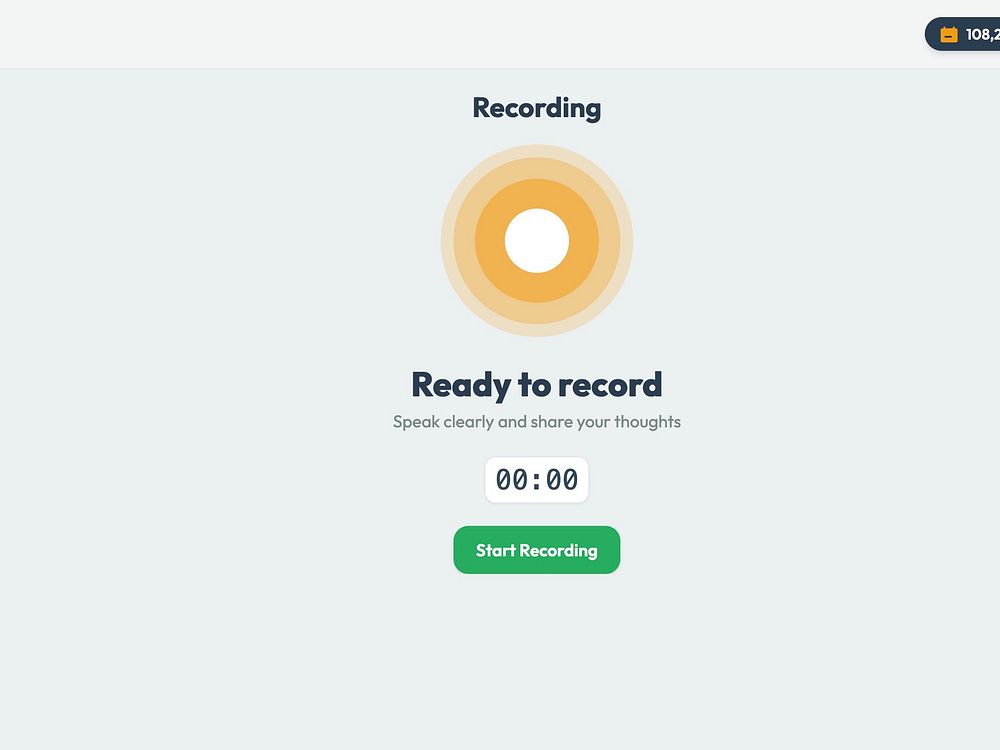

Zinggit is an AI-powered voice note to text app designed to take your idea to content quicker. No more typing out ideas, or trying to create an outline. Just speak your thoughts and 'vibe type' your first draft. Perfect for busy business owners with tons of ideas, agency owners trying to sound out their next article, or social media managers who want to summarise an idea into a post.

The post White House AI Czar David Sacks on Navigating the AI Frontier: Regulation, Race, and Jobs appeared first on StartupHub.ai.

The rapid acceleration of artificial intelligence has ignited a multifaceted debate spanning innovation, national security, and economic impact, a tension vividly explored in a recent CNBC “Closing Bell Overtime” interview. David Sacks, the White House AI and Crypto Czar, spoke with Morgan Brennan about President Trump’s executive order aiming to streamline AI regulation, the intensifying […]

We’re enhancing Gemini Deep Research to help you visualize complex information instantly. Now available to Google AI Ultra subscribers, Deep Research can go beyond text …

Google's John Mueller explains that staggered site migrations may impact how the site is understood.

The post Google Explains Why Staggered Site Migrations Impact SEO Outcome appeared first on Search Engine Journal.

Phantom is an AI website builder designed to help anyone go from an idea to a fully functional website in just a few minutes. It handles all the heavy lifting automatically — setting up authentication, database, payments, analytics, and even AI integrations for you. Instead of juggling multiple tools or writing code from scratch, you just describe what you want, and Phantom builds it out instantly.

It runs on a network of specialized AI agents, each focused on a different area like frontend, backend, bug fixing, and review. This makes the process faster, more accurate, and more creative. Phantom lets you skip the setup and get straight to building — without needing technical expertise.

The post Tesla’s Trillion-Dollar AI Future: Dan Ives on Autonomy and Robotics appeared first on StartupHub.ai.

Wedbush Securities’ Dan Ives recently offered a compelling vision of Tesla’s future, asserting that the company, alongside Nvidia, stands at the forefront of the “physical AI revolution.” This isn’t merely about electric vehicles; it’s about the profound convergence of hardware and artificial intelligence to create tangible, real-world autonomous capabilities. Ives’s commentary underscores a pivotal shift […]

The post Bolmo Advances Byte-Level Language Models with Practicality appeared first on StartupHub.ai.

AI2's Bolmo makes byte-level language models practical by "byteifying" existing subword models, offering superior character understanding and flexible inference.

The post Rebuilding American Industry: The AI-Powered Factory Renaissance appeared first on StartupHub.ai.

Erin Price-Wright, a General Partner at Andreessen Horowitz, unveiled a compelling vision for “The Renaissance of the American Factory” as part of the firm’s 2026 Big Ideas series. Her presentation posits that America’s industrial muscle, which has atrophied over decades due to offshoring, financialization, and regulatory burdens, is poised for a significant resurgence. This revitalization […]

The post Beyond Snippets: The Evolving Landscape of AI Code Evaluation appeared first on StartupHub.ai.

The rapid ascent of AI in code generation, from single-line suggestions to architecting entire codebases, demands an equally sophisticated evolution in how these models are evaluated. This critical shift was at the heart of Naman Jain’s compelling presentation at the AI Engineer Code Summit, where the Engineering lead at Cursor unpacked the journey of AI […]

The post Google DeepMind Unveils Gemini 3 and Nano Banana Pro, Redefining AI Development appeared first on StartupHub.ai.

Google DeepMind recently showcased its latest advancements in artificial intelligence at the AI Engineer Code Summit, where Product Manager Kat Kampf and Product & Design Lead Ammaar Reshi introduced Gemini 3 Pro and Nano Banana Pro. Their presentation, “Building in the Gemini Era,” highlighted how these new models, combined with the Google AI Studio, are […]

The post NVIDIA Nemotron 3 Nano launches on FriendliAI appeared first on StartupHub.ai.

FriendliAI is aggressively positioning itself as the crucial infrastructure layer for productionizing the new wave of efficient, open-source agentic AI models.

The post Unsloth Accelerates LLM Fine-Tuning on NVIDIA GPUs appeared first on StartupHub.ai.

Unsloth, combined with NVIDIA GPUs and Nemotron 3 models, is democratizing efficient, specialized LLM fine-tuning for next-generation agentic AI applications.

The post Vertex AI Unlocks Flexible Open Model Deployment appeared first on StartupHub.ai.

The accelerating pace of AI development has made the deployment of open models a critical challenge, often mired in infrastructure complexities. Google Cloud’s Vertex AI platform, as detailed by Developer Advocate Ivan Nardini in his recent video, “Serving open models on Vertex AI: The comprehensive developer’s guide,” directly addresses this by offering a strategic roadmap […]

We’re sharing a practical playbook to help organizations streamline and enhance sustainability reporting with AI. Corporate transparency is essential, but navigating frag…

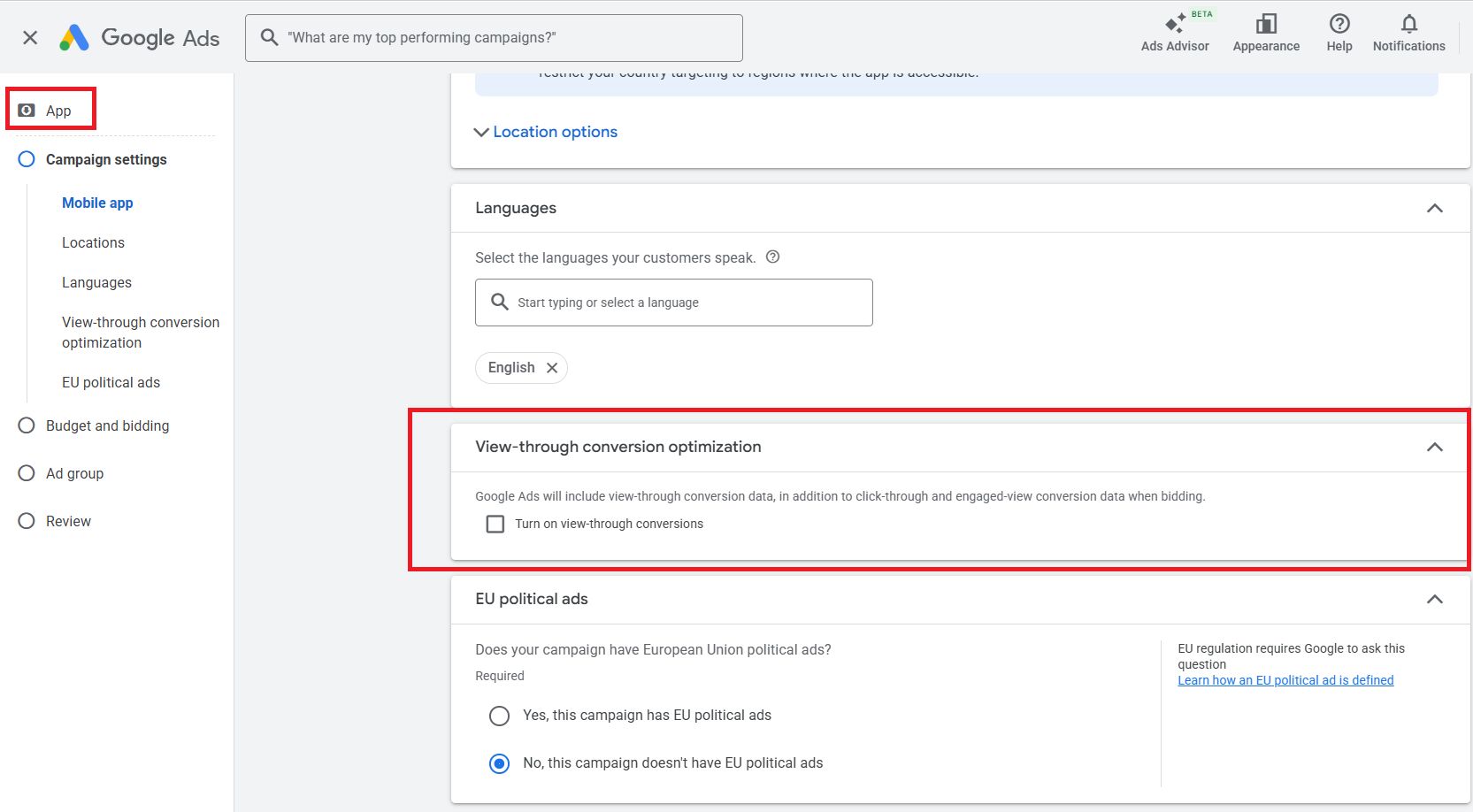

Google Ads launched view-through conversion (VTC) optimized bidding for Android app campaigns, letting advertisers toggle bidding toward conversions that happen after an ad is viewed rather than clicked.

Previously, VTC worked as a hidden signal inside Google’s systems. Now, it’s a clear, explicit optimization option.

The shift. Google is shifting app advertising away from click-centric logic and toward incrementality and influence, especially for formats like YouTube and in-feed video. This update aligns bidding more closely with how users actually discover and install apps.

Why we care. You can now bid beyond clicks, improving measurement for video-led app campaigns and strengthening the case for upper-funnel activity.

Who benefits most. Video-first app advertisers and teams focused on awareness, engagement, and long-term growth – not just last-click installs.

What to watch

First seen. This update was first spotted by Senior Performance Marketing Executive Rakshit Shetty, who posted about it on LinkedIn.

Sergey Brin, Google’s co-founder, admitted that Google “for sure messed up” by underinvesting in AI and failing to seriously pursue the opportunity after releasing the research that led to today’s generative AI era.

Google was scared. Google didn’t take it seriously enough and failed to scale fast enough after the Transformer paper, Brin said. Also:

The full quote. Brin said:

Yes, but. Google still benefits from years of AI research and control over much of the technology that powers it, Brin said. That includes deep learning algorithms, years of neural network research and development, data-center capacity, and semiconductors.

Why we care. Brin’s comments help explain why Google’s AI-driven search changes have felt abrupt and inconsistent. After years of hesitation about shipping imperfect AI, Google is now moving fast (perhaps too fast?). The volatility we see in Google Search is collateral damage from that catch-up mode.

Where is AI going? Brin framed today’s AI race as hyper-competitive and fast-moving: “If you skip AI news for a month, you’re way behind.” When asked where AI is going, he said:

One more thing. Brin said he often uses Gemini Live in the car for back-and-forth conversations. The public version runs on an “ancient model,” Brin said, adding that a “way better version” is coming in a few weeks.

The video. Brin’s remarks came at a Stanford event marking the School of Engineering’s 100th anniversary. He discussed Google’s origins, its innovation culture, and the current AI landscape. Here’s the full video.

Google updated its JavaScript SEO documentation to clarify that when Googlebot encounters a noindex tag, it may skip rendering and JavaScript execution, so scripts meant to change or remove the tag may never run.

The post Google Warns Noindex Can Block JavaScript From Running appeared first on Search Engine Journal.

Prototype HDMI 2.2 hardware will be showcased at CES. The HDMI Licensing Administrator has confirmed that early HDMI 2.2 prototype hardware will be showcased at CES 2026. This will give the world its first look at the next-generation display technology. With HDMI 2.2, the HDMI standard’s maximum bandwidth will increase from 48 Gbps (HDMI 2.1) […]

The post Expect to see HDMI 2.2 in action at CES 2026 appeared first on OC3D.

Core Temp is a small, free utility that monitors CPU temperatures by reading data directly from each processor core. It delivers accurate, real-time readings, supports a wide range of CPUs, and runs with minimal overhead. If you want precise temperature monitoring, Core Temp delivers.

ResumaLive is a platform where video creators build swipeable, shareable profiles that introduce them in under 2 minutes.

Clients don't have time to dig through scattered links; they leave before they understand you. ResumaLive guides them through your identity, your credibility, your showreel, your personality, and how to reach you, like a movie trailer for your career. It doesn't replace your portfolio or social media. It gets your foot in the door, then they explore the rest.

The post AI’s Real Boom: Data Centers, ROI, and a Maturing IPO Market appeared first on StartupHub.ai.

“Every single AI company on the planet is saying if you give me more compute, I can make more revenue.” This assertion by Matt Witheiler, Head of Late-Stage Growth at Wellington Management, cuts directly to the core of the current artificial intelligence boom, framing the debate around an “AI bubble” not as a question of […]

The post Rockefeller’s Ruchir Sharma Declares AI Market in “Advanced Stages of a Bubble” appeared first on StartupHub.ai.

The current euphoria surrounding artificial intelligence has propelled the tech sector to unprecedented valuations, prompting seasoned financial analysts to question the sustainability of this growth. Ruchir Sharma, Chairman of Rockefeller International and Founder & CIO of Breakout Capital, offers a sobering perspective, asserting that the market is already in the “advanced stages of a bubble.” […]

The post Apple Engineers Squeeze Powerhouse Vision Models into a Single Layer for Hyper-Efficient Image Generation appeared first on StartupHub.ai.

Generative AI is getting a major speed and efficiency boost, thanks to a surprisingly simple new framework from Apple researchers. The paper, “One Layer Is Enough: Adapting Pretrained Visual Encoders for Image Generation,” introduces the Feature Auto-Encoder (FAE), a novel approach that dramatically slashes the complexity required to integrate massive, pre-trained visual encoders (like DINOv2 […]

Google has updated its JavaScript SEO basics documentation to clarify how Google’s crawler handles noindex tags in pages that use JavaScript. In short, if “you do want the page indexed, don’t use a noindex tag in the original page code,” Google wrote.

What is new. Google updated this section to read:

In the past, it read:

Why the change. Google explained, “While Google may be able to render a page that uses JavaScript, the behavior of this is not well defined and might change. If there’s a possibility that you do want the page indexed, don’t use a noindex tag in the original page code.”

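Why we care. It may be safer not to rely on JavaScript for important crawler directives, such as blocking Googlebot or other crawlers. If you want to ensure a search engine does not index a specific page, put the directive in the original page code rather than setting it with JavaScript. Likewise, if you do want a page indexed, do not ship a noindex tag and count on JavaScript to remove it.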
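One way to act on this is to check the original page code, before any rendering, for a noindex directive. The sketch below is a minimal illustration using only Python's standard library; the class and function names (`RobotsMetaParser`, `has_noindex`) and the sample HTML are made up for this example. It only inspects robots meta tags in the raw HTML, so it would not catch a noindex sent through an `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content", "")
            # A content value such as "noindex, nofollow" holds several directives.
            self.directives.extend(part.strip().lower() for part in content.split(","))


def has_noindex(raw_html: str) -> bool:
    """Return True if the unrendered HTML already carries a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(raw_html)
    return "noindex" in parser.directives


# Hypothetical page: the raw HTML ships noindex and relies on a script to remove it.
risky_page = (
    '<html><head><meta name="robots" content="noindex, nofollow"></head>'
    "<body><script>/* script that would remove the tag */</script></body></html>"
)
safe_page = "<html><head><title>Product</title></head><body></body></html>"

print(has_noindex(risky_page))
print(has_noindex(safe_page))
```

A page flagged here is one where the crawler may skip rendering, so any script intended to lift the noindex should not be relied on.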

The SEO industry is entering its most turbulent period yet.

Traffic is declining. AI is absorbing informational queries.

Social platforms now function as search engines. Google is shifting from a gateway to an answer engine.

The result is a sector running in circles – unsure what to measure, what to optimize, or even what SEO is meant to do.

Yet within this turbulence, something clear has emerged.

A single marketing metric that cuts through the noise and signals brand health and future demand.

A metric that marketers and SEOs can align around with confidence.

That metric is share of search.

The old model of being discovered by accident through classic search behavior is disappearing.

AI Overviews answer questions without sending traffic anywhere.

Meta is already rolling out its own AI to answer user queries.

TikTok and YouTube continue to grow as product discovery engines.

It is only a matter of time before LinkedIn becomes a business search engine powered by conversational AI.

We are witnessing a seismic shift. In moments like this, measurement becomes even more important.

Many SEO metrics are losing meaning, but one is rapidly gaining importance.

Share of search is a metric developed by James Hankins and Les Binet.

It is calculated by dividing a brand’s search volume by the total search volume for all brands in its category.

The result shows the proportion of category interest the brand commands.
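The calculation can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function name `share_of_search`, the brand names, and the volumes are all hypothetical, and real inputs would come from whichever search volume source you trust.

```python
def share_of_search(volumes: dict[str, float]) -> dict[str, float]:
    """Return each brand's share of total category search volume (0 to 1)."""
    total = sum(volumes.values())
    if total <= 0:
        raise ValueError("total category search volume must be positive")
    return {brand: volume / total for brand, volume in volumes.items()}


# Hypothetical monthly search volumes for three made-up brands in one category.
example_volumes = {"BrandA": 6000, "BrandB": 3000, "BrandC": 1000}
shares = share_of_search(example_volumes)

for brand, share in shares.items():
    print(f"{brand}: {share:.0%}")
# BrandA: 60%
# BrandB: 30%
# BrandC: 10%
```

The shares always sum to 1, so a gain for one brand is necessarily a loss for the rest of the category as defined. That makes the choice of which brands count as "the category" the main judgment call.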

The value is not in the calculation itself, but in what the metric correlates with.

Studies published by the Institute of Practitioners in Advertising (IPA) show that share of search correlates strongly with market share and future buying behavior.

As the IPA notes:

In simple terms, consumers search for brands they are considering, buying, or using.

That makes search behavior one of the clearest available signals of real demand.

Share of search was never designed to be perfect. It does not capture every nuance of how people find information across platforms.

It was built as a practical proxy for brand demand – and right now, practical measurement is exactly what the industry needs.

Dig deeper: Measuring what matters in a post-SEO world

Traffic as a measurement has become almost meaningless.

It has been easy to inflate, manipulate, and misunderstand.

Goodhart’s Law explains why. When a measure becomes a target, it stops being a good measure.

Traffic was treated as a target for years, and as a result, it stopped being a reliable indicator of anything meaningful.

Now traffic is falling – not because brands are doing anything wrong, but because AI is answering questions before users ever reach a website.

Ironically, this makes traffic more meaningful again, as much of the noise that once inflated it is disappearing.

The bigger advantage, however, belongs to share of search.

It cannot be inflated through content tactics or gamed by chasing trends. It reflects underlying consumer interest.

That is why share of search has become so significant.

It shows whether a brand is being searched for more or less than its competitors.

When share of search rises, brand demand is growing. When it falls, demand is weakening.

If an entire category collapses – as it did with air fryers once most consumers had already bought one – the metric also provides a clear signal that demand for the overall market is shrinking.
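Reading the metric this way means watching two numbers over time: the brand's share and the total category volume. The sketch below, with hypothetical function names and made-up volumes, shows how a brand can gain share while the category itself shrinks.

```python
def share_and_category_trend(periods, brand):
    """Return a (share, category_total) tuple for each period.

    `periods` is a list of dicts mapping brand name -> search volume,
    ordered from oldest to newest.
    """
    results = []
    for volumes in periods:
        total = sum(volumes.values())
        share = volumes.get(brand, 0) / total if total > 0 else 0.0
        results.append((share, total))
    return results


def describe_change(previous, current):
    """Label the direction of change between two values."""
    if current > previous:
        return "rising"
    if current < previous:
        return "falling"
    return "flat"


# Hypothetical volumes: the category shrinks while BrandA gains share.
periods = [
    {"BrandA": 4000, "BrandB": 6000},
    {"BrandA": 3000, "BrandB": 3000},
]
trend = share_and_category_trend(periods, "BrandA")
(first_share, first_total), (last_share, last_total) = trend[0], trend[-1]

share_direction = describe_change(first_share, last_share)
category_direction = describe_change(first_total, last_total)
print(f"BrandA share is {share_direction}; category volume is {category_direction}")
# BrandA share is rising; category volume is falling
```

Here BrandA's share moves from 40% to 50% even though its own volume dropped, which is why the category total belongs next to the share figure in any report.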

There is another advantage. Share of search is a multi-platform metric.

People no longer search in one place.

Product searches may begin on Amazon, TikTok, or Facebook.

Credibility checks often happen on YouTube. Long-form research may still take place on Google.

Discovery is fragmented, and behavior is fluid.

Share of search adapts to this reality. It is platform agnostic.

You can measure it using Google Trends, Ahrefs, Semrush, My Telescope, or any platform that provides reliable volume estimates.

You can track demand across Amazon, TikTok, YouTube, and emerging AI search interfaces.

Where the behavior happens matters less than the signal itself.

If people are looking for your brand, they are demonstrating intent.

This cross-platform visibility is critical because AI search sends little traffic to websites.

ChatGPT, Claude, and other LLMs present answers, snippets, and summaries, but rarely generate click-throughs.

Links are often buried, inaccessible, or accompanied by friction.

Instead, these systems trigger brand search.

Users encounter a brand in an AI response, then search for it when they want more information.

As a result, share of search becomes the tail-end signal of everything marketing does, including AI exposure.

When share of search rises, marketing is working. When it falls, it is not.

However, the metric needs a champion.

The SEO industry has spent years focused on two types of keywords:

That approach made sense when classic search was the dominant discovery channel. That world is disappearing.

Yet many SEOs continue to cling to outdated deliverables, such as structured data micro-optimization or churning out endless blog posts to influence hypothetical AI citations.

Citations are a distraction.

At best, they are a minor signal in LLM outputs.

At worst, they are a misleading metric that will not stand up to financial scrutiny.

When CFOs start questioning the value of SEO budgets, citations will not hold up as evidence of ROI.

Share of search will.

SEOs who embrace share of search position themselves not as keyword tacticians, but as strategic insights partners.

They become interpreters of demand who help:

This shift changes the role of SEO entirely.

Instead of being judged by how much content they produce, SEOs begin to be valued for how well they understand search behavior and the commercial impact of that behavior.

A well-structured share of search report tells a coherent story:

In the AI era, this narrative becomes essential.

Someone inside the organization must understand how people search, where they search, and what the numbers mean.

SEOs are naturally positioned to fill that role. You have the background and the expertise.

And as AI automates more mechanical SEO tasks, this progression becomes increasingly natural, because share of search requires interpretation.

Dig deeper: Why LLM perception drift will be 2026’s key SEO metric

Share of search does not have to be a single top-level number. It can be:

Consider the air fryer category.

Demand collapsed across the market once most consumers had already purchased one.

Within that collapse, however, individual models rose and fell based on their appeal.

Ninja’s latest model, for example, showed spikes and dips that revealed shifts in consumer interest long before sales data arrived.

Share of search acts as early detection for market movement.

SEOs who understand this level of nuance become indispensable. They can:

This is the future skill set – not chasing rankings, but interpreting behavior.

As AI becomes more integrated into search and site optimization, many mechanical SEO tasks will be increasingly automated.

The interpretation of marketing performance, however, cannot be fully automated.

Share of search requires human judgment.

It requires an understanding of context, seasonality, category dynamics, and brand strategy.

That role can and should belong to the SEO professional.

Some agencies may label this function an insights specialist or a data analyst.

Some organizations may house it within marketing.

But the people who understand search behavior most deeply are SEOs.

They are best positioned to interpret what the numbers mean and communicate those insights to leadership teams.

Leadership teams need to understand what is happening with their brand.

Marketing leaders are already discussing share of search, and it is beginning to appear in boardroom conversations.

It is quickly becoming a central indicator of brand strength.

In an AI-driven world where traffic is scarce and visibility is fragmented, the strategic imperative is clear.

Brands need to be searched for. Those that are searched for endure. Those that are not fade.

That is why share of search is not just another metric. It is becoming the metric.

SEOs who embrace it can elevate their role, influence, and strategic value at exactly the moment the industry needs it most.

The advice for SEOs is simple: Learn share of search.

To get started:

You will not become fluent in the metric without using it. Once you do, its applications become clear.

Share of search is the bridge that connects SEO to the broader world of brand.

Take the first step.

Sapphire wants AMD to let them “go nuts” with their GPU designs. Ed Crisler, Sapphire Technology’s North American PR Manager, has openly stated that he would like GPU manufacturers to give their partners more freedom when building their graphics cards. Sapphire would like to “go nuts” when building graphics cards, but tight rules limit what […]