Vibe coding platform Cursor releases first in-house LLM, Composer, promising 4X speed boost

The vibe coding tool Cursor, from startup Anysphere, has introduced Composer, its first in-house, proprietary coding large language model (LLM), as part of its Cursor 2.0 platform update.

Composer is designed to execute coding tasks quickly and accurately in production-scale environments, a new step for AI-assisted programming. Cursor's own engineering staff already use it in day-to-day development, which suggests a degree of maturity and stability.

According to Cursor, Composer completes most interactions in less than 30 seconds while maintaining a high level of reasoning ability across large and complex codebases.

The model is described as four times faster than similarly intelligent systems and is trained for “agentic” workflows—where autonomous coding agents plan, write, test, and review code collaboratively.

Previously, Cursor's "vibe coding" features (using AI to write or complete code from a user's natural-language instructions, even when that user has no development training) ran atop leading proprietary LLMs from other companies, such as OpenAI, Anthropic, Google, and xAI. Those models remain available to users.

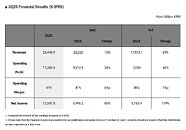

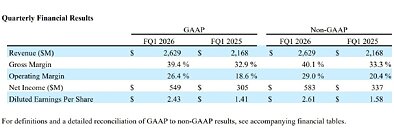

Benchmark Results

Composer’s capabilities are benchmarked using "Cursor Bench," an internal evaluation suite derived from real developer agent requests. The benchmark measures not just correctness, but also the model’s adherence to existing abstractions, style conventions, and engineering practices.

On this benchmark, Composer achieves frontier-level coding intelligence while generating at 250 tokens per second — about twice as fast as leading fast-inference models and four times faster than comparable frontier systems.
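The speed claims reduce to simple arithmetic. The sketch below takes the stated 250 tokens per second for Composer and back-calculates the baseline rates implied by "twice as fast" and "four times faster." Those two baseline rates are derived from the ratios rather than taken from figures Cursor published, and the 2,000-token edit size is a hypothetical example.

```python
# Composer's rate is the article's figure; the two baselines are
# back-calculated from the stated "2x" and "4x" ratios (assumptions,
# not published numbers).
COMPOSER_TPS = 250.0
IMPLIED_TPS = {
    "Composer": COMPOSER_TPS,
    "fast-inference baseline (implied)": COMPOSER_TPS / 2,
    "frontier baseline (implied)": COMPOSER_TPS / 4,
}


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a constant decode rate (decode only)."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    edit_tokens = 2_000  # hypothetical size of one multi-file edit
    for name, tps in IMPLIED_TPS.items():
        secs = generation_seconds(edit_tokens, tps)
        print(f"{name}: {tps:.1f} tok/s -> {secs:.1f} s")
```

At those rates, a 2,000-token edit streams in about 8 seconds on Composer versus 16 and 32 seconds for the implied baselines. The calculation covers decoding only; prompt processing, tool calls, and network latency all add to the under-30-second interaction times Cursor cites.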

Cursor’s published comparison groups models into several categories: “Best Open” (e.g., Qwen Coder, GLM 4.6), “Fast Frontier” (Haiku 4.5, Gemini 2.5 Flash), “Frontier 7/2025” (the strongest model available as of July 2025), and “Best Frontier” (including GPT-5 and Claude Sonnet 4.5). Composer's intelligence score is comparable to that of mid-frontier systems, and its generation speed is the highest of any tested class.

A Model Built with Reinforcement Learning and Mixture-of-Experts Architecture

Research scientist Sasha Rush of Cursor provided insight into the model’s development in posts on the social network X, describing Composer as a reinforcement-learned (RL) mixture-of-experts (MoE) model:

“We used RL to train a big MoE model to be really good at real-world coding, and also very fast.”
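For readers unfamiliar with the architecture, a mixture-of-experts layer uses a small router to send each input to only a few "expert" sub-networks, so only a fraction of the model's parameters run for any given token. The sketch below is a generic, textbook top-k router in plain Python. Cursor has not described Composer's router, expert count, or value of k in this announcement, so every name and parameter here is illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route vector x to the top-k experts and mix their outputs.

    gate_weights: one weight vector per expert (a linear router).
    experts: callables mapping a vector to a vector of the same length.
    Returns the mixed output and the indices of the experts that ran.
    """
    # Router logits: dot product of x with each expert's gate vector.
    logits = [sum(w * xi for w, xi in zip(g, x)) for g in gate_weights]
    # Keep only the k highest-scoring experts (sparse activation).
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits so the mixing weights sum to 1.
    weights = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top


if __name__ == "__main__":
    # Four toy experts that scale their input by 1, 2, 3, and 4.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    print(moe_forward([1.0, 0.5], gates, experts, k=2))
```

Because only the k selected experts are evaluated, an MoE model can hold many parameters while keeping per-token compute closer to that of a smaller dense model, which is one reason the design suits a speed-focused model.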

Rush explained that the team co-designed both Composer and the Cursor environment to allow the model to operate efficiently at production scale:

“Unlike other ML systems, you can’t abstract much from the full-scale system. We co-designed this project and Cursor together in order to allow running the agent at the necessary scale.”

Composer was trained on real software engineering tasks rather than static datasets. During training, the model operated inside full codebases using a suite of production tools—including file editing, semantic search, and terminal commands—to solve complex engineering problems. Each training iteration involved solving a concrete challenge, such as producing a code edit, drafting a plan, or generating a targeted explanation.

The reinforcement loop optimized both correctness and efficiency. Composer learned to make effective tool choices, use parallelism, and avoid unnecessary or speculative responses. Over time, the model developed emergent behaviors such as running unit tests, fixing linter errors, and performing multi-step code searches autonomously.

This design lets Composer work within the same runtime context as the end user, which keeps it closer to real-world coding conditions such as version control, dependency management, and iterative testing.
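The article says the reinforcement loop optimized both correctness and efficiency. One common way to express that trade-off is a scalar reward that credits passing tests and charges a small cost for each tool call and each second of wall-clock time. The sketch below is hypothetical: the `Episode` fields, the penalty weights, and the reward formula itself are assumptions for illustration, not Cursor's published objective.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """Summary of one hypothetical RL rollout on a coding task."""
    tests_passed: int
    tests_total: int
    tool_calls: int
    wall_clock_s: float


def reward(ep: Episode, call_penalty: float = 0.01, time_penalty: float = 0.005) -> float:
    """Hypothetical reward: correctness minus small efficiency costs.

    Correctness is the fraction of tests passed. Each tool call and each
    second of wall-clock time subtracts a small penalty, so between two
    equally correct rollouts the cheaper one scores higher.
    """
    correctness = ep.tests_passed / ep.tests_total if ep.tests_total else 0.0
    cost = call_penalty * ep.tool_calls + time_penalty * ep.wall_clock_s
    return correctness - cost


if __name__ == "__main__":
    focused = Episode(tests_passed=10, tests_total=10, tool_calls=5, wall_clock_s=20.0)
    meandering = Episode(tests_passed=10, tests_total=10, tool_calls=30, wall_clock_s=120.0)
    print(reward(focused), reward(meandering))
```

Under a reward shaped like this, two rollouts that pass the same tests are separated by cost, so issuing tool calls in parallel (which cuts wall-clock time) and skipping speculative calls both raise the score, consistent with the behaviors the article describes.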

From Prototype to Production

Composer’s development followed an earlier internal prototype known as Cheetah, which Cursor used to explore low-latency inference for coding tasks.

“Cheetah was the v0 of this model primarily to test speed,” Rush said on X. “Our metrics say it [Composer] is the same speed, but much, much smarter.”

Cheetah’s success at reducing latency helped Cursor identify speed as a key factor in developer trust and usability.

Composer maintains that responsiveness while significantly improving reasoning and task generalization.

Developers who used Cheetah during early testing noted that its speed changed how they worked. One user commented that it was “so fast that I can stay in the loop when working with it.”

Composer retains that speed but extends capability to multi-step coding, refactoring, and testing tasks.

Integration with Cursor 2.0

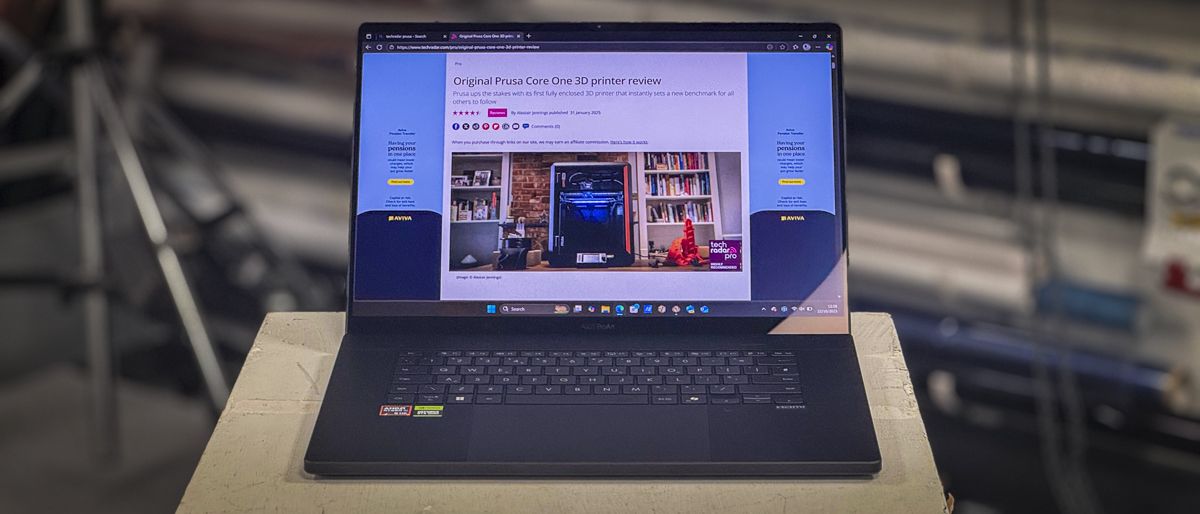

Composer is fully integrated into Cursor 2.0, a major update to the company’s agentic development environment.

The platform introduces a multi-agent interface, allowing up to eight agents to run in parallel, each in an isolated workspace using git worktrees or remote machines.

Within this system, Composer can serve as one or more of those agents, performing tasks independently or collaboratively. Developers can compare multiple results from concurrent agent runs and select the best output.
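The best-of-N workflow in Cursor 2.0, where several agents attempt the same task concurrently and the developer picks a result, can be sketched with Python's `asyncio`. This is a hypothetical model of the control flow only: `run_agent` is a stand-in that sleeps and returns a random score, whereas in Cursor each agent would work in its own git worktree or remote machine and a human would choose among the outputs. The limit of eight comes from the article; everything else is assumed.

```python
import asyncio
import random

MAX_PARALLEL_AGENTS = 8  # Cursor 2.0's stated limit on concurrent agents


async def run_agent(agent_id: int, task: str, limit: asyncio.Semaphore) -> dict:
    """Hypothetical stand-in for one agent run in an isolated workspace."""
    async with limit:  # wait for one of the eight slots to free up
        # A real agent would edit files in its own worktree here.
        await asyncio.sleep(random.uniform(0.01, 0.05))
        return {"agent": agent_id, "task": task, "score": random.random()}


async def best_of_n(task: str, n: int) -> dict:
    """Launch n runs of the same task and return the best-scoring one."""
    limit = asyncio.Semaphore(MAX_PARALLEL_AGENTS)
    results = await asyncio.gather(*(run_agent(i, task, limit) for i in range(n)))
    # In Cursor the developer compares candidates; here we take the top score.
    return max(results, key=lambda r: r["score"])


if __name__ == "__main__":
    print(asyncio.run(best_of_n("fix failing test", n=8)))
```

The semaphore caps concurrency at eight even if more runs are requested, so extra runs wait for a free slot rather than failing.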

Cursor 2.0 also includes supporting features that enhance Composer’s effectiveness:

In-Editor Browser (GA) – enables agents to run and test their code directly inside the IDE, forwarding DOM information to the model.

Improved Code Review – aggregates diffs across multiple files for faster inspection of model-generated changes.

Sandboxed Terminals (GA) – isolate agent-run shell commands for secure local execution.

Voice Mode – adds speech-to-text controls for initiating or managing agent sessions.

While these platform updates expand the overall Cursor experience, Composer is positioned as the technical core enabling fast, reliable agentic coding.

Infrastructure and Training Systems

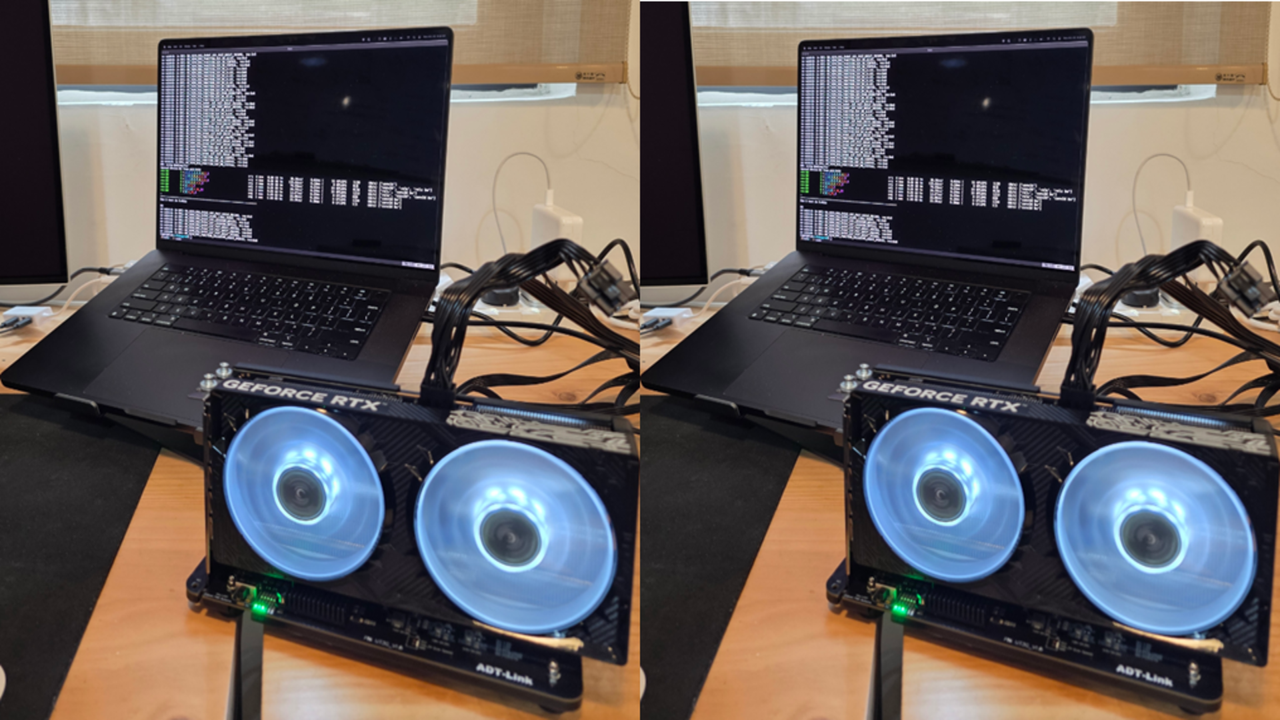

To train Composer at scale, Cursor built a custom reinforcement learning infrastructure combining PyTorch and Ray for asynchronous training across thousands of NVIDIA GPUs.

The team developed specialized MXFP8 MoE kernels and hybrid sharded data parallelism, enabling large-scale model updates with minimal communication overhead.

This configuration allows Cursor to train models natively at low precision without requiring post-training quantization, improving both inference speed and efficiency.
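MXFP8 is one of the microscaling formats defined in the Open Compute Project (OCP) MX specification: values are grouped into blocks of 32 that share a single power-of-two scale, and each element is stored as an 8-bit float (E4M3 here). The pure-Python sketch below simulates that number format, assuming the standard OCP parameters, to show why block scaling keeps low-precision values usable. It is not Cursor's GPU kernel, and it omits NaN/infinity handling and the range limits of the 8-bit shared exponent.

```python
import math

BLOCK = 32          # MX block size (assumed standard OCP value)
E4M3_MAX = 448.0    # largest finite FP8 E4M3 value
E4M3_EMAX = 8       # exponent of E4M3's largest normal binade
E4M3_MIN_EXP = -6   # smallest normal exponent of E4M3
MANTISSA_BITS = 3


def round_to_e4m3(v: float) -> float:
    """Round v to the nearest E4M3-representable value, saturating at 448."""
    if v == 0.0:
        return 0.0
    sign = -1.0 if v < 0 else 1.0
    a = abs(v)
    # Binade exponent, floored at the subnormal boundary.
    e = max(math.floor(math.log2(a)), E4M3_MIN_EXP)
    step = 2.0 ** (e - MANTISSA_BITS)  # spacing of representable values
    q = round(a / step) * step
    return sign * min(q, E4M3_MAX)


def quantize_block(xs):
    """Quantize one block: a shared power-of-two scale plus E4M3 elements."""
    amax = max(abs(x) for x in xs)
    if amax == 0.0:
        return 0, [0.0] * len(xs)
    # Shared exponent per the MX scheme: floor(log2(amax)) - emax_elem.
    scale_exp = math.floor(math.log2(amax)) - E4M3_EMAX
    scale = 2.0 ** scale_exp
    return scale_exp, [round_to_e4m3(x / scale) for x in xs]


def dequantize_block(scale_exp, codes):
    """Recover approximate values by reapplying the shared scale."""
    return [c * 2.0 ** scale_exp for c in codes]


def quantize(xs):
    """Split a flat list into 32-element blocks and quantize each one."""
    return [quantize_block(xs[i:i + BLOCK]) for i in range(0, len(xs), BLOCK)]


if __name__ == "__main__":
    data = [10 * math.sin(i) for i in range(64)]
    blocks = quantize(data)
    recon = [v for s, c in blocks for v in dequantize_block(s, c)]
    print(max(abs(x - r) for x, r in zip(data, recon)))
```

Because each block carries its own scale, blocks of very different magnitudes all land inside E4M3's representable range, which is part of what makes training natively in 8-bit formats workable without a separate post-training quantization step.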

Composer’s training relied on hundreds of thousands of concurrent sandboxed environments—each a self-contained coding workspace—running in the cloud. The company adapted its Background Agents infrastructure to schedule these virtual machines dynamically, supporting the bursty nature of large RL runs.

Enterprise Use

Composer’s performance improvements are supported by infrastructure-level changes across Cursor’s code intelligence stack.

The company has optimized its Language Server Protocol (LSP) integrations for faster diagnostics and navigation, especially in Python and TypeScript projects. These changes reduce latency when Composer interacts with large repositories or generates multi-file updates.
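The article does not detail Cursor's language-server changes, but the Language Server Protocol itself is public. Every LSP message is a JSON-RPC 2.0 payload preceded by a `Content-Length` header, and navigation features such as go-to-definition are requests like `textDocument/definition`. The sketch below builds one such request; the file URI and cursor position are hypothetical, and nothing here reflects Cursor's internal implementation.

```python
import json


def frame_lsp_message(payload: dict) -> bytes:
    """Encode a JSON-RPC payload with the LSP base-protocol header."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def definition_request(request_id: int, uri: str, line: int, character: int) -> bytes:
    """Build a textDocument/definition ("go to definition") request.

    line and character are zero-based, as the LSP specification requires.
    """
    return frame_lsp_message({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "textDocument/definition",
        "params": {
            "textDocument": {"uri": uri},
            "position": {"line": line, "character": character},
        },
    })


if __name__ == "__main__":
    # Hypothetical file and cursor position.
    msg = definition_request(1, "file:///repo/app/main.py", 41, 8)
    print(msg.decode("utf-8"))
```

An agent that issues many navigation and diagnostics requests against a large repository waits on the language server for each one, which is why latency improvements at this layer can matter for multi-file edits.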

Enterprise users gain administrative control over Composer and other agents through team rules, audit logs, and sandbox enforcement. Cursor’s Teams and Enterprise tiers also support pooled model usage, SAML/OIDC authentication, and analytics for monitoring agent performance across organizations.

Pricing for individual users ranges from Free (Hobby) to Ultra ($200/month) tiers, with expanded usage limits for Pro+ and Ultra subscribers.

Business pricing starts at $40 per user per month for Teams, with enterprise contracts offering custom usage and compliance options.

Composer’s Role in the Evolving AI Coding Landscape

Composer’s focus on speed, reinforcement learning, and integration with live coding workflows differentiates it from other AI development assistants such as GitHub Copilot or Replit’s Agent.

Rather than serving as a passive suggestion engine, Composer is designed for continuous, agent-driven collaboration, where multiple autonomous systems interact directly with a project’s codebase.

This model-level specialization—training AI to function within the real environment it will operate in—represents a significant step toward practical, autonomous software development. Composer is not trained only on text data or static code, but within a dynamic IDE that mirrors production conditions.

Rush described this approach as essential to achieving real-world reliability: the model learns not just how to generate code, but how to integrate, test, and improve it in context.

What It Means for Enterprise Devs and Vibe Coding

With Composer, Cursor is introducing more than a fast model—it’s deploying an AI system optimized for real-world use, built to operate inside the same tools developers already rely on.

The combination of reinforcement learning, mixture-of-experts design, and tight product integration gives Composer a practical edge in speed and responsiveness that sets it apart from general-purpose language models.

While Cursor 2.0 provides the infrastructure for multi-agent collaboration, Composer is the core innovation that makes those workflows viable.

Cursor positions it as a coding model built specifically for agentic, production-level coding, and it offers an early glimpse of what everyday programming could look like when human developers and autonomous models share the same workspace.
