Apple Plans To Open-Source An LLVM Tool To Security Harden Large C++ Codebases

AMD Updates Zen 3 / Zen 4 CPU Microcode For Systems Lacking Microcode Signing Fix

SUSE Linux Enterprise 16 Announced: "Enterprise Linux That Integrates Agentic AI"

(PR) AAEON Releases UP Xtreme ARL Edge

Despite its fanless operation, the UP Xtreme ARL Edge offers a choice of Intel Core Ultra (Series 2) processors, with default models offering the Intel Core Ultra 5 processor 225H, Intel Core Ultra 7 processor 255H, or the Intel Core Ultra 7 processor 265H, with the latter capable of utilizing the platform's enhanced integrated CPU, GPU, and NPU to provide up to 97 TOPS of AI performance.

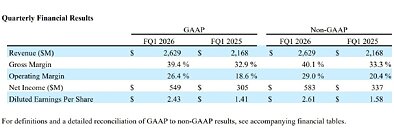

(PR) Electronic Arts Reports Q2 FY26 Results

Selected Operating Highlights and Metrics

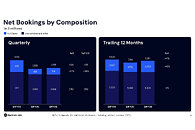

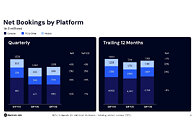

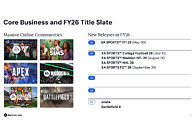

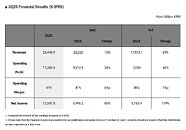

- Net bookings for the quarter totaled $1.818 billion, down 13% year-over-year, driven largely by the extraordinary release of College Football 25 in the prior year period.

- EA SPORTS Madden NFL 26 delivered net bookings growth year-over-year in the quarter, with players returning to the title.

- Apex Legends returned to net bookings growth on a year-over-year basis in Q2, growing double digits, as the team continues to deliver new experiences that drove deeper engagement.

- EA SPORTS FC 26 HD net bookings were up mid single digits year-over-year versus EA SPORTS FC 25 HD net bookings in the quarter, after adjusting for differences in deluxe edition content timing.

- The successful launches of skate. and Battlefield 6 underscore the strength of EA's long-term strategy to build community-driven experiences centered on creativity, connection, and long-term growth.

(PR) Logitech Announces Q2 Fiscal Year 2026 Results

- Sales were $1.19 billion, up 6 percent in US dollars and 4 percent in constant currency compared to Q2 of the prior year.

- GAAP gross margin was 43.4 percent, down 20 basis points compared to Q2 of the prior year. Non-GAAP gross margin was 43.8 percent, down 30 basis points compared to Q2 of the prior year.

- GAAP operating income was $191 million, up 19 percent compared to Q2 of the prior year. Non-GAAP operating income was $230 million, up 19 percent compared to Q2 of the prior year.

- GAAP earnings per share (EPS) was $1.15, up 21 percent compared to Q2 of the prior year. Non-GAAP EPS was $1.45, up 21 percent compared to Q2 of the prior year.

- Cash flow from operations was $229 million. The quarter-ending cash balance was $1.4 billion.

- The Company returned $340 million to shareholders through its annual dividend payment and share repurchases.

Turtle Beach Launches PC Edition of Victrix Pro BFG Reloaded Modular Controller

The Victrix Pro BFG Reloaded Wireless Modular Controller - PC Edition is built for serious PC gamers and is available in North America as a Best Buy retail exclusive and directly from Turtle Beach at www.turtlebeach.com. Globally, the controller is also available on turtlebeach.com and participating retailers for $189.99 / £159.99 / €179.99 MSRP.

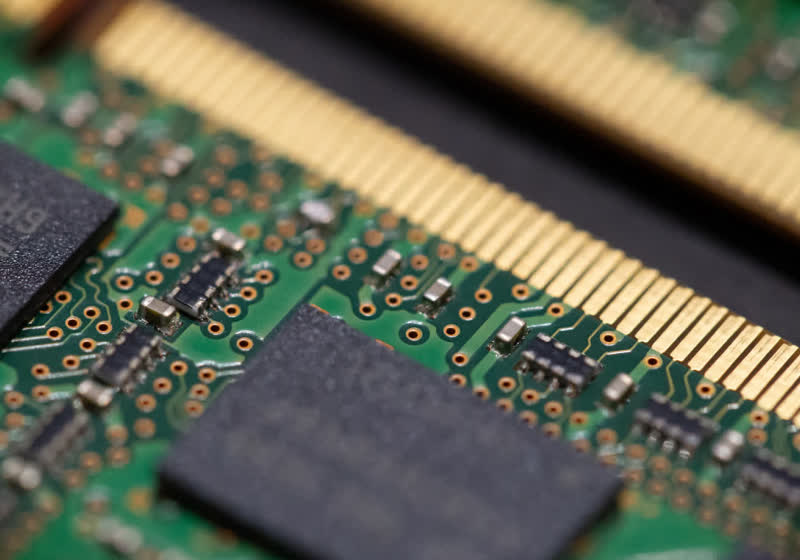

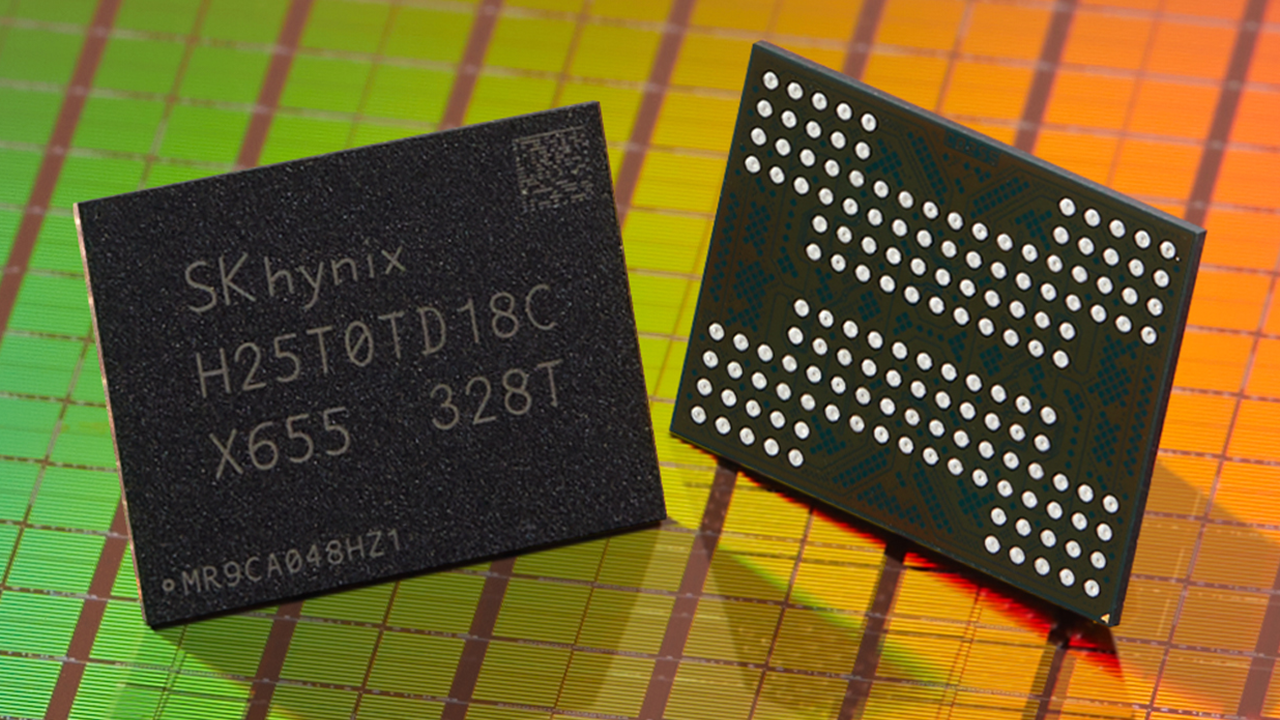

(PR) SK hynix Announces 3Q25 Financial Results

As demand across the memory segment has soared due to customers' expanding investments in AI infrastructure, SK hynix once again surpassed the record-high performance of the previous quarter due to increased sales of high value-added products such as 12-high HBM3E and DDR5 for servers. Driven by surging demand for AI servers, shipments of high-capacity DDR5s of 128 GB or more have more than doubled from the previous quarter. In NAND, the portion of AI server eSSD, which commands a price premium, expanded significantly as well. Building on this strong performance, the company's cash and cash equivalents at the end of the third quarter increased by 10.9 trillion won from the previous quarter, reaching 27.9 trillion won. Meanwhile, interest bearing debt stood at 24.1 trillion won, enabling the company to successfully transition to a net cash position of 3.8 trillion won.

Intel Nova Lake LGA1954 Socket Keeps Cooler Compatibility with LGA1851: Thermaltake

(PR) QNAP Launches All-Flash NASbook TBS-h574TX with Pre-installed Enterprise E1.S SSDs

"Speed and reliability are critical in media production. By integrating QNAP-validated E1.S SSDs into the TBS-h574TX, users no longer need to worry about drive compatibility. They can power on, configure, and get straight to editing." said Andy Chuang, Product Manager of QNAP, adding "This NASbook combines portable design, all-flash performance, and hot-swappable SSDs to offer a uniquely compact, powerful, and zero-downtime experience—so teams can focus on creativity anytime, anywhere with peace of mind."

(PR) Seagate Technology Reports Fiscal First Quarter 2026 Financial Results

"With clear visibility into sustained demand strength, we are ramping shipments of our areal density-leading Mozaic HAMR products, which are now qualified with five of the world's largest cloud customers. These products address customers' performance, durability and TCO needs at scale to continue supporting demand for existing use cases such as social media video platforms as well as growth driven by new AI applications. AI is transforming how content is being consumed and generated, increasing the value of data and storage and Seagate is well positioned for continued profitable growth," Mosley concluded.

(PR) Durabook Introduces Next-Generation R10 Copilot+ PC Rugged Tablet

Twinhead's CEO, Fred Kao, said: "Durabook devices are built to meet the needs of professionals who depend on powerful, reliable technology to stay productive in any environment. The compact and versatile R10 redefines the 10-inch rugged tablet category by providing AI-enhanced productivity supported by smart engineering for optimal usability. The R10's adaptive design and customisation capability make it the perfect partner for field service operatives working across a wide range of sectors, including industrial manufacturing, warehouse management, automotive diagnostics, public safety, utilities, transport and logistics."

Seattle startup TestSprite raises $6.7M to become ‘testing backbone’ for AI-generated code

In the era of AI-generated software, developers still need to make sure their code is clean. That’s where TestSprite wants to help.

The Seattle startup announced $6.7 million in seed funding to expand its platform that automatically tests and monitors code written by AI tools such as GitHub Copilot, Cursor, and Windsurf.

TestSprite’s autonomous agent integrates directly into development environments, running tests throughout the coding process rather than as a separate step after deployment.

“As AI writes more code, validation becomes the bottleneck,” said CEO Yunhao Jiao. “TestSprite solves that by making testing autonomous and continuous, matching AI speed.”

The platform can generate and run front- and back-end tests during development to ensure AI-written code works as expected, help AI IDEs (Integrated Development Environments) fix software based on TestSprite’s integration testing reports, and continuously update and rerun test cases to monitor deployed software for ongoing reliability.

Founded last year, TestSprite says its user base grew from 6,000 to 35,000 in three months, and revenue has doubled each month since launching its 2.0 version and new Model Context Protocol (MCP) integration. The company employs about 25 people.

Jiao is a former engineer at Amazon and a natural language processing researcher. He co-founded TestSprite with Rui Li, a former Google engineer.

Jiao said TestSprite doesn’t compete with AI coding copilots, but complements them by focusing on continuous validation and test generation. Developers can trigger tests using simple natural-language commands, such as “Test my payment-related features,” directly inside their IDEs.

The seed round was led by Bellevue, Wash.-based Trilogy Equity Partners, with participation from Techstars, Jinqiu Capital, MiraclePlus, Hat-trick Capital, Baidu Ventures, and EdgeCase Capital Partners. Total funding to date is about $8.1 million.

Crash Bandicoot Netflix series in the works – reports claim

It looks like Crash Bandicoot is the newest video game classic to move to Netflix

Netflix is the king of video game adaptations. In recent years, Netflix has adapted Castlevania, Tomb Raider, Splinter Cell, Sonic the Hedgehog, and even Cyberpunk 2077. Now, the streaming giant is reportedly developing a new animated series based on Crash […]

The post Crash Bandicoot Netflix series in the works – reports claim appeared first on OC3D.

Microsoft's CEO Satya Nadella says Bill Gates almost nuked Microsoft's partnership with OpenAI before it started — "You're going to burn this billion dollars"

This insane Hades-like action RPG inspired by the original God of War looks great on PC — absolutely criminal that it's not on Xbox

I Was Fired From My Own Startup. Here’s What Every Founder Should Know About Letting Go

No founder plans for the day they get fired from their own company.

You plan for funding rounds, product launches and exits, but not for the boardroom moment when everyone raises their hand, and you realize your journey inside the company is over.

It happened to me. I called that board meeting. I set the vote. We had to choose who would stay, me or my co-founder. The vote didn’t go my way.

In movies, this is where the music swells and the credits roll. Steve Jobs after John Sculley. Travis Kalanick after Bill Gurley. In real life, there’s no cinematic pause. No final scene. Just the quiet realization that everything you built now belongs to someone else.

What follows isn’t drama, either. It’s disorientation. And like most founders, I had no idea how to handle it.

Don’t fill the silence too fast

When it ended, I filled my calendar with aimless meetings. Five or six a day. Not because they had any real purpose, but because it felt strange not to be doing business. For more than 10 years, I’d never had a day when I didn’t have to think about work. A startup teaches you to fix things fast.

When you’re out, though, there’s nothing left to fix. Only yourself. Getting pushed out isn’t like missing a quarterly target. It’s like losing the story you’ve been telling yourself for years.

The hardest part is that you don’t know who to blame.

Investors? They were doing their job. Yourself? Every decision made sense in context. So the frustration lands on the person closest to you. Your co-founder. It’s not about logic. I would say it is more of a defense mechanism. It’s how the mind tries to make sense of loss.

Learn to see the pattern

For months, I kept asking: What did we do wrong? It took me a couple of years to see the pattern.

Later, working inside a venture fund helped me see the truth. I saw the same story play out again and again. Founders repeating the same emotional arc, as follows:

- Expectation of an M&A deal;

- Long wait for the deal;

- The deal collapses;

- The startup stalls;

- Expectations diverge; and then

- Resentment between co-founders

Every time, the same sequence. And when the dream fades, blame fills the gap.

The pattern itself is that the anger toward a co-founder is often a projection of disappointment from a failed deal. If that energy isn’t processed consciously, it finds its own way out, usually as anger. You can’t really be mad at yourself; you did everything right. The other side acted in their own interest. So it lands on the person next to you, your co-founder and your team, and for them, it’s you.

And that’s where I have a bit of a complaint about investors: they often see this dynamic coming and could at least warn founders about it.

Once I recognized the pattern, I stopped seeing my story as a failure. It was part of a cycle almost every founder goes through, only most don’t talk about it.

Trade strategy for emotional tools

Traditional business tools didn’t help. OKRs, planning sessions, strategy off-sites, none of it worked on the inner collapse that comes when your identity and your company split apart.

This led me to begin studying Gestalt therapy. It gave me the language to understand how situations like this actually work, their cycles, causes and effects, and how to think about them with the right awareness and perspective. One part of building startups isn’t about pivots or fundraising. It’s realizing how much of yourself you’ve tied to the story you’re telling the world.

The point is to first get conscious of your anger, and then let it out.

Acceptance comes in stages

Acceptance doesn’t show up all at once. It arrives in pieces.

For me, the first piece came when I watched another founder go through the same breakdown and recognized every stage.

The second came when my first startup was acquired. Not at the valuation I’d dreamed of, but enough to accept that it continued without me. The third came with my current company, Intch, which is built from calm, not from fear.

I no longer measure success by control, but by clarity.

What I’d tell a founder in that room

Here’s what I’d share now with another entrepreneur who finds themselves in the same situation.

- You’re losing a story, not your worth. Give yourself space to grieve it.

- Don’t let anger choose a target. Name the pattern instead.

- Find mirrors. Other founders are walking through the same steps.

- Business tools have limits. Emotional tools matter here.

- Acceptance comes in stages. You’ll recognize them when they arrive.

Founders are trained to manage everything except their own psychology. But startups are way more than capital and code. They run on the emotional architecture of the people who build them. And when that structure breaks, rebuilding it is the most important startup you’ll ever work on.

Yakov Filippenko is a seasoned entrepreneur with more than 10 years of experience in IT and technology, as well as in scaling businesses internationally. As a product manager at Yandex, he led a team that grew the product’s user base from 500,000 to 1.2 million and secured its entry into the international market. Subsequently, he co-founded SailPlay, which he scaled to 45 countries and eventually exited when it was acquired by Retail Rocket in 2018. In 2021, Filippenko launched Intch, an AI-powered platform connecting part-time professionals with flexible roles.

Illustration: Dom Guzman

Apple to bring OLED displays to more devices, including new water-resistant iPad mini and MacBooks

The stunning OLED display on the iPad Pro is one of its most compelling features, but the tablet's high price remains a barrier for those who don't need all its power and features.

Ninja Gaiden 4 – Achieve S Rank Easily in All Missions With This Trick

With its many accessibility options, Ninja Gaiden 4 is one of the most approachable entries in the series, allowing players to experience its high-speed action without letting the high challenge level get in the way of enjoyment. Those who want to truly appreciate the game, however, will wish to master many of its intricacies, put their skills to the test, and attempt to achieve an S rank for completing each of the story chapters. Here are some tips to help you understand the mission scoring system and what you should always strive to do to achieve such a high rank. […]

Read full article at https://wccftech.com/how-to/ninja-gaiden-4-achieve-s-rank-easily-in-all-missions-with-this-trick/

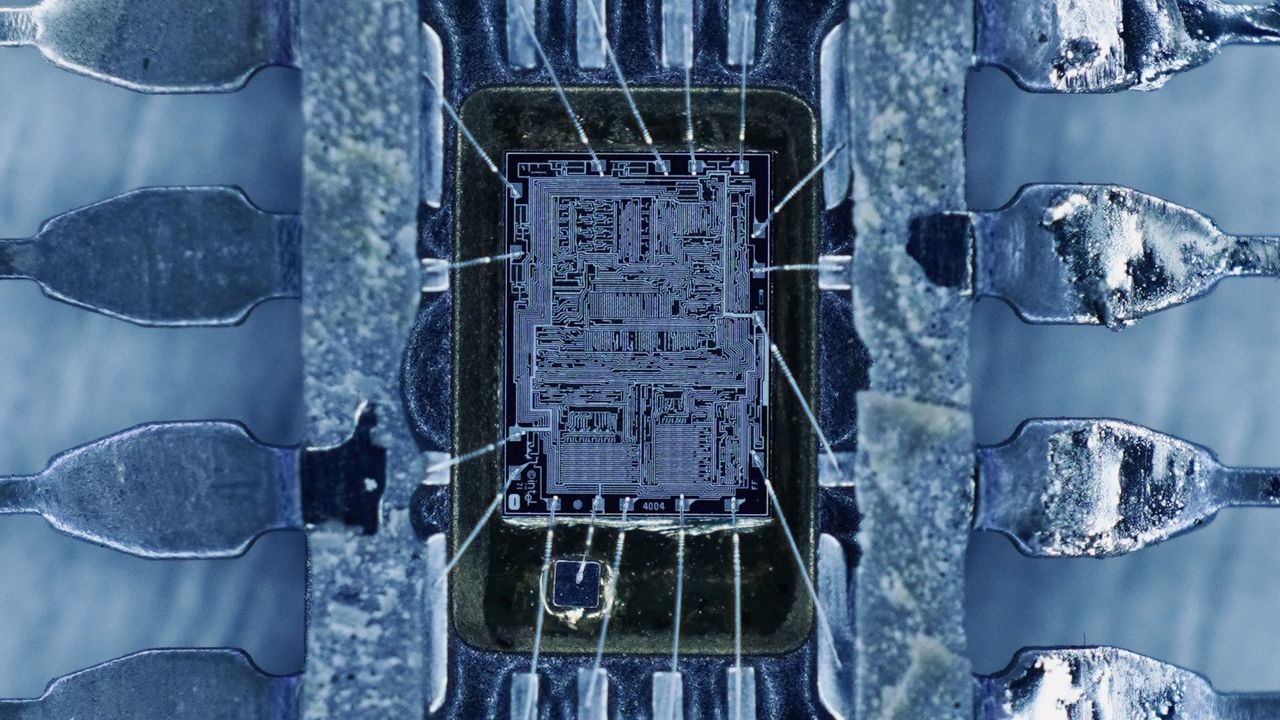

Apple Is ‘Not Yet In Talks With TSMC’ For Its A16 Process, As Its Current Focus Likely Lies In Developing Several 2nm Chipsets Next Year

The A20 and A20 Pro will be Apple’s first chipsets fabricated on TSMC’s 2nm process, pretty much highlighting the company’s propensity to jump to the newest manufacturing nodes as quickly as possible to have an advantage over the competition. On the same lithography, we expect the California-based giant to introduce a total of four chipsets, and after a couple of generations, Apple will switch to an even more advanced technology. The most obvious transition would be TSMC’s A16, or 1.6nm, but a report says neither company has entered talks for this node. Future Apple chipsets are expected to take advantage of […]

Read full article at https://wccftech.com/apple-not-yet-in-talks-with-tsmc-over-a16-process/

John Romero Says He’s Talking with Many Companies to Finish the Game That Was Being Funded by Microsoft

John Romero might not be a name that youngsters recognize, but he was a legend of the early days of the first-person shooter genre, co-founding id Software and making videogames like Wolfenstein 3D, Doom, Hexen, and Quake, to name a few. Nowadays, he makes smaller titles at Romero Games. The studio's most recent title was the Mafia-themed turn-based strategy game Empire of Sin, launched in late 2020 to a mixed reception. More recently, John Romero and his fellow developers signed a deal with Microsoft for their next project, but that deal went awry along with the latest Xbox layoffs. The […]

Read full article at https://wccftech.com/john-romero-talking-companies-finish-game-funded-by-microsoft/

Microsoft CEO: We’re Now the Largest Gaming Publisher and Want to Be Everywhere; The Real Competitor Is TikTok

Microsoft CEO Satya Nadella was featured in a live interview on TBPN (Technology Business Programming Network) discussing various topics, including the company's updated multiplatform strategy on the gaming front. Nadella pointed out that following the acquisition of Activision Blizzard, Microsoft is now the largest gaming publisher in terms of revenue. The goal, then, is to be everywhere the consumer is, just like with Office. The Microsoft CEO then interestingly pointed to TikTok, or, to be more accurate, short-form video as a whole, as the true competitor of gaming. Remember, the biggest gaming business is the Windows business. And of course, […]

Read full article at https://wccftech.com/microsoft-ceo-largest-gaming-publisher-want-to-be-everywhere-competition-tiktok/

ZipWik – ZipWik transforms static files into a single, shareable smart link

ZipWik lets you turn several documents—PDFs, slides, images or spreadsheets—into one simple document and a link you can share anywhere, from WhatsApp to Slack. You can control who sees it, set it to expire, and skip the hassle of sending large attachments. ZipWik also shows you what happens after you share: who viewed it, how long they spent, whether they downloaded or shared it, and which documents got the most attention. It’s an easy way to share files, stay in control, and actually understand how people engage with your content.

No more struggling with large attachments, documents you cannot control once shared, or formats that cannot be combined. ZipWik does it all. Try it today.

Active Exploits Hit Dassault and XWiki — CISA Confirms Critical Flaws Under Attack

Microsoft says ‘once AGI is declared by OpenAI’ it will be verified by independent experts – here’s why that’s a big deal

From infrastructure to intelligence: elevating data as a strategic advantage

When AI malware meets DDoS: a new challenge for online resilience

After a couple weeks of using the Dell Tower Plus (EBT2250), I’m as impressed as I am perplexed by it

How to watch England vs South Africa: Live stream ICC Women's Cricket World Cup 2025 semi-final for FREE

The best Samsung Galaxy Z Flip 7 and Flip 7 FE plans in Australia October 2025

Flipkart’s Super.money teams up with Kotak811 to make India’s free UPI payments pay

Creative Labs revives Sound Blaster brand with modular audio hub — Re:Imagine is a tactile Stream Deck competitor, aimed at creators and audiophiles

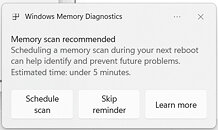

New Windows 11 feature aims to diagnose crashes — will check RAM after BSODs to look for problems

LaunchForge

AI launches your product: page, posts, PH draft all in one

Pomelli by Google Labs

Your copilot for on-brand content at any scale

ChetakAI

One workspace where AI, code, and teams work together

Parallax by Gradient

Host LLMs across devices sharing GPU to make your AI go brrr

Well Intelligence

When startups' financial data meets business intelligence

Hot100.ai

The weekly AI project chart judged by AI

Quartr AI chat

All investor relations material in one powerful AI chat.

Command Center

Understand, review, and refactor your code 20x faster

Dreamflow Mobile Preview

Preview and share live app updates across any device

SAST

Stop running commands one by one yourself

Spiral

The AI writing partner with taste

Animation Builder by Unicorns Club

Turn a boring LinkedIn post into a fun TikTok-style video

GhostForge

Build and deploy local AI agents offline

Mistral AI Studio

The Production AI Platform

GoMask.ai

Instant compliant test data for engineering teams

Zumie

Screen recordings made beautiful

Lightning

AI code editor for PyTorch development on GPU workspaces

VoiSistant

AI-powered speech-to-text, TTS, and translation Mac app

Zoer

Build full-stack apps with AI from Database first

GitHub Mission Control

Assign, steer, and track copilot coding agent tasks

SocialKit

Social Media Videos to Data API

Arm’s GitHub Copilot Agentic AI: Cloud Migration’s Next Leap

The post Arm’s GitHub Copilot Agentic AI: Cloud Migration’s Next Leap appeared first on StartupHub.ai.

Arm and GitHub's new Migration Assistant Custom Agent for GitHub Copilot Agentic AI fundamentally transforms cloud migration to Arm-based infrastructure.

RealWear Arc 3 launch: A lighter AR headset with natural language AI for industry

The post RealWear Arc 3 launch: A lighter AR headset with natural language AI for industry appeared first on StartupHub.ai.

The RealWear Arc 3 launch introduces a lightweight AR headset and a natural language voice OS, aiming to democratize industrial augmented reality.

BRKZ’s $30M finance facility aims to unblock Saudi construction

The post BRKZ’s $30M finance facility aims to unblock Saudi construction appeared first on StartupHub.ai.

BRKZ's $30M finance facility will provide crucial flexible payment options, accelerating Saudi Arabia's massive construction projects.

Vesence lands $9M to bring rigorous AI review to law firms

The post Vesence lands $9M to bring rigorous AI review to law firms appeared first on StartupHub.ai.

Vesence's $9M seed round fuels its mission to embed rigorous AI review agents directly into Microsoft Office, promising law firms unparalleled precision and compliance.

Cartesia’s Sonic-3 TTS laughs and emotes at human speed

The post Cartesia’s Sonic-3 TTS laughs and emotes at human speed appeared first on StartupHub.ai.

Cartesia's Sonic-3 uses a State Space Model architecture to deliver emotionally expressive AI speech, including laughter, at speeds faster than a human can respond.

Salesforce Agentic AI: The Enterprise Evolution

The post Salesforce Agentic AI: The Enterprise Evolution appeared first on StartupHub.ai.

Salesforce's 'Agentic AI' strategy, featuring Agentforce 360 and Forward Deployed Engineers, aims to fundamentally redefine enterprise operations with unified, workflow-spanning AI agents.

Polygraf AI Closes $9.5M Funding Round to Scale Its Secure AI Solutions for Enterprise Defense and Intelligence

The post Polygraf AI Closes $9.5M Funding Round to Scale Its Secure AI Solutions for Enterprise Defense and Intelligence appeared first on StartupHub.ai.

Polygraf AI, based in Austin, Texas, announced the closing of its $9.5M seed round, with participation from DOMiNO Ventures, Allegis Capital, Alumni Ventures, DataPower VC and previous investors, to accelerate its mission to bring clarity and trust to enterprise AI. With the new $9.5M seed round, Polygraf AI is building the next generation of enterprise AI […]

NVIDIA BlueField-4 Powers AI Factory OS

The post NVIDIA BlueField-4 Powers AI Factory OS appeared first on StartupHub.ai.

NVIDIA BlueField-4 is poised to redefine AI infrastructure, offering unprecedented compute power, 800Gb/s throughput, and advanced security for gigascale AI factories.

Primaa raises €7M to advance AI cancer diagnostics

The post Primaa raises €7M to advance AI cancer diagnostics appeared first on StartupHub.ai.

Biotech company Primaa raised €7 million to expand its AI software that helps pathologists improve the speed and accuracy of cancer diagnostics.

TechSpot PC Buying Guide: Five Great Builds for Every Budget

GPU prices have finally cooled, DDR5 has spiked, and AMD's new Threadripper opens fresh pro power. Here are five builds for every budget - mix and match to fit your needs.

FitResume – AI Resume Generator, Job Tailoring and many more

Fitresume.app is a free, AI‑driven resume builder that generates ATS‑friendly resumes, lets you choose polished templates, and custom‑tailors every resume to the exact wording of each job description. Ready to download as a PDF in seconds. Beyond writing, it tracks every application, follow‑up, and interview while visualizing your entire pipeline with an interactive Sankey diagram, so you stay organised and land offers faster.

How to watch Australia v India T20 series 2025: live streams, schedule, teams

Withings U-Scan brought my urine analysis home and I need a drink – of water

CEO of spyware maker Memento Labs confirms one of its government customers was caught using its malware

LG Uplus is latest South Korean telco to confirm cybersecurity incident

NVIDIA Tackles AI Energy Consumption with Gigawatt Blueprint

The post NVIDIA Tackles AI Energy Consumption with Gigawatt Blueprint appeared first on StartupHub.ai.

NVIDIA's Omniverse DSX blueprint provides a standardized, energy-efficient framework for designing and operating gigawatt-scale AI factories, directly addressing AI energy consumption.

NVIDIA Open Models Broaden AI Innovation Access

The post NVIDIA Open Models Broaden AI Innovation Access appeared first on StartupHub.ai.

NVIDIA's new open models and data across language, robotics, and biology are set to democratize advanced AI and accelerate innovation.

OpenAI Restructuring and Amazon’s AI Paradox Reshape Tech Landscape

The post OpenAI Restructuring and Amazon’s AI Paradox Reshape Tech Landscape appeared first on StartupHub.ai.

The capital requirements and strategic maneuvering defining the artificial intelligence frontier are starkly evident in recent developments, from OpenAI’s finalized restructuring to Amazon’s contrasting AI investment strategy. CNBC’s Morgan Brennan recently spoke with CNBC Business News reporter MacKenzie Sigalos, delving into the implications of these pivotal shifts for the broader tech ecosystem and workforce. Their […]

NVIDIA AI Factory Government: Securing Public Sector AI

The post NVIDIA AI Factory Government: Securing Public Sector AI appeared first on StartupHub.ai.

NVIDIA's AI Factory for Government provides a secure, full-stack AI reference design, enabling federal agencies to deploy mission-critical AI with stringent security.

NVIDIA IGX Thor Powers Real-Time AI at the Industrial Edge

The post NVIDIA IGX Thor Powers Real-Time AI at the Industrial Edge appeared first on StartupHub.ai.

NVIDIA IGX Thor is an industrial-grade platform delivering 8x the AI compute of its predecessor, enabling real-time physical AI for critical industrial and medical applications.

Battlefield Launches RedSec Free-to-Play Battle Royale Spin-Off With up to 100-Player Matches

Battlefield RedSec calls for, at minimum, an Intel Core i5-8400 or AMD Ryzen 5 2600, an AMD Radeon RX 5600 XT, NVIDIA GeForce RTX 2060, or Intel Arc A380, and 16 GB of RAM. As is increasingly the case with multiplayer games these days, RedSec also requires that players have TPM 2.0 and Secure Boot enabled, effectively locking out any potential gamers on Linux, including the Valve Steam Deck. RedSec also gives creators access to Portal, the updated Battlefield UGC and custom game creator, replete with all the vehicles and weapons from Battlefield RedSec.

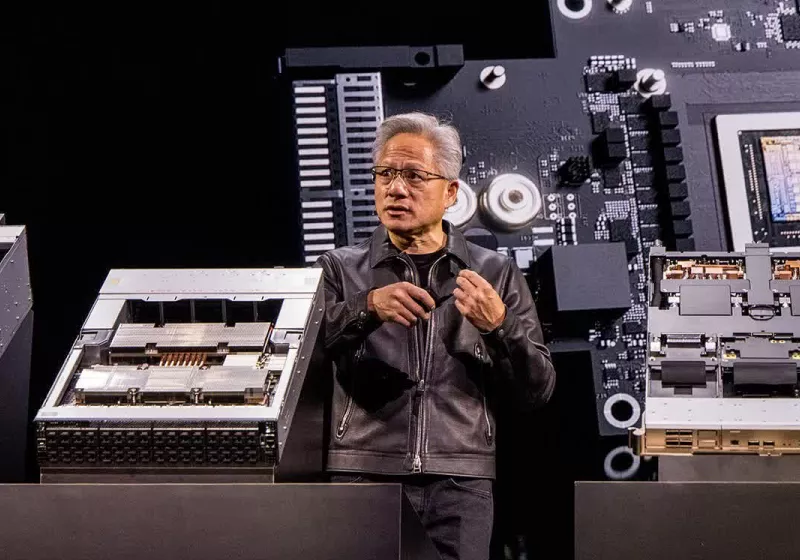

Nvidia, one of the few companies profiting from AI, claims there is no AI bubble

Nvidia CEO Jensen Huang recently told Bloomberg he doesn't believe the recent AI boom has become a bubble, breaking with months of warnings from across the business world. Speaking at the company's GTC conference in Washington DC, he characterized the current moment as a virtuous cycle.

NVIDIA Could Receive Approval for Blackwell AI Chip in China, Marking a Major “Bonus” for Its Market Share in the Region

NVIDIA's market position in China could see a significant boost following the Trump-Xi meeting, as President Trump hints at discussing 'Blackwell' AI chips for Beijing.

NVIDIA's Blackwell AI Chip Will Be a Topic of Discussion Under Trump-Xi Meeting, With a Potential Breakthrough in Sight

The Chinese market has been a significant challenge for Jensen Huang since the US-China trade hostilities began, and now it seems there might be relief on the horizon for NVIDIA. According to a report by Bloomberg, President Trump has suggested discussing NVIDIA's Blackwell AI chip with his Chinese counterpart, indicating that chips could […]

Read full article at https://wccftech.com/nvidia-could-receive-approval-for-blackwell-ai-chip-in-china/

The DM's Ark – Streamlined DM tool for campaign notes, Discord logs, session tracking

YouTube Provides Opt Out for Live-Stream Leaderboards

YouTube's also changing the name of the 'copyright' tab in YouTube Studio.

Suno just replaced its free AI music model with v4.5-all – and it’s faster, richer, and way more expressive

Nintendo Switch 2 Pro Controller vs 8BitDo Ultimate 2: which is the best value this Black Friday?

New Prime Video movie Hedda made me ask myself some difficult questions, but none as tricky as what I asked its cast

‘We had a list of over 100 titles’: Pluribus creator Vince Gilligan admits his new Apple TV series was incredibly difficult to name

Worried about AI taking your job? Don't worry, Sam Altman says some disappearing roles were never ‘real work’ to begin with

Netflix’s Crash Bandicoot show could be Naughty Dog’s next big adaptation – if it doesn’t get canceled again

Tata Motors confirms it fixed security flaws, which exposed company and customer data

Here are the 5 Startup Battlefield finalists at TechCrunch Disrupt 2025

‘Silicon Valley’ star Thomas Middleditch makes a surprise appearance at TechCrunch Disrupt 2025

Filing: Amazon cuts more than 2,300 jobs in Washington state as part of broader layoffs

Amazon will lay off 2,303 corporate employees in Washington state, primarily in its Seattle and Bellevue offices, according to a filing with the state Employment Security Department that provides the first geographic breakdown of the company’s 14,000 global job cuts.

A detailed list included with the state filing shows a wide array of impacted roles, including software engineers, program managers, product managers, and designers, as well as a significant number of recruiters and human resources staff.

Senior and principal-level roles are among those being cut, aligning with a company-wide push to use the cutbacks to help reduce bureaucracy and operate more efficiently.

Amazon announced the cuts Tuesday morning, part of a larger push by CEO Andy Jassy to streamline the company. Jassy had previously told Amazon employees in June that efficiency gains from AI would likely lead to a smaller corporate workforce over time.

In a memo from HR chief Beth Galetti, the company signaled that further cutbacks will continue into 2026. Reuters reported Monday that the number of layoffs could ultimately total as many as 30,000 people, which is still possible as the layoffs continue into next year.

Lilly Blackwell Drug Discovery: A New Era

The post Lilly Blackwell Drug Discovery: A New Era appeared first on StartupHub.ai.

Lilly's new AI factory, powered by NVIDIA Blackwell GPUs, marks a pivotal shift in drug discovery, promising unprecedented speed and scale in pharmaceutical innovation.

NVIDIA AI-RAN: Open Source Rewrites Wireless Innovation

The post NVIDIA AI-RAN: Open Source Rewrites Wireless Innovation appeared first on StartupHub.ai.

NVIDIA's open-sourcing of Aerial software, coupled with DGX Spark, is democratizing AI-native 5G and 6G development, accelerating wireless innovation at an unprecedented pace.

Celestica CEO Mionis: AI is a Must-Have Utility, Not a Bubble

The post Celestica CEO Mionis: AI is a Must-Have Utility, Not a Bubble appeared first on StartupHub.ai.

“AI right now used to be a nice-to-have. It’s a utility, it’s a must-have.” This declarative statement from Celestica President and CEO Rob Mionis on CNBC’s Mad Money with Jim Cramer cuts directly to the core of the current technological zeitgeist. It frames artificial intelligence not as a speculative fad or a nascent technology still […]

Google AI Studio Unleashes “Vibe Coding” Revolutionizing AI Agent Development

The post Google AI Studio Unleashes “Vibe Coding” Revolutionizing AI Agent Development appeared first on StartupHub.ai.

The era of complex, code-heavy AI development is rapidly giving way to an intuitive, natural language-driven approach, dramatically democratizing creation. At the forefront of this shift is Google AI Studio, a platform designed to accelerate the journey from concept to fully functional AI application in minutes. This new “vibe coding” experience, showcased by Logan Kilpatrick, […]

AI Fusion Energy: NVIDIA, GA Unveil Digital Twin Breakthrough

The post AI Fusion Energy: NVIDIA, GA Unveil Digital Twin Breakthrough appeared first on StartupHub.ai.

NVIDIA and General Atomics have launched an AI-enabled digital twin for fusion reactors, dramatically accelerating the path to commercial AI fusion energy.

CyberRidge raises $26M to advance optical security for fiber networks

The post CyberRidge raises $26M to advance optical security for fiber networks appeared first on StartupHub.ai.

CyberRidge launched with $26 million to develop its optical security system that protects data in fiber-optic networks from eavesdropping.

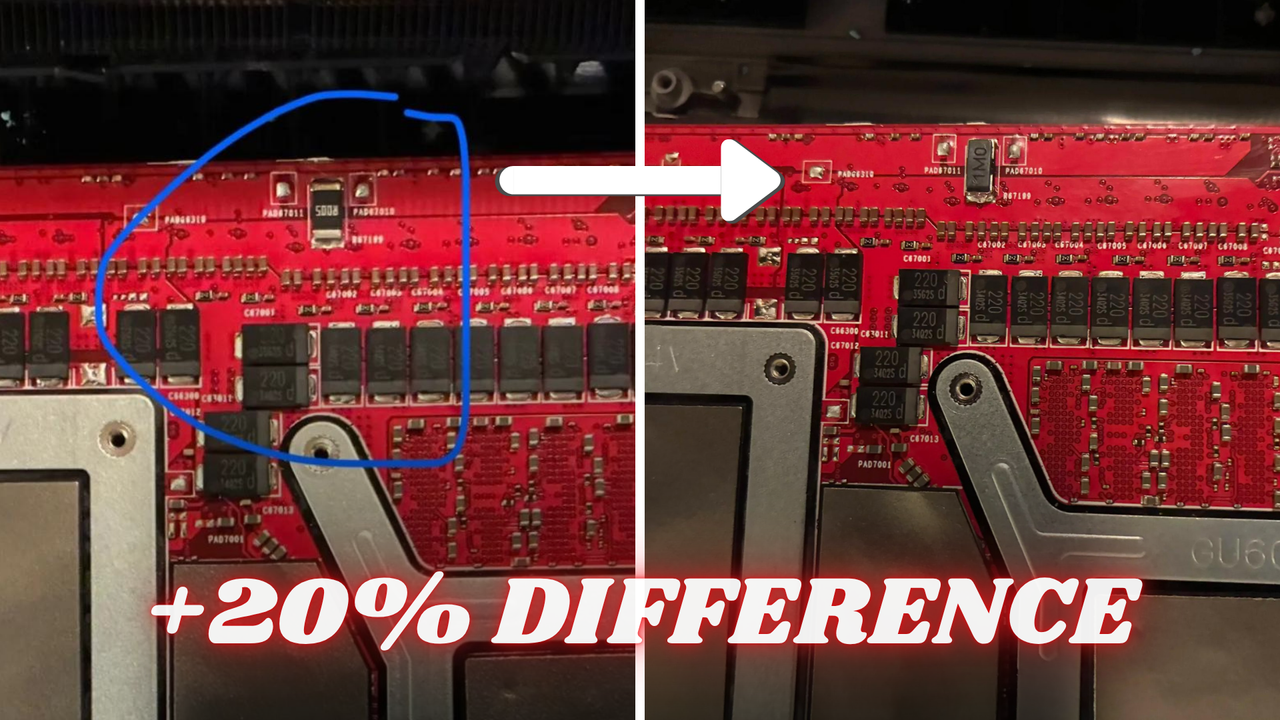

MSI GeForce RTX 5050 Shadow 2X Review: Budget Blackwell Gaming Tested

MSI GeForce RTX 5050 Shadow 2X 8GB: $249

The GeForce RTX 5050 is the most affordable graphics card based on NVIDIA's Blackwell architecture that targets mainstream gamers, and is the first RTX XX50 series GPU for the desktop in years.

- Blackwell Architecture

- Power Efficient

- Smaller Form Factor

- Cool And Quiet

- DLSS4 And RTX Neural Rendering

- Latest...

Arm Ethos NPU Accelerator Driver Expected To Be Merged For Linux 6.19

Red Hat Affirms Plans To Distribute NVIDIA CUDA Across RHEL, Red Hat AI & OpenShift

TrueNAS 25.10 Released With NVMe-oF Support, OpenZFS Performance Improvements

Microsoft begins rolling out new Start menu on Windows 11 — here's everything you should know

Battlefield 6 REDSEC reaches all-new player counts — Is it the Warzone crusher?

Battlefield 6 REDSEC's mixed Steam reviews ignite a firestorm — "This is not why I bought Battlefield 6."

ID@Xbox showcase recap: Every indie game announcement from the Fall 2025 event

Microsoft CEO Satya Nadella is "looking forward," to the next Xbox — "We want to do innovative work on the system side, on both console and PC."

Battlefield 6 Redsec looks like the battle royale game that might finally win me over — but one missing feature is really killing my buzz

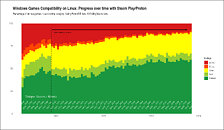

Nine out of ten Windows games can now run on Linux, data shows

Aggregated data from the Linux community highlights the significant progress made in gaming on Linux. Compatibility between titles originally designed for Windows and the wider free and open source ecosystem (FOSS) built on the Linux kernel is now at an all time high, although the pace of improvement has slowed.

Apple Bringing Water Resistance To iPad mini, OLED Displays To The MacBook Air, iPad Air, And iPad mini

Apple is finally getting ready to introduce OLED displays in a wider range of its products. However, don't expect a broad-based debut soon, especially given the Cupertino giant's tendency to move at a glacial pace when introducing new technology.

Apple is gearing up to introduce OLED displays in the future versions of the MacBook Air, iPad Air, and iPad mini, with water resistance added for good measure

Bloomberg's legendary tipster, Mark Gurman, is out with another scoop today, focusing on a much-anticipated display overhaul for the MacBook Air, iPad Air, and iPad mini, all of which are now slated to […]

Read full article at https://wccftech.com/apple-is-testing-oled-displays-for-the-macbook-air-ipad-air-and-ipad-mini-with-water-resistance-also-in-the-offing/

IBM's open source Granite 4.0 Nano AI models are small enough to run locally directly in your browser

In an industry where model size is often seen as a proxy for intelligence, IBM is charting a different course — one that values efficiency over enormity, and accessibility over abstraction.

The 114-year-old tech giant's four new Granite 4.0 Nano models, released today, range from just 350 million to 1.5 billion parameters, a fraction of the size of their server-bound cousins from the likes of OpenAI, Anthropic, and Google.

These models are designed to be highly accessible: the 350M variants can run comfortably on a modern laptop CPU with 8–16GB of RAM, while the 1.5B models typically require a GPU with at least 6–8GB of VRAM for smooth performance — or sufficient system RAM and swap for CPU-only inference. This makes them well-suited for developers building applications on consumer hardware or at the edge, without relying on cloud compute.
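As a rough illustration of why models of this size fit on consumer hardware, the sketch below estimates the memory needed just to hold a model's weights. The bytes-per-parameter table and the function name are illustrative assumptions, not IBM figures, and the estimate leaves out the KV cache, activations, and runtime overhead, which is why practical requirements such as those quoted above are higher.

```python
# Back-of-the-envelope estimate of weight storage only.
# Assumption: common storage widths per parameter; real runtimes add
# overhead for the KV cache, activations, and framework buffers.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params, precision="fp16"):
    """Return the approximate size of the weights in gigabytes (10^9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Parameter counts taken from the article: 350M and roughly 1.5B.
    for name, params in [("350M", 350e6), ("1.5B", 1.5e9)]:
        for precision in ("fp16", "int8", "int4"):
            size = weight_memory_gb(params, precision)
            print(f"{name} at {precision}: about {size:.2f} GB of weights")
```

Under these assumptions, weight storage scales linearly with both parameter count and precision, so quantizing to a narrower format is the main lever for fitting a model into a given amount of RAM or VRAM.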

In fact, the smallest ones can even run locally in your own web browser, as Joshua Lochner aka Xenova, creator of Transformers.js and a machine learning engineer at Hugging Face, wrote on the social network X.

All the Granite 4.0 Nano models are released under the Apache 2.0 license — perfect for use by researchers and enterprise or indie developers, even for commercial usage.

They are natively compatible with llama.cpp, vLLM, and MLX and are certified under ISO 42001 for responsible AI development — a standard IBM helped pioneer.

But in this case, small doesn't mean less capable — it might just mean smarter design.

These compact models are built not for data centers, but for edge devices, laptops, and local inference, where compute is scarce and latency matters.

And despite their small size, the Nano models are showing benchmark results that rival or even exceed the performance of larger models in the same category.

The release is a signal that a new AI frontier is rapidly forming — one not dominated by sheer scale, but by strategic scaling.

What Exactly Did IBM Release?

The Granite 4.0 Nano family includes four open-source models now available on Hugging Face:

Granite-4.0-H-1B (~1.5B parameters) – Hybrid-SSM architecture

Granite-4.0-H-350M (~350M parameters) – Hybrid-SSM architecture

Granite-4.0-1B – Transformer-based variant, parameter count closer to 2B

Granite-4.0-350M – Transformer-based variant

The H-series models, Granite-4.0-H-1B and H-350M, use a hybrid state space model (SSM) architecture that combines efficiency with strong performance, ideal for low-latency edge environments.

Meanwhile, the standard transformer variants, Granite-4.0-1B and 350M, offer broader compatibility with tools like llama.cpp and are intended for use cases where the hybrid architecture isn’t yet supported.

In practice, the transformer 1B model is closer to 2B parameters, but aligns performance-wise with its hybrid sibling, offering developers flexibility based on their runtime constraints.

“The hybrid variant is a true 1B model. However, the non-hybrid variant is closer to 2B, but we opted to keep the naming aligned to the hybrid variant to make the connection easily visible,” explained Emma, Product Marketing lead for Granite, during a Reddit "Ask Me Anything" (AMA) session on r/LocalLLaMA.

A Competitive Class of Small Models

IBM is entering a crowded and rapidly evolving market of small language models (SLMs), competing with offerings like Qwen3, Google's Gemma, LiquidAI’s LFM2, and even Mistral’s dense models in the sub-2B parameter space.

While OpenAI and Anthropic focus on models that require clusters of GPUs and sophisticated inference optimization, IBM’s Nano family is aimed squarely at developers who want to run performant LLMs on local or constrained hardware.

In benchmark testing, IBM’s new models consistently top the charts in their class. According to data shared on X by David Cox, VP of AI Models at IBM Research:

On IFEval (instruction following), Granite-4.0-H-1B scored 78.5, outperforming Qwen3-1.7B (73.1) and other 1–2B models.

On BFCLv3 (function/tool calling), Granite-4.0-1B led with a score of 54.8, the highest in its size class.

On safety benchmarks (SALAD and AttaQ), the Granite models scored over 90%, surpassing similarly sized competitors.

Overall, the Granite-4.0-1B achieved a leading average benchmark score of 68.3% across general knowledge, math, code, and safety domains.

This performance is especially significant given the hardware constraints these models are designed for.

They require less memory, run faster on CPUs or mobile devices, and don’t need cloud infrastructure or GPU acceleration to deliver usable results.

Why Model Size Still Matters — But Not Like It Used To

In the early wave of LLMs, bigger meant better — more parameters translated to better generalization, deeper reasoning, and richer output.

But as transformer research matured, it became clear that architecture, training quality, and task-specific tuning could allow smaller models to punch well above their weight class.

IBM is banking on this evolution. By releasing open, small models that are competitive in real-world tasks, the company is offering an alternative to the monolithic AI APIs that dominate today’s application stack.

In fact, the Nano models address three increasingly important needs:

Deployment flexibility — they run anywhere, from mobile to microservers.

Inference privacy — users can keep data local with no need to call out to cloud APIs.

Openness and auditability — source code and model weights are publicly available under an open license.

Community Response and Roadmap Signals

IBM’s Granite team didn’t just launch the models and walk away — they took to Reddit’s open source community r/LocalLLaMA to engage directly with developers.

In an AMA-style thread, Emma (Product Marketing, Granite) answered technical questions, addressed concerns about naming conventions, and dropped hints about what’s next.

Notable confirmations from the thread:

A larger Granite 4.0 model is currently in training

Reasoning-focused models ("thinking counterparts") are in the pipeline

IBM will release fine-tuning recipes and a full training paper soon

More tooling and platform compatibility is on the roadmap

Users responded enthusiastically to the models’ capabilities, especially in instruction-following and structured response tasks. One commenter summed it up:

“This is big if true for a 1B model — if quality is nice and it gives consistent outputs. Function-calling tasks, multilingual dialog, FIM completions… this could be a real workhorse.”

Another user remarked:

“The Granite Tiny is already my go-to for web search in LM Studio — better than some Qwen models. Tempted to give Nano a shot.”

Background: IBM Granite and the Enterprise AI Race

IBM’s push into large language models began in earnest in late 2023 with the debut of the Granite foundation model family, starting with models like Granite.13b.instruct and Granite.13b.chat. Released for use within its Watsonx platform, these initial decoder-only models signaled IBM’s ambition to build enterprise-grade AI systems that prioritize transparency, efficiency, and performance. The company open-sourced select Granite code models under the Apache 2.0 license in mid-2024, laying the groundwork for broader adoption and developer experimentation.

The real inflection point came with Granite 3.0 in October 2024 — a fully open-source suite of general-purpose and domain-specialized models ranging from 1B to 8B parameters. These models emphasized efficiency over brute scale, offering capabilities like longer context windows, instruction tuning, and integrated guardrails. IBM positioned Granite 3.0 as a direct competitor to Meta’s Llama, Alibaba’s Qwen, and Google's Gemma — but with a uniquely enterprise-first lens. Later versions, including Granite 3.1 and Granite 3.2, introduced even more enterprise-friendly innovations: embedded hallucination detection, time-series forecasting, document vision models, and conditional reasoning toggles.

The Granite 4.0 family, launched in October 2025, represents IBM’s most technically ambitious release yet. It introduces a hybrid architecture that blends transformer and Mamba-2 layers — aiming to combine the contextual precision of attention mechanisms with the memory efficiency of state-space models. This design allows IBM to significantly reduce memory and latency costs for inference, making Granite models viable on smaller hardware while still outperforming peers in instruction-following and function-calling tasks. The launch also includes ISO 42001 certification, cryptographic model signing, and distribution across platforms like Hugging Face, Docker, LM Studio, Ollama, and watsonx.ai.

Across all iterations, IBM’s focus has been clear: build trustworthy, efficient, and legally unambiguous AI models for enterprise use cases. With a permissive Apache 2.0 license, public benchmarks, and an emphasis on governance, the Granite initiative not only responds to rising concerns over proprietary black-box models but also offers a Western-aligned open alternative to the rapid progress from teams like Alibaba’s Qwen. In doing so, Granite positions IBM as a leading voice in what may be the next phase of open-weight, production-ready AI.

A Shift Toward Scalable Efficiency

In the end, IBM’s release of Granite 4.0 Nano models reflects a strategic shift in LLM development: from chasing parameter count records to optimizing usability, openness, and deployment reach.

By combining competitive performance, responsible development practices, and deep engagement with the open-source community, IBM is positioning Granite as not just a family of models — but a platform for building the next generation of lightweight, trustworthy AI systems.

For developers and researchers looking for performance without overhead, the Nano release offers a compelling signal: you don’t need 70 billion parameters to build something powerful — just the right ones.

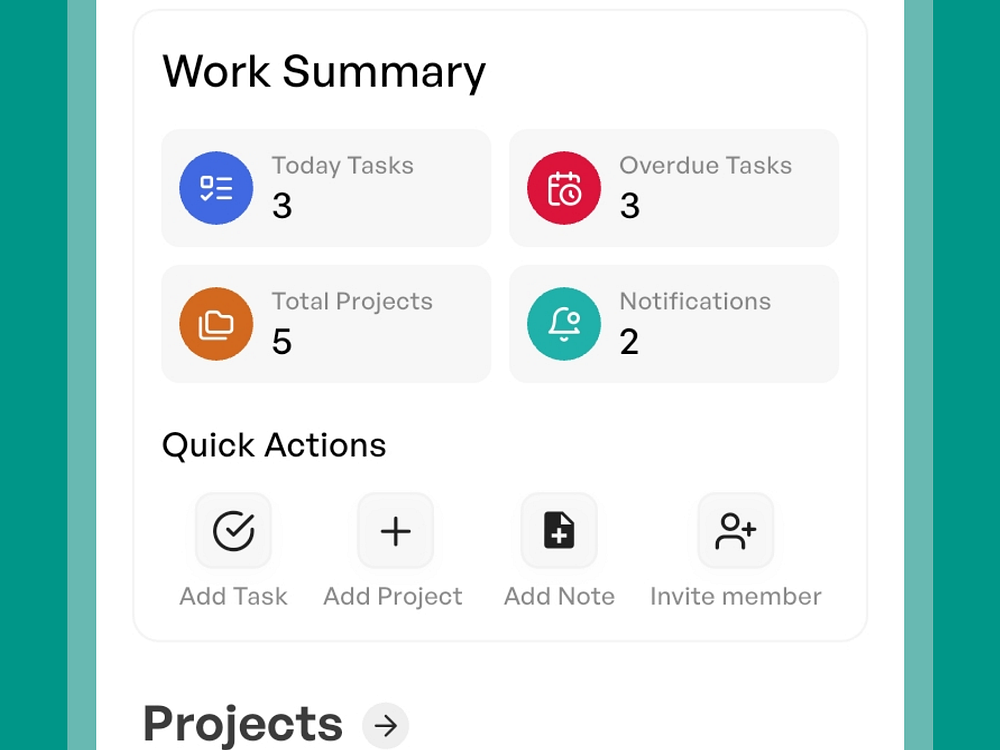

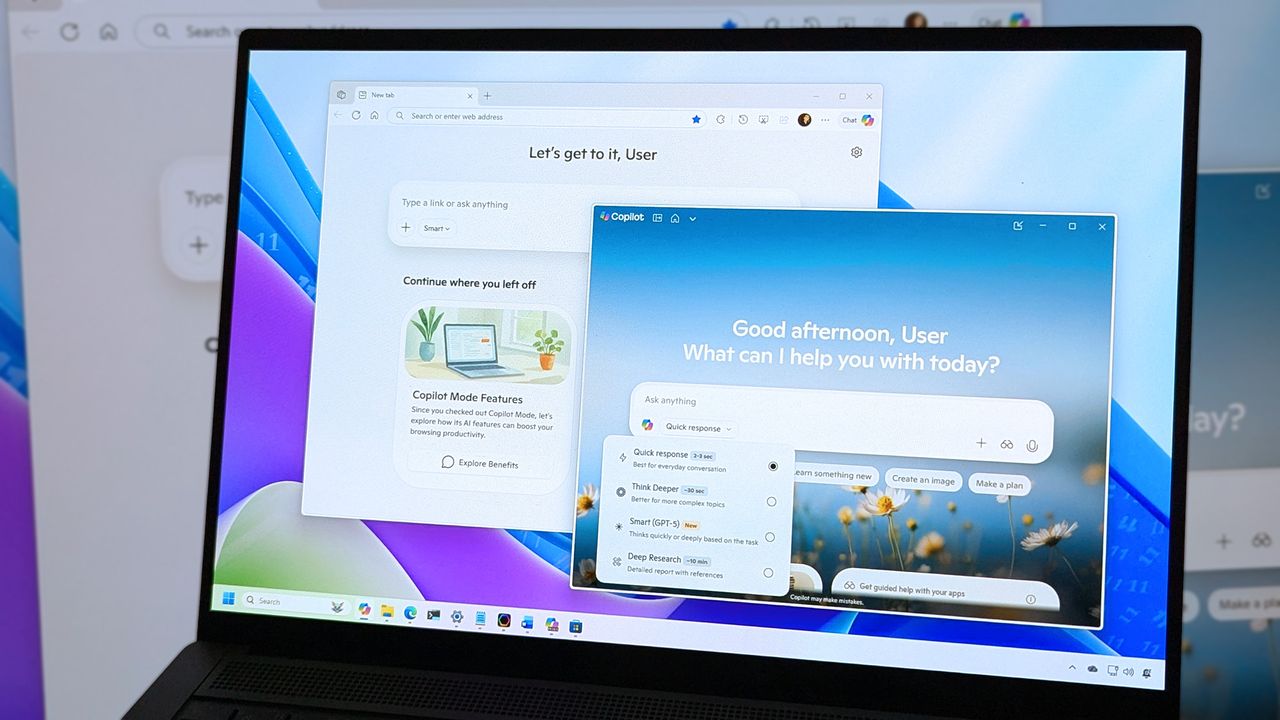

Microsoft’s Copilot can now build apps and automate your job — here’s how it works

Microsoft is launching a significant expansion of its Copilot AI assistant on Tuesday, introducing tools that let employees build applications, automate workflows, and create specialized AI agents using only conversational prompts — no coding required.

The new capabilities, called App Builder and Workflows, mark Microsoft's most aggressive attempt yet to merge artificial intelligence with software development, enabling the estimated 100 million Microsoft 365 users to create business tools as easily as they currently draft emails or build spreadsheets.

"We really believe that a main part of an AI-forward employee, not just developers, will be to create agents, workflows and apps," Charles Lamanna, Microsoft's president of business and industry Copilot, said in an interview with VentureBeat. "Part of the job will be to build and create these things."

The announcement comes as Microsoft deepens its commitment to AI-powered productivity tools while navigating a complex partnership with OpenAI, the creator of the underlying technology that powers Copilot. On the same day, OpenAI completed its restructuring into a for-profit entity, with Microsoft receiving a 27% ownership stake valued at approximately $135 billion.

How natural language prompts now create fully functional business applications

The new features transform Copilot from a conversational assistant into what Microsoft envisions as a comprehensive development environment accessible to non-technical workers. Users can now describe an application they need — such as a project tracker with dashboards and task assignments — and Copilot will generate a working app complete with a database backend, user interface, and security controls.

"If you're right inside of Copilot, you can now have a conversation to build an application complete with a backing database and a security model," Lamanna explained. "You can make edit requests and update requests and change requests so you can tune the app to get exactly the experience you want before you share it with other users."

The App Builder stores data in Microsoft Lists, the company's lightweight database system, and allows users to share finished applications via a simple link—similar to sharing a document. The Workflows agent, meanwhile, automates routine tasks across Microsoft's ecosystem of products, including Outlook, Teams, SharePoint, and Planner, by converting natural language descriptions into automated processes.

A third component, a simplified version of Microsoft's Copilot Studio agent-building platform, lets users create specialized AI assistants tailored to specific tasks or knowledge domains, drawing from SharePoint documents, meeting transcripts, emails, and external systems.

All three capabilities are included in the existing $30-per-month Microsoft 365 Copilot subscription at no additional cost — a pricing decision Lamanna characterized as consistent with Microsoft's historical approach of bundling significant value into its productivity suite.

"That's what Microsoft always does. We try to do a huge amount of value at a low price," he said. "If you go look at Office, you think about Excel, Word, PowerPoint, Exchange, all that for like eight bucks a month. That's a pretty good deal."

Why Microsoft's nine-year bet on low-code development is finally paying off

The new tools represent the culmination of a nine-year effort by Microsoft to democratize software development through its Power Platform — a collection of low-code and no-code development tools that has grown to 56 million monthly active users, according to figures the company disclosed in recent earnings reports.

Lamanna, who has led the Power Platform initiative since its inception, said the integration into Copilot marks a fundamental shift in how these capabilities reach users. Rather than requiring workers to visit a separate website or learn a specialized interface, the development tools now exist within the same conversational window they already use for AI-assisted tasks.

"One of the big things that we're excited about is Copilot — that's a tool for literally every office worker," Lamanna said. "Every office worker, just like they research data, they analyze data, they reason over topics, they also will be creating apps, agents and workflows."

The integration offers significant technical advantages, he argued. Because Copilot already indexes a user's Microsoft 365 content — emails, documents, meetings, and organizational data — it can incorporate that context into the applications and workflows it builds. If a user asks for "an app for Project Spartan," Copilot can draw from existing communications to understand what that project entails and suggest relevant features.

"If you go to those other tools, they have no idea what the heck Project Spartan is," Lamanna said, referencing competing low-code platforms from companies like Google, Salesforce, and ServiceNow. "But if you do it inside of Copilot and inside of the App Builder, it's able to draw from all that information and context."

Microsoft claims the apps created through these tools are "full-stack applications" with proper databases secured through the same identity systems used across its enterprise products — distinguishing them from simpler front-end tools offered by competitors. The company also emphasized that its existing governance, security, and data loss prevention policies automatically apply to apps and workflows created through Copilot.

Where professional developers still matter in an AI-powered workplace

While Microsoft positions the new capabilities as accessible to all office workers, Lamanna was careful to delineate where professional developers remain essential. His dividing line centers on whether a system interacts with parties outside the organization.

"Anything that leaves the boundaries of your company warrants developer involvement," he said. "If you want to build an agent and put it on your website, you should have developers involved. Or if you want to build an automation which interfaces directly with your customers, or an app or a website which interfaces directly with your customers, you want professionals involved."

The reasoning is risk-based: external-facing systems carry greater potential for data breaches, security vulnerabilities, or business errors. "You don't want people getting refunds they shouldn't," Lamanna noted.

For internal use cases — approval workflows, project tracking, team dashboards — Microsoft believes the new tools can handle the majority of needs without IT department involvement. But the company has built "no cliffs," in Lamanna's terminology, allowing users to migrate simple apps to more sophisticated platforms as needs grow.

Apps created in the conversational App Builder can be opened in Power Apps, Microsoft's full development environment, where they can be connected to Dataverse, the company's enterprise database, or extended with custom code. Similarly, simple workflows can graduate to the full Power Automate platform, and basic agents can be enhanced in the complete Copilot Studio.

"We have this mantra called no cliffs," Lamanna said. "If your app gets too complicated for the App Builder, you can always edit and open it in Power Apps. You can jump over to the richer experience, and if you're really sophisticated, you can even go from those experiences into Azure."

This architecture addresses a problem that has plagued previous generations of easy-to-use development tools: users who outgrow the simplified environment often must rebuild from scratch on professional platforms. "People really do not like easy-to-use development tools if I have to throw everything away and start over," Lamanna said.

What happens when every employee can build apps without IT approval

The democratization of software development raises questions about governance, maintenance, and organizational complexity — issues Microsoft has worked to address through administrative controls.

IT administrators can view all applications, workflows, and agents created within their organization through a centralized inventory in the Microsoft 365 admin center. They can reassign ownership, disable access at the group level, or "promote" particularly useful employee-created apps to officially supported status.

"We have a bunch of customers who have this approach where it's like, let 1,000 apps bloom, and then the best ones, I go upgrade and make them IT-governed or central," Lamanna said.

The system also includes provisions for when employees leave. Apps and workflows remain accessible for 60 days, during which managers can claim ownership — similar to how OneDrive files are handled when someone departs.

Lamanna argued that most employee-created apps don't warrant significant IT oversight. "It's just not worth inspecting an app that John, Susie, and Bob use to do their job," he said. "It should concern itself with the app that ends up being used by 2,000 people, and that will pop up in that dashboard."

Still, the proliferation of employee-created applications could create challenges. Users have expressed frustration with Microsoft's increasing emphasis on AI features across its products, with some giving the Microsoft 365 mobile app one-star ratings after a recent update prioritized Copilot over traditional file access.

The tools also arrive as enterprises grapple with "shadow IT" — unsanctioned software and systems that employees adopt without official approval. While Microsoft's governance controls aim to provide visibility, the ease of creating new applications could accelerate the pace at which these systems multiply.

The ambitious plan to turn 500 million workers into software builders

Microsoft's ambitions for the technology extend far beyond incremental productivity gains. Lamanna envisions a fundamental transformation of what it means to be an office worker — one where building software becomes as routine as creating spreadsheets.

"Just like how 20 years ago you put on your resume that you could use pivot tables in Excel, people are going to start saying that they can use App Builder and workflow agents, even if they're just in the finance department or the sales department," he said.

The numbers he's targeting are staggering. With 56 million people already using Power Platform, Lamanna believes the integration into Copilot could eventually reach 500 million builders. "Early days still, but I think it's certainly encouraging," he said.

The features are currently available only to customers in Microsoft's Frontier Program — an early access initiative for Microsoft 365 Copilot subscribers. The company has not disclosed how many organizations participate in the program or when the tools will reach general availability.

The announcement fits within Microsoft's larger strategy of embedding AI capabilities throughout its product portfolio, driven by its partnership with OpenAI. Under the restructured agreement announced Tuesday, Microsoft will have access to OpenAI's technology through 2032, including models that achieve artificial general intelligence (AGI) — though such systems do not yet exist. Microsoft has also begun integrating Copilot into its new companion apps for Windows 11, which provide quick access to contacts, files, and calendar information.

The aggressive integration of AI features across Microsoft's ecosystem has drawn mixed reactions. While enterprise customers have shown interest in productivity gains, the rapid pace of change and ubiquity of AI prompts have frustrated some users who prefer traditional workflows.

For Microsoft, however, the calculation is clear: if even a fraction of its user base begins creating applications and automations, it would represent a massive expansion of the effective software development workforce — and further entrench customers in Microsoft's ecosystem. The company is betting that the same natural language interface that made ChatGPT accessible to millions can finally unlock the decades-old promise of empowering everyday workers to build their own tools.

The App Builder and Workflows agents are available starting today through the Microsoft 365 Copilot Agent Store for Frontier Program participants.

Whether that future arrives depends not just on the technology's capabilities, but on a more fundamental question: Do millions of office workers actually want to become part-time software developers? Microsoft is about to find out if the answer is yes — or if some jobs are better left to the professionals.

TikTok Adds AI Assistance Tools for Creators

Also, expanded revenue opportunities via creator subscriptions.

Sole Purpose: Unless Collective and Under Armour’s Plastic-Free Sportswear

PepsiCo’s First New Logo in 25 Years Marks Its Shift to a ‘Branded House’

Liquid Death’s Benoit Vatere on Creative Risk and Retail Collaboration

Why Ugg Keeps Inviting Fans Into Its Warm, Fuzzy Universe IRL

Google DeepMind’s BlockRank could reshape how AI ranks information

Google DeepMind researchers have developed BlockRank, a new method for ranking and retrieving information more efficiently in large language models (LLMs).

- BlockRank is detailed in a new research paper, Scalable In-Context Ranking with Generative Models.

- BlockRank is designed to solve a challenge called In-context Ranking (ICR), or the process of having a model read a query and multiple documents at once to decide which ones matter most.

- As far as we know, BlockRank is not being used by Google (e.g., Search, Gemini, AI Mode, AI Overviews) right now – but it could be used at some point in the future.

What BlockRank changes. ICR is expensive and slow. Models use a process called “attention,” where every word compares itself to every other word. Because that cost grows quadratically with input length, ranking hundreds of documents at once quickly becomes expensive for LLMs.

How BlockRank works. BlockRank restructures how an LLM “pays attention” to text. Instead of every document attending to every other document, each one focuses only on itself and the shared instructions.

- The model’s query section has access to all the documents, allowing it to compare them and decide which one best answers the question.

- This transforms the model’s attention cost from quadratic (very slow) to linear (much faster) growth.
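The attention structure described above can be sketched as a simple visibility rule over token positions. This is a minimal illustration based only on the description in this article, not the paper's implementation: the function names, the segment labels, and the prompt layout (instructions, then documents, then query) are assumptions, and the segment lengths are made up.

```python
def segment_ids(instr_len, doc_lens, query_len):
    """Label each position: 'I' for instructions, a document index, or 'Q' for the query."""
    ids = ["I"] * instr_len
    for doc_index, length in enumerate(doc_lens):
        ids += [doc_index] * length
    ids += ["Q"] * query_len
    return ids


def may_attend(ids, i, j):
    """True if token i may look at token j under the block structure."""
    if j > i:                 # causal: never look ahead
        return False
    if ids[i] == "Q":         # query tokens see everything before them
        return True
    if ids[j] == "I":         # every token sees the shared instructions
        return True
    return ids[i] == ids[j]   # otherwise a token sees only its own segment


def count_pairs(instr_len, doc_lens, query_len, blocked=True):
    """Count (i, j) pairs that attention has to score."""
    ids = segment_ids(instr_len, doc_lens, query_len)
    n = len(ids)
    if not blocked:
        return n * (n + 1) // 2  # ordinary causal attention
    return sum(may_attend(ids, i, j) for i in range(n) for j in range(i + 1))


if __name__ == "__main__":
    # Hypothetical sizes: 2 instruction tokens, 3-token documents, 2 query tokens.
    for num_docs in (1, 2, 4, 8):
        docs = [3] * num_docs
        full = count_pairs(2, docs, 2, blocked=False)
        block = count_pairs(2, docs, 2, blocked=True)
        print(f"{num_docs} docs: full causal={full}, block-structured={block}")
```

In this toy setup each added document contributes a fixed number of extra pairs under the block rule, while the full causal count grows with the square of the total length, which is the quadratic-to-linear shift the article describes.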

By the numbers. In experiments using Mistral-7B, Google’s team found that BlockRank:

- Ran 4.7× faster than standard fine-tuned models when ranking 100 documents.

- Scaled smoothly to 500 documents (about 100,000 tokens) in roughly one second.

- Matched or beat leading listwise rankers like RankZephyr and FIRST on benchmarks such as MSMARCO, Natural Questions (NQ), and BEIR.

Why we care. BlockRank could change how future AI-driven retrieval and ranking systems work to reward user intent, clarity, and relevance. That means (in theory) clear, focused content that aligns with why a person is searching (not just what they type) should increasingly win.

What’s next. Google/DeepMind researchers are continuing to redefine what it means to “rank” information in the age of generative AI. The future of search is advancing fast – and it’s fascinating to watch it evolve in real time.

Red flag or red herring? Here's how AI’s power, water and carbon footprints stack up on a global scale

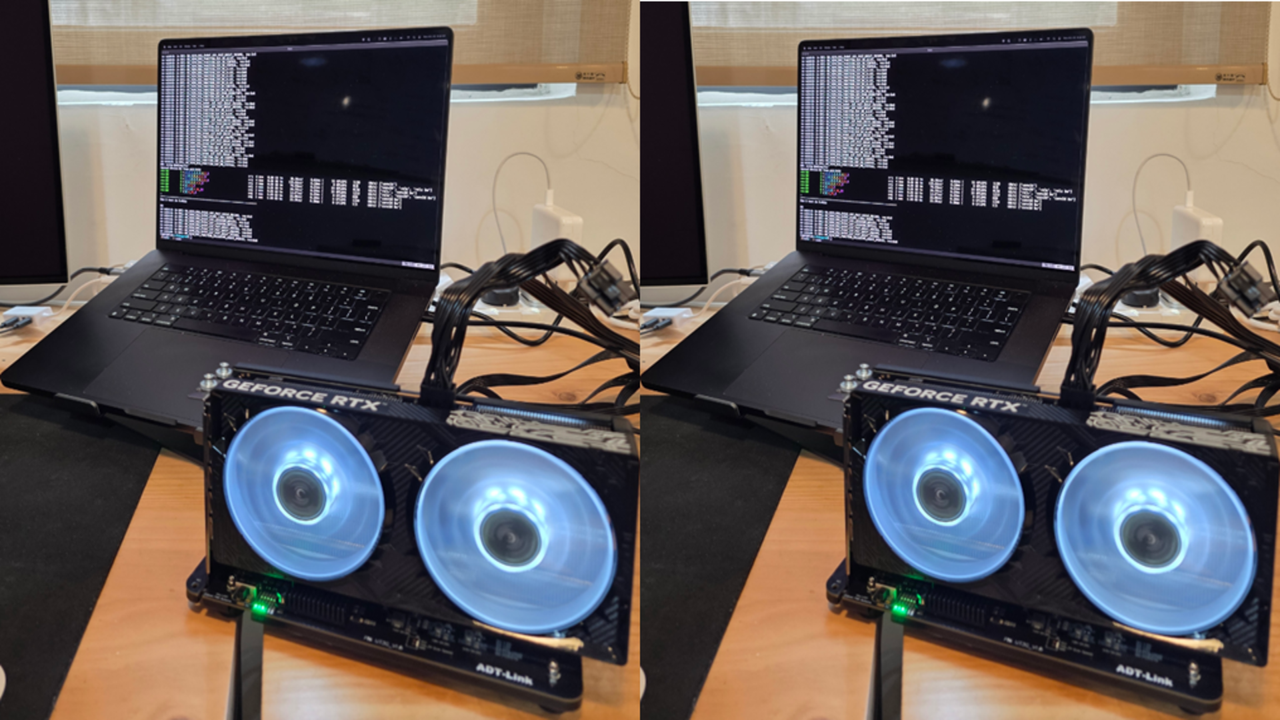

Apple MacBooks running Nvidia RTX GPUs are not a fantasy anymore - TinyCorp unlocks a whole new world of possibilities in a surprisingly low-tech way

I tested the JBL Grip and JBL Clip 5 small Bluetooth speakers – here’s which one I’d recommend for you

Backbone One vs Backbone Pro: Is the more expensive mobile controller actually worth it?

Time for Nvidia to worry? Apple has started shipping its AI servers way ahead of schedule - but how will they stand up to real-life performance?

I tested a 3-in-1 MagGo iPhone and Apple Watch charger that juiced my watch so fast, I'm never going back to anything slower

I tested the Asus ProArt P16 H7606WX creative laptop with RTX 5090, and I might never go back to a MacBook Pro

Data centers in spaaaaace - space tech firms want to take Nvidia H100 GPUs into orbit to power the next generation of compute

Waabi unveils autonomous truck made in partnership with Volvo

Unlisted connects homeowners with prospective buyers before they even put their homes up for sale and is part of TechCrunch Disrupt 2025

Super Teacher is building an AI tutor for elementary schools — catch it at Disrupt 2025

Mappa’s AI voice analysis helps you find the best job candidates and will show off its tech at TechCrunch Disrupt 2025

Inside CampusAI’s mission to close the AI training gap for everyday workers — check it out at TechCrunch Disrupt 2025

Unthread has a plan for cleaning up Slack and will show off its tech at TechCrunch Disrupt 2025

Khosla-backed Mazama taps super-hot rocks in race to deliver 24/7 power

Aurora expands self-driving trucks route to El Paso

Mirror’s founder is back with a new ‘connected screen’ startup: a gaming device called ‘Board’

Netflix CTO says more vertical video experiments are coming, but streamer is not competing with TikTok

Trust In AI Shopping Is Limited As Shoppers Verify On Websites

An IAB report finds AI speeds discovery but adds steps, sending shoppers to retailers, search, reviews, and forums to verify details before buying.

The post Trust In AI Shopping Is Limited As Shoppers Verify On Websites appeared first on Search Engine Journal.

US and Japan move to loosen China’s rare earths grip — nations partner to build alternative pathways to power, resource independence

NVIDIA Boosts Navy AI Training with DGX GB300

The post NVIDIA Boosts Navy AI Training with DGX GB300 appeared first on StartupHub.ai.

NVIDIA's DGX GB300 system is empowering the Naval Postgraduate School with advanced NVIDIA Navy AI training, enabling secure, on-premises generative AI and high-fidelity digital twin simulations for critical defense applications.

NVIDIA Charts America’s AI Future with Industrial-Scale Vision

The post NVIDIA Charts America’s AI Future with Industrial-Scale Vision appeared first on StartupHub.ai.

NVIDIA's GTC Washington, D.C., keynote unveiled a strategic blueprint for America's AI future, emphasizing national infrastructure, physical AI, and industry transformation.

NVIDIA AI Fuels US Economic Development

The post NVIDIA AI Fuels US Economic Development appeared first on StartupHub.ai.

NVIDIA is driving significant AI economic development across the US by partnering with states, cities, and universities to democratize AI access and foster innovation.

Microsoft’s OpenAI Bet Yields 10x Return, Igniting AI Infrastructure Race

The post Microsoft’s OpenAI Bet Yields 10x Return, Igniting AI Infrastructure Race appeared first on StartupHub.ai.

Microsoft’s staggering ten-fold return on its OpenAI investment, now valued at $135 billion, signals a new era where strategic AI stakes redefine corporate power and valuation. This monumental gain, highlighted by CNBC’s MacKenzie Sigalos, follows a significant corporate restructure at OpenAI that redefines its partnership terms with Microsoft, granting the tech giant a 27% equity […]

Desktop Commander raises €1.1M to advance AI desktop automation

The post Desktop Commander raises €1.1M to advance AI desktop automation appeared first on StartupHub.ai.

Desktop Commander raised €1.1 million to develop its AI tool that allows non-technical users to automate computer tasks using natural language.

Grasp raises $7M to advance its multi-agent AI for finance

The post Grasp raises $7M to advance its multi-agent AI for finance appeared first on StartupHub.ai.

AI startup Grasp raised $7 million to expand its multi-agent platform that automates complex financial analysis and reporting for consultants and investment banks.

SalesPatriot raises $5M to advance AI defence procurement

The post SalesPatriot raises $5M to advance AI defence procurement appeared first on StartupHub.ai.

SalesPatriot is developing AI-driven procurement software to help defence and aerospace suppliers automate orders and increase processing speeds.

Dott extends funding to $150M to expand its e-bike fleet

The post Dott extends funding to $150M to expand its e-bike fleet appeared first on StartupHub.ai.

Micro-mobility company Dott extended its funding to over $150 million to expand its e-bike fleet and enter new European markets.

Nokia secures $1B from Nvidia to build AI telecoms networks

The post Nokia secures $1B from Nvidia to build AI telecoms networks appeared first on StartupHub.ai.

Nvidia is investing $1 billion in Nokia to accelerate the development of AI-powered 5G and 6G telecommunications networks.

Socratix AI raises $4.1M to build autonomous AI coworkers

The post Socratix AI raises $4.1M to build autonomous AI coworkers appeared first on StartupHub.ai.

Socratix AI raised $4.1M to build autonomous AI coworkers that automate investigations for fraud and risk teams at financial institutions.

NVIDIA AI Physics Simulation Reshapes Engineering

The post NVIDIA AI Physics Simulation Reshapes Engineering appeared first on StartupHub.ai.

NVIDIA AI physics simulation, powered by the PhysicsNeMo framework, is accelerating engineering design by up to 500x in aerospace and automotive.

Energy as the New Geopolitical Currency in the AI Race

The post Energy as the New Geopolitical Currency in the AI Race appeared first on StartupHub.ai.

“Knowledge used to be power, now power is knowledge.” This stark redefinition, articulated by U.S. Secretary of the Interior Doug Burgum during a CNBC “Power Lunch” interview, cuts to the core of the contemporary global power struggle. Speaking with Brian Sullivan, Burgum outlined a comprehensive strategy for the United States to secure its position in […]

CoreStory raises $32M to advance AI legacy code modernization

The post CoreStory raises $32M to advance AI legacy code modernization appeared first on StartupHub.ai.

AI startup CoreStory raised $32 million to help enterprises modernize legacy software with its platform that automatically documents and analyzes old code.

Microsoft Windows Server Update Service Is Under Attack, What You Need To Know

Windows Server 2025 is currently open to a Remote Code Execution exploit via the Windows Update Service, and at the time of this writing a fix from Microsoft has yet to fully patch the issue. Reports to The Register indicate that Microsoft's attempt to patch the exploit earlier this month didn't stop any active exploitation, contrary to Microsoft's

UK's Skyrora and ESA to Test new Tanbium Alloy to Cut Waste and Improve Component Lifespan

(PR) HPE to Build "Mission" and "Vision" Supercomputers Featuring NVIDIA Vera Rubin

"For decades, HPE and Los Alamos National Laboratory have collaborated on innovative supercomputing designs that deliver powerful capabilities to solve complex scientific challenges and bolster national security efforts," said Trish Damkroger, senior vice president and general manager, HPC & AI Infrastructure Solutions at HPE. "We are proud to continue powering the lab's journey with the upcoming Mission and Vision systems. These innovations will be among the first to feature next-generation HPE Cray supercomputing architecture to drive AI innovation and scientific impact."

(PR) Sandisk Launches Officially Licensed FIFA World Cup 2026 Product Lineup

Blending heritage with innovation, the design-led products honor host nations and iconic moments through a lineup that ranges from whistle-inspired USB-C drives to SSDs in tournament colors and pro-level memory cards for capturing history-making moments. Each product proudly bears official FIFA World Cup 2026 licensing marks and host nation-inspired details, making them authentic pieces of football history.

(PR) Supermicro Expands NVIDIA Collaboration, Focuses on U.S.-Made AI Systems for Government Use

"Our expanded collaboration with NVIDIA and our focus on U.S.-based manufacturing position Supermicro as a trusted partner for federal AI deployments. With our corporate headquarters, manufacturing, and R&D all based in San Jose, California, in the heart of Silicon Valley, we have an unparalleled ability and capacity to deliver first-to-market solutions are developed, constructed, validated (and manufactured) for American federal customers," said Charles Liang, president and CEO, Supermicro. "The result of many years of working hand-in-hand with our close partner NVIDIA—also based in Silicon Valley—Supermicro has cemented its position as a pioneer of American AI infrastructure development."

(PR) Don't Nod Reveals New Trailer for Aphelion

Aphelion is a sci-fi action-adventure on the edge of the solar system. In the shoes of ESA astronauts Ariane and Thomas, players will explore and survey the uncharted planet Persephone and solve the mystery of the crash, all while trying to survive in the terrifying presence of an unknown enemy. At its heart, the game is an emotional tale about love, resilience, hope, and what we bring with us when everything is lost.

(PR) Creative Technology Launches Kickstarter Campaign for Sound Blaster Re:Imagine

Since its debut in 1989, Sound Blaster has shipped more than 400 million devices worldwide, shaping the soundtrack of the digital age. The original Sound Blaster gave PCs a voice, powering the rise of multimedia, gaming, and digital creativity. Sound Blaster Re:Imagine builds on that heritage - taking the DNA of Sound Blaster and evolving it into a modern, modular platform designed for creators, gamers, and anyone who lives at the intersection of work and play.

(PR) MAINGEAR Introduces aiDAPTIV+ Package for Pro RS & Pro WS Workstations Co-Developed With Phison

AI teams need on-prem training and inference performance without the unpredictability of cloud costs or the exposure of sensitive data. The aiDAPTIV+ package combines MAINGEAR's powerful, enterprise-ready workstations with Phison's aiDAPTIV+ intelligent SSD caching to expand effective VRAM, enabling larger models and longer contexts at the edge, with predictable costs and IT-friendly deployment.

(PR) Giga Computing Showcases Scalable Next-Gen AI and Visualization Solutions at NVIDIA GTC DC 2025

The booth features four flagship GIGABYTE systems: the AI TOP ATOM, the W775-V10 workstation, the XL44-SX2 (NVIDIA RTX PRO Server), and a liquid-cooled G4L4-SD3 AI server. Together, these GIGABYTE solutions are built on the NVIDIA Blackwell architecture to enable efficient, high-performance AI and visualization workloads spanning every compute tier.

(PR) ASUS IoT Announces PE3000N Based On NVIDIA Jetson Thor

Rugged reliability for challenging environments