A New Security Layer for macOS Takes Aim at Admin Errors Before Hackers Do

The post Amazon’s Cloud and AI Crossroads: Navigating Intense Competition and Infrastructure Demands appeared first on StartupHub.ai.

The burgeoning demands of generative AI are fundamentally reshaping the competitive landscape of cloud computing, compelling even market leaders like Amazon to critically assess their strategic investments. CNBC’s MacKenzie Sigalos, reporting on Amazon’s third-quarter earnings, underscored that the company’s cloud momentum and substantial AI infrastructure spending are now under intense scrutiny, with investors eager to […]

The post Salesforce Agentic Commerce: AI Redefines Retail appeared first on StartupHub.ai.

Salesforce Agentic Commerce introduces AI-powered tools and strategic partnerships to redefine retail, enhancing discovery, personalization, and operational efficiency.

OpenAI has introduced Aardvark, a GPT-5-powered autonomous security researcher agent now available in private beta.

Designed to emulate how human experts identify and resolve software vulnerabilities, Aardvark offers a multi-stage, LLM-driven approach to continuous code analysis, exploit validation, and patch generation.

Positioned as a scalable defense tool for modern software development environments, Aardvark is being tested across internal and external codebases.

OpenAI reports high recall and real-world effectiveness in identifying known and synthetic vulnerabilities, with early deployments surfacing previously undetected security issues.

Aardvark comes on the heels of OpenAI’s release of the gpt-oss-safeguard models yesterday, extending the company’s recent emphasis on agentic and policy-aligned systems.

Aardvark operates as an agentic system that continuously analyzes source code repositories. Unlike conventional tools that rely on fuzzing or software composition analysis, Aardvark leverages LLM reasoning and tool-use capabilities to interpret code behavior and identify vulnerabilities.

It simulates a security researcher’s workflow by reading code, conducting semantic analysis, writing and executing test cases, and using diagnostic tools.

Its process follows a structured multi-stage pipeline:

Threat Modeling – Aardvark initiates its analysis by ingesting an entire code repository to generate a threat model. This model reflects the inferred security objectives and architectural design of the software.

Commit-Level Scanning – As code changes are committed, Aardvark compares diffs against the repository’s threat model to detect potential vulnerabilities. It also performs historical scans when a repository is first connected.

Validation Sandbox – Detected vulnerabilities are tested in an isolated environment to confirm exploitability. This reduces false positives and enhances report accuracy.

Automated Patching – The system integrates with OpenAI Codex to generate patches. These proposed fixes are then reviewed and submitted via pull requests for developer approval.
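The four stages above can be sketched as a simple control flow. This is an illustrative outline only, not OpenAI's implementation or API: `Finding`, `run_pipeline`, and the callables passed into it are hypothetical names, and the toy lambdas stand in for the LLM-driven steps the article describes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Finding:
    commit_id: str
    description: str
    proposed_patch: Optional[str] = None


def run_pipeline(
    repo_files: List[str],
    commits: List[Dict[str, str]],
    build_threat_model: Callable[[List[str]], str],
    scan_commit: Callable[[Dict[str, str], str], List[str]],
    is_exploitable: Callable[[str], bool],
    propose_patch: Callable[[str], str],
) -> List[Finding]:
    # Stage 1: derive a threat model from the whole repository.
    threat_model = build_threat_model(repo_files)
    confirmed: List[Finding] = []
    for commit in commits:
        # Stage 2: compare each commit's diff against the threat model.
        for description in scan_commit(commit, threat_model):
            # Stage 3: keep only candidates confirmed in the sandbox.
            if not is_exploitable(description):
                continue
            # Stage 4: attach a proposed patch for human review.
            confirmed.append(
                Finding(commit["id"], description, propose_patch(description))
            )
    return confirmed


# Toy stand-ins (all hypothetical) so the sketch runs end to end.
commits = [
    {"id": "a1", "diff": "+ query = 'SELECT * FROM t WHERE id=' + user_id"},
    {"id": "b2", "diff": "+ print('hello')"},
]
findings = run_pipeline(
    repo_files=["app.py"],
    commits=commits,
    build_threat_model=lambda files: "user input must not reach raw SQL",
    scan_commit=lambda c, tm: (
        ["possible SQL injection"] if "SELECT" in c["diff"] else []
    ),
    is_exploitable=lambda description: True,
    propose_patch=lambda description: "use a parameterized query",
)
print([(f.commit_id, f.description) for f in findings])
```

In this sketch, candidates that fail validation are dropped before any patch is proposed, which mirrors the article's point that the sandbox step exists to reduce false positives.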

Aardvark integrates with GitHub, Codex, and common development pipelines to provide continuous, non-intrusive security scanning. All insights are intended to be human-auditable, with clear annotations and reproducibility.

According to OpenAI, Aardvark has been operational for several months on internal codebases and with select alpha partners.

In benchmark testing on “golden” repositories—where known and synthetic vulnerabilities were seeded—Aardvark identified 92% of total issues.

OpenAI emphasizes that its accuracy and low false positive rate are key differentiators.

The agent has also been deployed on open-source projects. To date, it has discovered multiple critical issues, including ten vulnerabilities that were assigned CVE identifiers.

OpenAI states that all findings were responsibly disclosed under its recently updated coordinated disclosure policy, which favors collaboration over rigid timelines.

In practice, Aardvark has surfaced complex bugs beyond traditional security flaws, including logic errors, incomplete fixes, and privacy risks. This suggests broader utility beyond security-specific contexts.

During the private beta, Aardvark is only available to organizations using GitHub Cloud (github.com). OpenAI invites beta testers to sign up online through a web form. Participation requirements include:

Integration with GitHub Cloud

Commitment to interact with Aardvark and provide qualitative feedback

Agreement to beta-specific terms and privacy policies

OpenAI confirmed that code submitted to Aardvark during the beta will not be used to train its models.

The company is also offering pro bono vulnerability scanning for selected non-commercial open-source repositories, citing its intent to contribute to the health of the software supply chain.

The launch of Aardvark signals OpenAI’s broader movement into agentic AI systems with domain-specific capabilities.

While OpenAI is best known for its general-purpose models (e.g., GPT-4 and GPT-5), Aardvark is part of a growing trend of specialized AI agents designed to operate semi-autonomously within real-world environments. In fact, it joins two other active OpenAI agents now:

ChatGPT agent, unveiled back in July 2025, which controls a virtual computer and web browser and can create and edit common productivity files

Codex — previously the name of OpenAI's open source coding model, which it took and re-used as the name of its new GPT-5 variant-powered AI coding agent unveiled back in May 2025

But a security-focused agent makes a lot of sense, especially as demands on security teams grow.

In 2024 alone, over 40,000 Common Vulnerabilities and Exposures (CVEs) were reported, and OpenAI’s internal data suggests that 1.2% of all code commits introduce bugs.

Aardvark’s positioning as a “defender-first” AI aligns with a market need for proactive security tools that integrate tightly with developer workflows rather than operate as post-hoc scanning layers.

OpenAI’s coordinated disclosure policy updates further reinforce its commitment to sustainable collaboration with developers and the open-source community, rather than emphasizing adversarial vulnerability reporting.

While yesterday's release of gpt-oss-safeguard uses chain-of-thought reasoning to apply safety policies during inference, Aardvark applies similar LLM reasoning to secure evolving codebases.

Together, these tools signal OpenAI’s shift from static tooling toward flexible, continuously adaptive systems — one focused on content moderation, the other on proactive vulnerability detection and automated patching within real-world software development environments.

Aardvark represents OpenAI’s entry into automated security research through agentic AI. By combining GPT-5’s language understanding with Codex-driven patching and validation sandboxes, Aardvark offers an integrated solution for modern software teams facing increasing security complexity.

While currently in limited beta, the early performance indicators suggest potential for broader adoption. If proven effective at scale, Aardvark could contribute to a shift in how organizations embed security into continuous development environments.

For security leaders tasked with managing incident response, threat detection, and day-to-day protections—particularly those operating with limited team capacity—Aardvark may serve as a force multiplier. Its autonomous validation pipeline and human-auditable patch proposals could streamline triage and reduce alert fatigue, enabling smaller security teams to focus on strategic incidents rather than manual scanning and follow-up.

AI engineers responsible for integrating models into live products may benefit from Aardvark’s ability to surface bugs that arise from subtle logic flaws or incomplete fixes, particularly in fast-moving development cycles. Because Aardvark monitors commit-level changes and tracks them against threat models, it may help prevent vulnerabilities introduced during rapid iteration, without slowing delivery timelines.

For teams orchestrating AI across distributed environments, Aardvark’s sandbox validation and continuous feedback loops could align well with CI/CD-style pipelines for ML systems. Its ability to plug into GitHub workflows positions it as a compatible addition to modern AI operations stacks, especially those aiming to integrate robust security checks into automation pipelines without additional overhead.

And for data infrastructure teams maintaining critical pipelines and tooling, Aardvark’s LLM-driven inspection capabilities could offer an added layer of resilience. Vulnerabilities in data orchestration layers often go unnoticed until exploited; Aardvark’s ongoing code review process may surface issues earlier in the development lifecycle, helping data engineers maintain both system integrity and uptime.

In practice, Aardvark represents a shift in how security expertise might be operationalized—not just as a defensive perimeter, but as a persistent, context-aware participant in the software lifecycle. Its design suggests a model where defenders are no longer bottlenecked by scale, but augmented by intelligent agents working alongside them.

Researchers at Meta FAIR and the University of Edinburgh have developed a new technique that can predict the correctness of a large language model's (LLM) reasoning and even intervene to fix its mistakes. Called Circuit-based Reasoning Verification (CRV), the method looks inside an LLM to monitor its internal “reasoning circuits” and detect signs of computational errors as the model solves a problem.

Their findings show that CRV can detect reasoning errors in LLMs with high accuracy by building and observing a computational graph from the model's internal activations. In a key breakthrough, the researchers also demonstrated they can use this deep insight to apply targeted interventions that correct a model’s faulty reasoning on the fly.

The technique could help solve one of the great challenges of AI: Ensuring a model’s reasoning is faithful and correct. This could be a critical step toward building more trustworthy AI applications for the enterprise, where reliability is paramount.

Chain-of-thought (CoT) reasoning has been a powerful method for boosting the performance of LLMs on complex tasks and has been one of the key ingredients in the success of reasoning models such as the OpenAI o-series and DeepSeek-R1.

However, despite the success of CoT, it is not fully reliable. The reasoning process itself is often flawed, and several studies have shown that the CoT tokens an LLM generates are not always a faithful representation of its internal reasoning process.

Current remedies for verifying CoT fall into two main categories. “Black-box” approaches analyze the final generated token or the confidence scores of different token options. “Gray-box” approaches go a step further, looking at the model's internal state by using simple probes on its raw neural activations.

But while these methods can detect that a model’s internal state is correlated with an error, they can't explain why the underlying computation failed. For real-world applications where understanding the root cause of a failure is crucial, this is a significant gap.

CRV is based on the idea that models perform tasks using specialized subgraphs, or "circuits," of neurons that function like latent algorithms. So if the model’s reasoning fails, it is caused by a flaw in the execution of one of these algorithms. This means that by inspecting the underlying computational process, we can diagnose the cause of the flaw, similar to how developers examine execution traces to debug traditional software.

To make this possible, the researchers first make the target LLM interpretable. They replace the standard dense layers of the transformer blocks with trained "transcoders." A transcoder is a specialized deep learning component that forces the model to represent its intermediate computations not as a dense, unreadable vector of numbers, but as a sparse and meaningful set of features. Transcoders are similar to the sparse autoencoders (SAE) used in mechanistic interpretability research with the difference that they also preserve the functionality of the network they emulate. This modification effectively installs a diagnostic port into the model, allowing researchers to observe its internal workings.

With this interpretable model in place, the CRV process unfolds in a few steps. For each reasoning step the model takes, CRV constructs an "attribution graph" that maps the causal flow of information between the interpretable features of the transcoder and the tokens it is processing. From this graph, it extracts a "structural fingerprint" that contains a set of features describing the graph's properties. Finally, a “diagnostic classifier” model is trained on these fingerprints to predict whether the reasoning step is correct or not.
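A toy version of the fingerprint-then-classify idea might look like the following. Everything here is an assumption for illustration: the article does not list which graph properties the researchers use or what kind of classifier they train, so the four features, the `fingerprint` function, and the nearest-centroid classifier are hypothetical substitutes, and the example vectors are invented.

```python
from statistics import mean
from typing import Dict, List, Tuple

Edge = Tuple[str, str]  # (source node, target node)


def fingerprint(graph: Dict[Edge, float], threshold: float = 0.1) -> List[float]:
    """Summarise an attribution graph as a fixed-length feature vector."""
    # Keep only edges whose attribution weight is large enough to matter.
    strong = {edge: w for edge, w in graph.items() if abs(w) >= threshold}
    nodes = {node for edge in graph for node in edge}
    in_degree: Dict[str, int] = {}
    for _, dst in strong:
        in_degree[dst] = in_degree.get(dst, 0) + 1
    return [
        float(len(strong)),                                  # strong edge count
        mean(abs(w) for w in strong.values()) if strong else 0.0,
        float(max(in_degree.values(), default=0)),           # busiest target
        len(strong) / max(len(nodes), 1),                    # edges per node
    ]


def centroid(vectors: List[List[float]]) -> List[float]:
    return [mean(column) for column in zip(*vectors)]


def predict_correct(fp: List[float], good: List[float], bad: List[float]) -> bool:
    """Nearest-centroid stand-in for the diagnostic classifier."""
    def sq_dist(a: List[float], b: List[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return sq_dist(fp, good) <= sq_dist(fp, bad)


# Hypothetical attribution graph for one reasoning step.
graph = {
    ("feat_add", "tok_7"): 0.5,
    ("feat_carry", "tok_7"): 0.25,
    ("feat_add", "feat_carry"): 0.0625,  # below threshold, ignored
}
fp = fingerprint(graph)

# Invented training fingerprints, labeled by whether the step was correct.
good_centroid = centroid([[2.0, 0.4, 2.0, 0.7], [3.0, 0.3, 2.0, 0.8]])
bad_centroid = centroid([[8.0, 0.1, 5.0, 2.0], [9.0, 0.2, 6.0, 2.5]])
print(fp, predict_correct(fp, good_centroid, bad_centroid))
```

The design point the sketch tries to capture is that the classifier never sees raw activations, only structural summaries of the graph.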

At inference time, the classifier monitors the activations of the model and provides feedback on whether the model’s reasoning trace is on the right track.

The researchers tested their method on a Llama 3.1 8B Instruct model modified with the transcoders, evaluating it on a mix of synthetic (Boolean and Arithmetic) and real-world (GSM8K math problems) datasets. They compared CRV against a comprehensive suite of black-box and gray-box baselines.

The results provide strong empirical support for the central hypothesis: the structural signatures in a reasoning step's computational trace contain a verifiable signal of its correctness. CRV consistently outperformed all baseline methods across every dataset and metric, demonstrating that a deep, structural view of the model's computation is more powerful than surface-level analysis.

Interestingly, the analysis revealed that the signatures of error are highly domain-specific. This means failures in different reasoning tasks (formal logic versus arithmetic calculation) manifest as distinct computational patterns. A classifier trained to detect errors in one domain does not transfer well to another, highlighting that different types of reasoning rely on different internal circuits. In practice, this means that you might need to train a separate classifier for each task (though the transcoder remains unchanged).

The most significant finding, however, is that these error signatures are not just correlational but causal. Because CRV provides a transparent view of the computation, a predicted failure can be traced back to a specific component. In one case study, the model made an order-of-operations error. CRV flagged the step and identified that a "multiplication" feature was firing prematurely. The researchers intervened by manually suppressing that single feature, and the model immediately corrected its path and solved the problem correctly.
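The mechanics of such an intervention can be illustrated with a toy sparse layer. This is not the paper's code: the feature names, decoder weights, and the `decode` and `suppress` helpers are all hypothetical, chosen only to show how zeroing one interpretable feature can change what a layer outputs.

```python
from typing import Dict, List


def decode(features: Dict[str, float], decoder: Dict[str, List[float]]) -> List[float]:
    """Map sparse feature activations back to an output vector."""
    size = len(next(iter(decoder.values())))
    out = [0.0] * size
    for name, activation in features.items():
        for i, weight in enumerate(decoder[name]):
            out[i] += activation * weight
    return out


def suppress(features: Dict[str, float], name: str) -> Dict[str, float]:
    """Return a copy of the activations with one feature clamped to zero."""
    return {k: (0.0 if k == name else v) for k, v in features.items()}


# Hypothetical setup: output index 0 scores "multiply next",
# index 1 scores "add next".
decoder = {"multiplication": [1.0, 0.0], "addition": [0.0, 1.0]}
features = {"multiplication": 2.0, "addition": 1.5}  # multiplication fires too early

before = decode(features, decoder)
after = decode(suppress(features, "multiplication"), decoder)

next_op_before = ["multiply", "add"][before.index(max(before))]
next_op_after = ["multiply", "add"][after.index(max(after))]
print(next_op_before, "->", next_op_after)
```

Because the features are sparse and named, the edit touches a single entry rather than an entire dense activation vector.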

This work represents a step toward a more rigorous science of AI interpretability and control. As the paper concludes, “these findings establish CRV as a proof-of-concept for mechanistic analysis, showing that shifting from opaque activations to interpretable computational structure enables a causal understanding of how and why LLMs fail to reason correctly.” To support further research, the team plans to release its datasets and trained transcoders to the public.

While CRV is a research proof-of-concept, its results hint at a significant future for AI development. AI models learn internal algorithms, or "circuits," for different tasks. But because these models are opaque, we can't debug them like standard computer programs by tracing bugs to specific steps in the computation. Attribution graphs are the closest thing we have to an execution trace, showing how an output is derived from intermediate steps.

This research suggests that attribution graphs could be the foundation for a new class of AI model debuggers. Such tools would allow developers to understand the root cause of failures, whether it's insufficient training data or interference between competing tasks. This would enable precise mitigations, like targeted fine-tuning or even direct model editing, instead of costly full-scale retraining. They could also allow for more efficient intervention to correct model mistakes during inference.

The success of CRV in detecting and pinpointing reasoning errors is an encouraging sign that such debuggers could become a reality. This would pave the way for more robust LLMs and autonomous agents that can handle real-world unpredictability and, much like humans, correct course when they make reasoning mistakes.

LinkedIn's also taking more action to crack down on fake engagement activity.

The post Stripe’s AI Backbone: Powering the Agent Economy with Financial Infrastructure appeared first on StartupHub.ai.

Stripe, under the leadership of Emily Glassberg Sands, Head of Data & AI, is not merely adapting to the artificial intelligence revolution; it is actively constructing the financial infrastructure upon which this burgeoning agent economy will operate. In a recent Latent Space podcast interview with hosts Shawn Wang and Alessio Fanelli, Sands articulated Stripe’s ambitious […]

The post Poolside reportedly raising up to $1B to advance AI code generation appeared first on StartupHub.ai.

AI code generation startup Poolside is reportedly raising up to $1 billion from investor Nvidia to build tools that accelerate software development.

The post AI’s Relentless March: Efficiency, Autonomy, and Economic Reshaping appeared first on StartupHub.ai.

The accelerating integration of artificial intelligence into daily life and industrial infrastructure is no longer a distant vision but a tangible reality, as evidenced by the rapid-fire developments discussed in Matthew Berman’s latest Forward Future AI news briefing. From the nascent stages of consumer robotics to revolutionary computing paradigms, the AI landscape is undergoing a […]

The post XPO’s AI-Driven Efficiency in a Soft Freight Market appeared first on StartupHub.ai.

In an era where artificial intelligence often conjures images of job displacement, XPO CEO Mario Harik offers a refreshingly pragmatic perspective: AI, for his logistics giant, is fundamentally about efficiency and optimization, not headcount reduction. This insight anchored a recent interview on CNBC’s Worldwide Exchange with anchor Frank Holland, where Harik detailed XPO’s latest earnings […]

Amazon’s third-quarter profits rose 38% to $21.2 billion, but a big part of the jump had nothing to do with its core businesses of selling goods or cloud services.

The company reported a $9.5 billion pre-tax gain from its investment in the AI startup Anthropic, which was included in its non-operating income for the quarter.

The windfall wasn’t the result of a sale or cash transaction, but rather accounting rules. After Anthropic raised new funding in September at a $183 billion valuation, Amazon was required to revalue its equity stake to reflect the higher market price, a process known as a “mark-to-market” adjustment.

To put the $9.5 billion paper gain in perspective, the Amazon Web Services cloud business — historically Amazon’s primary profit engine — generated $11.4 billion in quarterly operating profits.

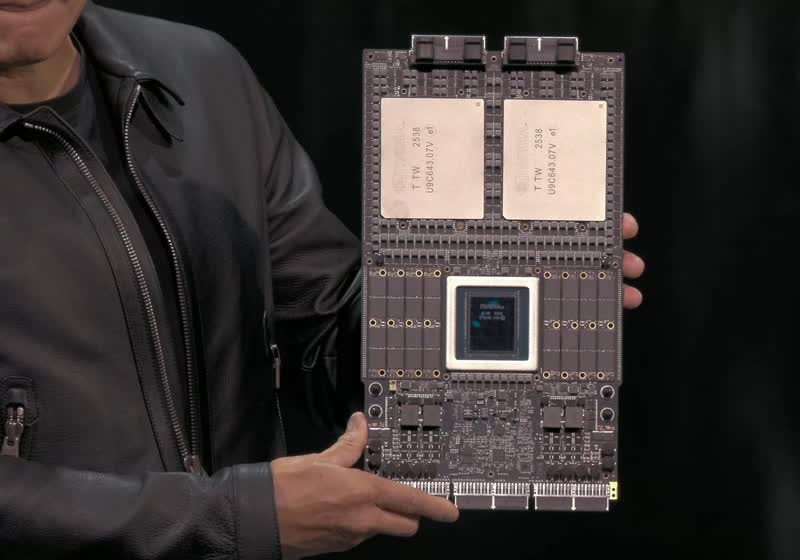

At the same time, Amazon is spending big on its AI infrastructure buildout for Anthropic and others. The company just opened an $11 billion AI data center complex, dubbed Project Rainier, where Anthropic’s Claude models run on hundreds of thousands of Amazon’s Trainium 2 chips.

Amazon is going head-to-head against Microsoft, which just re-upped its partnership with ChatGPT maker OpenAI; and Google, which reported record cloud revenue for its recent quarter, driven by AI. The AI infrastructure race is fueling a big surge in capital spending for all three cloud giants.

Amazon spent $35.1 billion on property and equipment in the third quarter alone, up 55% from a year earlier. Andy Jassy, the Amazon CEO, sought to reassure Wall Street that the big outlay will be worth it.

“You’re going to see us continue to be very aggressive investing in capacity, because we see the demand,” Jassy said on the company’s conference call. “As fast as we’re adding capacity right now, we’re monetizing it. It’s still quite early, and represents an unusual opportunity for customers and AWS.”

The cash for new data centers doesn’t hit the bottom line immediately, but it comes into play as depreciation and amortization costs are recorded on the income statement over time.

And in that way, the spending is starting to affect AWS results: sales rose 20% to $33 billion in the quarter, yet operating income increased only 9.6% to $11.4 billion. The gap indicates that Amazon’s heavy AI investments are compressing profit margins in the near term, even as the company bets on the infrastructure build-out to expand its business significantly over time.
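The margin compression can be checked with back-of-the-envelope arithmetic. The sketch below uses only the rounded figures quoted in this article and backs out the year-ago values from the stated growth rates, so the results are approximations rather than reported numbers; `prior_value` is a helper name introduced here for illustration.

```python
def prior_value(current: float, growth_pct: float) -> float:
    """Back out the year-ago value implied by a growth percentage."""
    return current / (1 + growth_pct / 100)


aws_sales = 33.0        # $ billions, this quarter (rounded, from the article)
aws_op_income = 11.4    # $ billions, this quarter (rounded, from the article)

margin_now = aws_op_income / aws_sales
margin_prior = prior_value(aws_op_income, 9.6) / prior_value(aws_sales, 20.0)

print(f"operating margin now:   {margin_now:.1%}")
print(f"implied year-ago margin: {margin_prior:.1%}")
print(f"change: {(margin_now - margin_prior) * 100:.1f} points")
```

On these rounded inputs the operating margin works out to roughly 34.5%, against an implied 37.8% a year earlier, a drop of a little over three percentage points.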

Those investments are also weighing on cash generation: Amazon’s free cash flow dropped 69% over the past year to $14.8 billion, reflecting the massive outlays for data centers and infrastructure.

Amazon has invested and committed a total of $8 billion in Anthropic, initially structured as convertible notes. A portion of that investment converted to equity with Anthropic’s prior funding round in March.

Corsair just announced its entrance into the leverless fightstick scene first popularized by Hit Box, the new Corsair Novablade Pro. Or to be more specific, the Corsair Novablade Pro Wireless Hall Effect Leverless Fight Controller—but we'll stick with Novablade Pro for now. The Novablade Pro is a direct competitor to the likes of Hit Box's

Amazon CEO Andy Jassy says the company’s latest big round of layoffs — about 14,000 corporate jobs — wasn’t triggered by financial strain or artificial intelligence replacing workers, but rather a push to stay nimble.

Speaking with analysts on Amazon’s quarterly earnings call Thursday, Jassy said the decision stemmed from a belief that the company had grown too big and too layered.

“The announcement that we made a few days ago was not really financially driven, and it’s not even really AI-driven — not right now, at least,” he said. “Really, it’s culture.”

Jassy’s comments are his first public explanation of the layoffs, which reportedly could ultimately total as many as 30,000 people — and would be the largest workforce reduction in Amazon’s history.

The news this week prompted speculation that the cuts were tied to automation or AI-related restructuring. Earlier this year, Jassy wrote in a memo to employees that he expected Amazon’s total corporate workforce to shrink over time due to efficiency gains from AI.

But his comments Thursday framed the layoffs as a cultural reset aimed at keeping the company fast-moving amid what he called “the technology transformation happening right now.”

Jassy, who succeeded founder Jeff Bezos as CEO in mid-2021, has pushed to reduce management layers and eliminate bureaucracy inside the company.

Amazon’s corporate headcount tripled between 2017 and 2022, according to The Information, before the company adopted a more cautious hiring approach.

Bloomberg News reported this week that Jassy has told colleagues parts of the company remain “unwieldy” despite efforts to streamline operations — including significant layoffs in 2023 when Amazon cut 27,000 corporate workers in multiple stages.

On Thursday’s call, Jassy said Amazon’s rapid growth led to extra layers of management that slowed decision-making.

“When that happens, sometimes without realizing it, you can weaken the ownership of the people that you have who are doing the actual work and who own most of the two-way door decisions — the ones that should be made quickly and right at the front line,” Jassy said, using a phrase popularized by Bezos to help determine how much thought and planning to put into big and small decisions.

The layoffs, he said, are meant to restore the kind of ownership and agility that defined Amazon’s early years.

“We are committed to operating like the world’s largest startup,” Jassy said, repeating a line he’s used recently.

Given the “transformation” he described happening across the business world, Jassy said it’s more important than ever to be lean, flat, and fast-moving. “That’s what we’re going to do,” he said.

Jassy’s comments came as Amazon reported quarterly revenue of $180.2 billion, up 13% year-over-year, with AWS revenue growth accelerating to 20% — its fastest pace since 2022.

Amazon said it took a $1.8 billion severance charge in the quarter related to the layoffs.

Amazon joins other tech giants including Microsoft that have trimmed headcount this year while investing heavily in AI infrastructure.

Canva has launched a new unified Affinity application that combines the previously separate Designer, Photo, and Publisher tools into one platform. The app offers a complete suite of professional features for vector design, image editing, and desktop publishing, now available free of charge.

Apple has deftly managed its geopolitical risk exposure by negotiating a broad-based import tariff exemption from the Trump Administration. Even so, the Cupertino giant has not been able to fully neutralize the impact of the US import tariffs, courtesy of its labyrinthine and sprawling global supply chain. Apple faced $1.1 billion in tariff-related costs in its fiscal Q4 2025 Apple adopted a 2-pronged strategy to deal with US import tariffs and trade war: Moreover, Apple is also planning to: As such, Apple has already started shipping its US-made servers to its datacenters, where they will help power features such as […]

Read full article at https://wccftech.com/apple-1-1-billion-hit-in-q4-2025/

Apple's iPhone product segment missed consensus expectations of analysts for the just-concluded fiscal fourth quarter of 2025, largely due to transitory weakness in iPhone 17 sales. Now, however, Apple has not only given a reasonable explanation behind this miss, but also offered surprising guidance for the ongoing December-ending quarter.

Apple to experience its best ever December-ending quarter

As we noted in our dedicated post on the topic, Apple's iPhone revenue missed expectations for its fiscal Q4 2025, which were pegged at $50.19 billion vs. the $49.03 billion haul that the Cupertino giant reported for the three-month period. During the earnings call, […]

Read full article at https://wccftech.com/tim-cook-apple-will-have-its-best-ever-december-quarter-thanks-to-the-iphone-17-lineup/

Ever since Apple announced its AI strategy revamp under the Apple Intelligence banner, there has been a perception that the company is struggling to maintain a swift pace with its lofty ambitions. There are increasing signs, however, that Apple is making some much-needed headway in this sphere, as per the tidbits gleaned from Apple's Q3 2025 Earnings Call. Apple Intelligence: A winding road ahead Do note that Apple has been working to introduce a number of key Apple Intelligence features with its Spring 2026 iOS update (iOS 26.4 most likely). These include: Of course, Apple Mac users can already enjoy […]

Read full article at https://wccftech.com/tim-cook-the-new-siri-under-the-apple-intelligence-banner-to-debut-in-2026/

Apple has just announced the earnings for its fiscal Q4 2025, reporting $102.47 billion in total revenue, which includes $49.03 billion from iPhones and $28.75 billion from services, and $27.47 billion in net profit.

Apple Fiscal Q4 2025 Earnings: iPhones and services show growth

Here are the key highlights from Apple's latest quarterly earnings release: For the full fiscal year 2025, Apple earned $416.16 billion in revenue, corresponding to a year-over-year increase of 6.42 percent relative to $391.04 billion that it earned in its last fiscal year. During the just-concluded fiscal year 2025, Apple earned $307 billion from its products […]

Read full article at https://wccftech.com/apple-fiscal-q4-2025-earnings-revenue-from-iphones-and-ipads-disappoints/

Maintaining its tradition for every Apple Silicon Mac that has launched so far, Amazon has introduced a discount for the 14-inch M5 MacBook Pro, shaving off $50 from the base and 1TB storage model, meaning that the new lineup starts from $1,549 instead of $1,599, with the price cut applied to both the Space Black and Silver colors. While the discount will slowly creep up as the months go by, you might want to keep the 14-inch M4 MacBook Pro a viable option because it is oozing tremendous value right now. For those looking to save money, the 14-inch M4 […]

Read full article at https://wccftech.com/m5-macbook-pro-in-512gb-and-1tb-options-now-50-cheaper-on-amazon/

This story originally appeared on Real Estate News.

Zillow continues to be an overachiever, at least with its financial performance.

The home search giant’s revenue has consistently beaten expectations for the past two years, and Q3 was no different: Revenue was $676 million for the third quarter, up 16% year-over-year and above the company’s previous guidance, driven by the strength of its rentals and mortgage divisions.

Rentals revenue was up 41% year-over-year to $174 million, while mortgage revenue increased 36% to $53 million, according to Zillow’s shareholder letter. The company’s main revenue stream, residential, rose 7% to $435 million.

Zillow also turned a profit, netting $10 million during the quarter and sustaining its run of profitability for a third consecutive quarter.

“Zillow’s Q3 results show how well we’re delivering on our mission to make buying, selling, financing and renting easier,” Zillow CEO Jeremy Wacksman said in a news release. “Zillow is leading the industry toward a more transparent, consumer-first future.”

The real estate portal also continues to see growth in its website traffic, hitting 250 million average monthly unique visitors in the third quarter, up 7% year-over-year.

Wacksman and CFO Jeremy Hofmann acknowledged that they are also aware of the “external noise” that has gotten louder in recent months, possibly referring to recent lawsuits involving the company and the debate over exclusive listings, including Zillow’s private listing ban.

Revenue: $676 million, up 16% year-over-year. Residential increased 7% to $435 million; mortgage revenue was up 36% to $53 million; and rentals revenue climbed 41% to $174 million.

Cash and investments: $1.4 billion at the end of September, up from $1.2 billion at the end of June.

Adjusted EBITDA (earnings before interest, taxes, depreciation and amortization): $165 million in Q3, up from $127 million a year earlier.

Net income/loss: A gain of $10 million in Q3, up from $2 million the previous quarter, an improvement over its $20 million loss a year ago.

Traffic and visits: Traffic across all Zillow Group websites and apps totaled 250 million average monthly unique users in Q3, up 7% year-over-year, the company said. Total visits were 2.5 billion in Q3, up 4% year-over-year.

Q4 outlook: For the fourth quarter, Zillow estimates revenue will be in the $645 million to $655 million range, which would represent high single-digit year-over-year growth.

The rise of AI marks a critical shift away from decades defined by information-chasing and a push for more and more compute power.

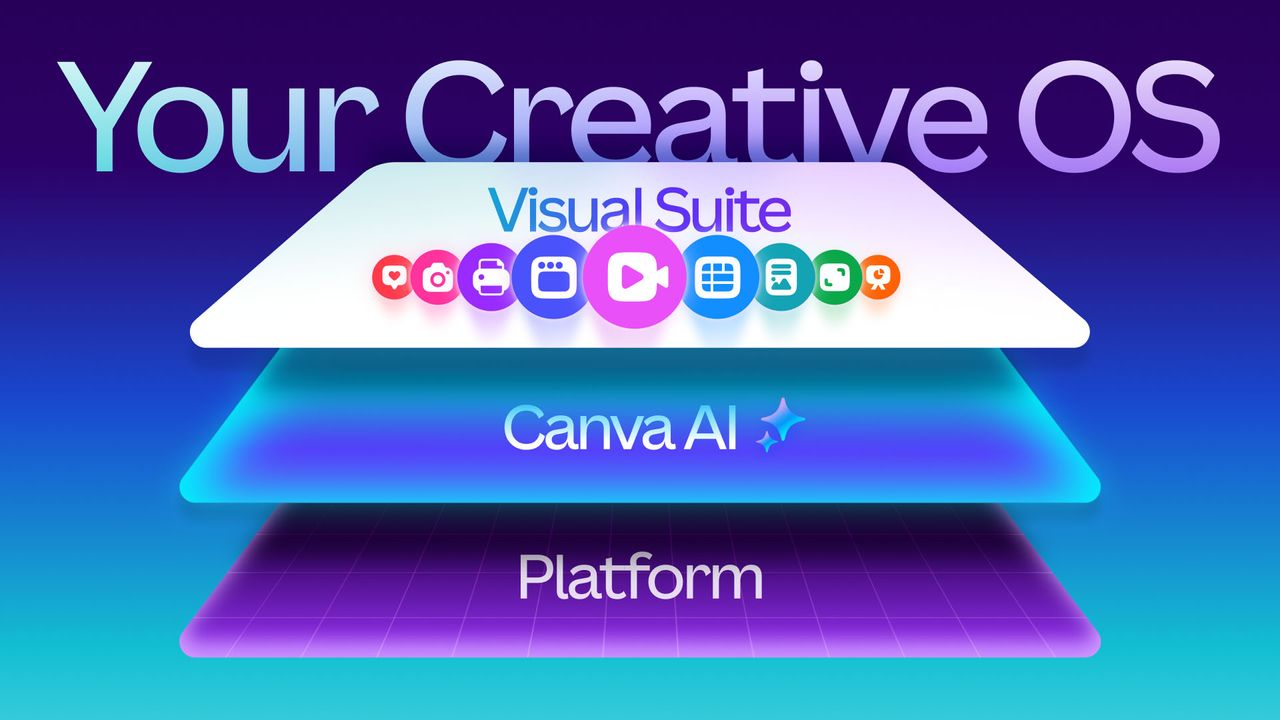

Canva co-founder and CPO Cameron Adams refers to this dawning time as the “imagination era.” Meaning: Individuals and enterprises must be able to turn creativity into action with AI.

Canva hopes to position itself at the center of this shift with a sweeping new suite of tools. The company’s new Creative Operating System (COS) integrates AI across every layer of content creation, creating a single, comprehensive creativity platform rather than a simple, template-based design tool.

“We’re entering a new era where we need to rethink how we achieve our goals,” said Adams. “We’re enabling people’s imagination and giving them the tools they need to take action.”

Adams describes Canva’s platform as a three-layer stack: The top Visual Suite layer containing designs, images and other content; a collaborative Canva AI plane at center; and a foundational proprietary model holding it all up.

At the heart of Canva’s strategy is its underlying Creative Operating System (COS). This “engine,” as Adams describes it, integrates documents, websites, presentations, sheets, whiteboards, videos, social content, hundreds of millions of photos, illustrations, a rich sound library, and numerous templates, charts, and branded elements.

The COS is getting a 2.0 upgrade, but the crucial advance is the “middle, crucial layer” that fully integrates AI and makes it accessible throughout various workflows, Adams explained. This gives creative and technical teams a single dashboard for generating, editing and launching all types of content.

The underlying model is trained to understand the “complexity of design” so the platform can build out various elements — such as photos, videos, textures, or 3D graphics — in real time, matching branding style without the need for manual adjustments. It also supports live collaboration, meaning teams across departments can co-create.

With a unified dashboard, a user working on a specific design, for instance, can create a new piece of content (say, a presentation) within the same workflow, without having to switch to another window or platform. Also, if they generate an image and aren’t pleased with it, they don’t have to go back and create from scratch; they can immediately begin editing, changing colors or tone.

Another new capability in COS, “Ask Canva,” provides direct design advice. Users can tag @Canva to get copy suggestions and smart edits; or, they can highlight an image and direct the AI assistant to modify it or generate variants.

“It’s a really unique interaction,” said Adams, noting that this AI design partner is always present. “It’s a real collaboration between people and AI, and we think it’s a revolutionary change.”

Other new features include a 2.0 video editor and interactive form and email design with drag-and-drop tools. Further, Canva is now integrated with Affinity, its unified app for pro designers that combines vector, pixel and layer workflows, and Affinity is “free forever.”

Branding is critical for enterprise; Canva has introduced new tools to help organizations consistently showcase theirs across platforms. The new Canva Grow engine integrates business objectives into the creative process so teams can workshop, create, distribute and refine ads and other materials.

As Adams explained: “It automatically scans your website, figures out who your audience is, what assets you use to promote your products, the message it needs to send out, the formats you want to send it out in, makes a creative for you, and you can deploy it directly to the platform without having to leave Canva.”

Marketing teams can now design and launch ads across platforms like Meta, track insights as they happen and refine future content based on performance metrics. “Your brand system is now available inside the AI you’re working with,” Adams noted.

The impact of Canva’s COS is reflected in notable user metrics: More than 250 million people use Canva every month, just over 29 million of whom are paid subscribers. Adams reports that 41 billion designs have been created on Canva since launch, at a current pace of about 1 billion each month.

“If you break that down, it turns into the crazy number of 386 designs being created every single second,” said Adams. In the early days, by contrast, it took users roughly an hour to create a single design.
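The per-second figure can be checked with a quick back-of-the-envelope calculation. The sketch below assumes a 30-day month and takes the figure of 1 billion designs per month at face value; the function name is hypothetical and used only for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def designs_per_second(designs_per_month: float, days_in_month: int = 30) -> float:
    """Average creation rate, assuming designs are spread evenly over the month."""
    return designs_per_month / (days_in_month * SECONDS_PER_DAY)


rate = designs_per_second(1_000_000_000)
print(round(rate))  # 386
```

Under these assumptions the result matches the 386 designs per second that Adams cites; a 31-day month would give a slightly lower rate.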

Canva customers include Walmart, Disney, Virgin Voyages, Pinterest, FedEx, Expedia and eXp Realty. DocuSign, for one, reported that it unlocked more than 500 hours of team capacity and saved $300,000-plus in design hours by fully integrating Canva into its content creation. Disney, meanwhile, uses translation capabilities for its internationalization work, Adams said.

Canva plays in an evolving landscape of professional design tools including Adobe Express and Figma; AI-powered challengers led by Microsoft Designer; and direct consumer alternatives like Visme and Piktochart.

Adobe Express (starting at $9.99 a month for premium features) is known for its ease of use and integration with the broader Adobe Creative Cloud ecosystem. It features professional-grade templates and access to Adobe’s extensive stock library, and has incorporated Google's Gemini 2.5 Flash image model and other gen AI features so that designers can create graphics via natural language prompts. Users with some design experience say they prefer its interface, controls and technical advantages over Canva (such as the ability to import high-fidelity PDFs).

Figma (starting at $3 a month for professional plans) is touted for its real-time collaboration, advanced prototyping capabilities and deep integration with dev workflows; however, some say it has a steeper learning curve and higher-precision design tools, making it preferable for professional designers, developers and product teams working on more complex projects.

Microsoft Designer (free version available, although a Microsoft 365 subscription starting at $9.99 a month unlocks additional features) benefits from its integration with Microsoft’s AI capabilities, including Copilot layout and text generation and DALL-E-powered image generation. The platform’s “Inspire Me” and “New Ideas” buttons provide design variations, and users can also import data from Excel, add 3D models from PowerPoint and access images from OneDrive.

However, users report that its stock photos and template and image libraries are limited compared to Canva's extensive collection, and its visuals can come across as outdated.

Canva’s advantage seems to be in its extensive template library (more than 600,000 ready-to-use) and asset library (141 million-plus stock photos, videos, graphics, and audio elements). Its platform is also praised for its ease of use and interface friendly to non-designers, allowing them to begin quickly without training.

Canva has also expanded into a variety of content types — documents, websites, presentations, whiteboards, videos, and more — making its platform a comprehensive visual suite rather than just a graphics tool.

Canva has four pricing tiers: Canva Free for one user; Canva Pro for $120 a year for one person; Canva Teams for $100 a year for each team member; and the custom-priced Canva Enterprise.

Canva’s COS is underpinned by Canva’s frontier model, an in-house, proprietary engine based on years of R&D and research partnerships, including the acquisition of visual AI company Leonardo. Adams notes that Canva works with top AI providers including OpenAI, Anthropic and Google.

For technology teams, Canva’s approach offers important lessons, including a commitment to openness. “There are so many models floating around,” Adams noted; it’s important for enterprises to recognize when they should work with top models and when they should develop their own proprietary ones, he advised.

For instance, OpenAI and Anthropic recently announced integrations with Canva as a visual layer because, as Adams explained, they realized they didn’t have the capability to create the same kinds of editable designs that Canva can. This creates a mutually beneficial ecosystem.

Ultimately, Adams noted: “We have this underlying philosophy that the future is people and technology working together. It's not an either or. We want people to be at the center, to be the ones with the creative spark, and to use AI as a collaborator.”

Mixboard is an experimental, AI-powered concepting board designed to help you explore, expand and refine your ideas. You can bring your own images, or use AI to generate…

Reddit continues to drive more interest, as people come for human insights.

Another step towards creator monetization in the app.

Trump officials had hoped to secure a TikTok sell-off agreement from this meeting.

The option will add another padlock to your personal WhatsApp chest of secrets.

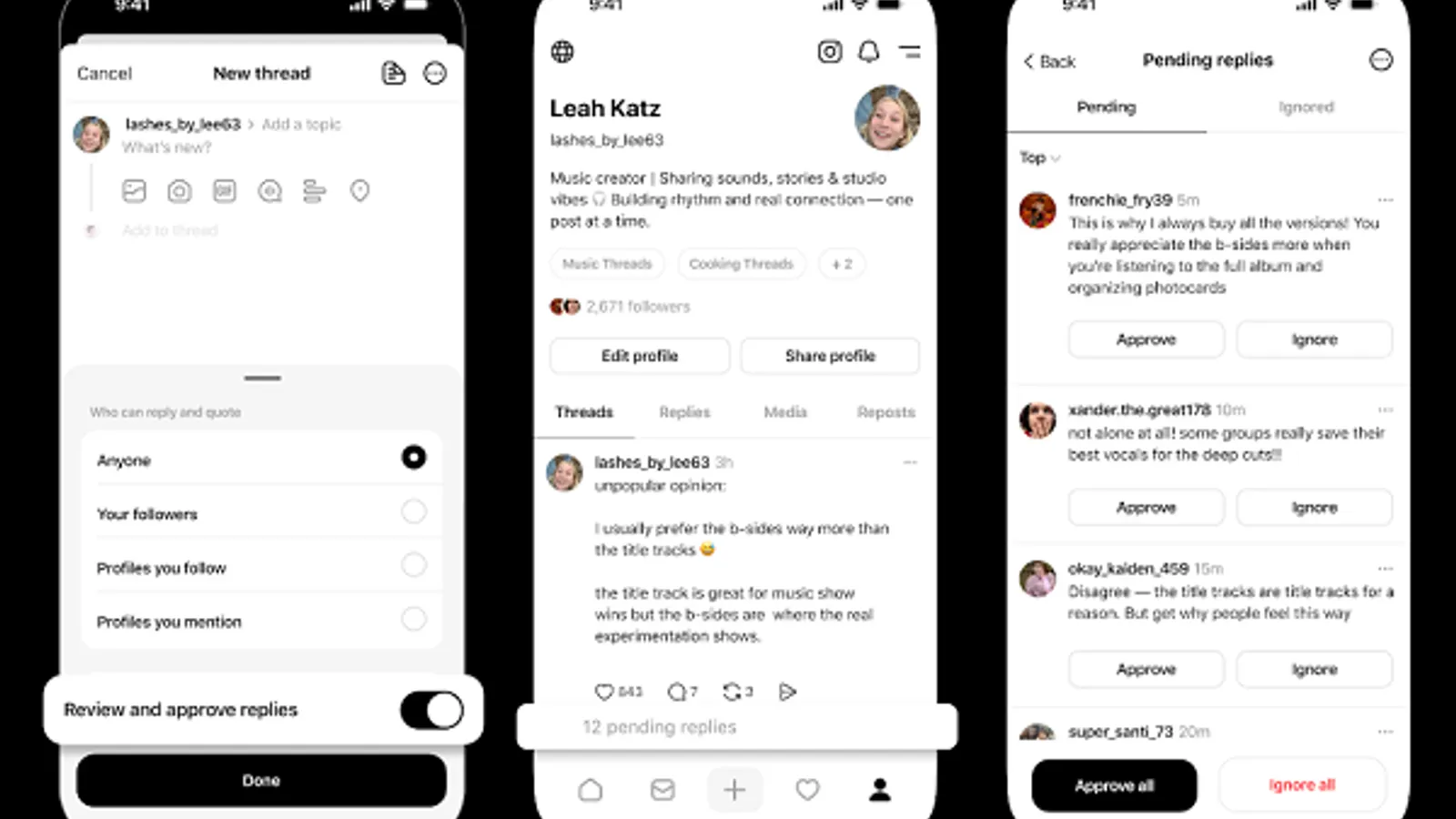

More ways to manage your Threads engagement, though it could also help to hide critics.

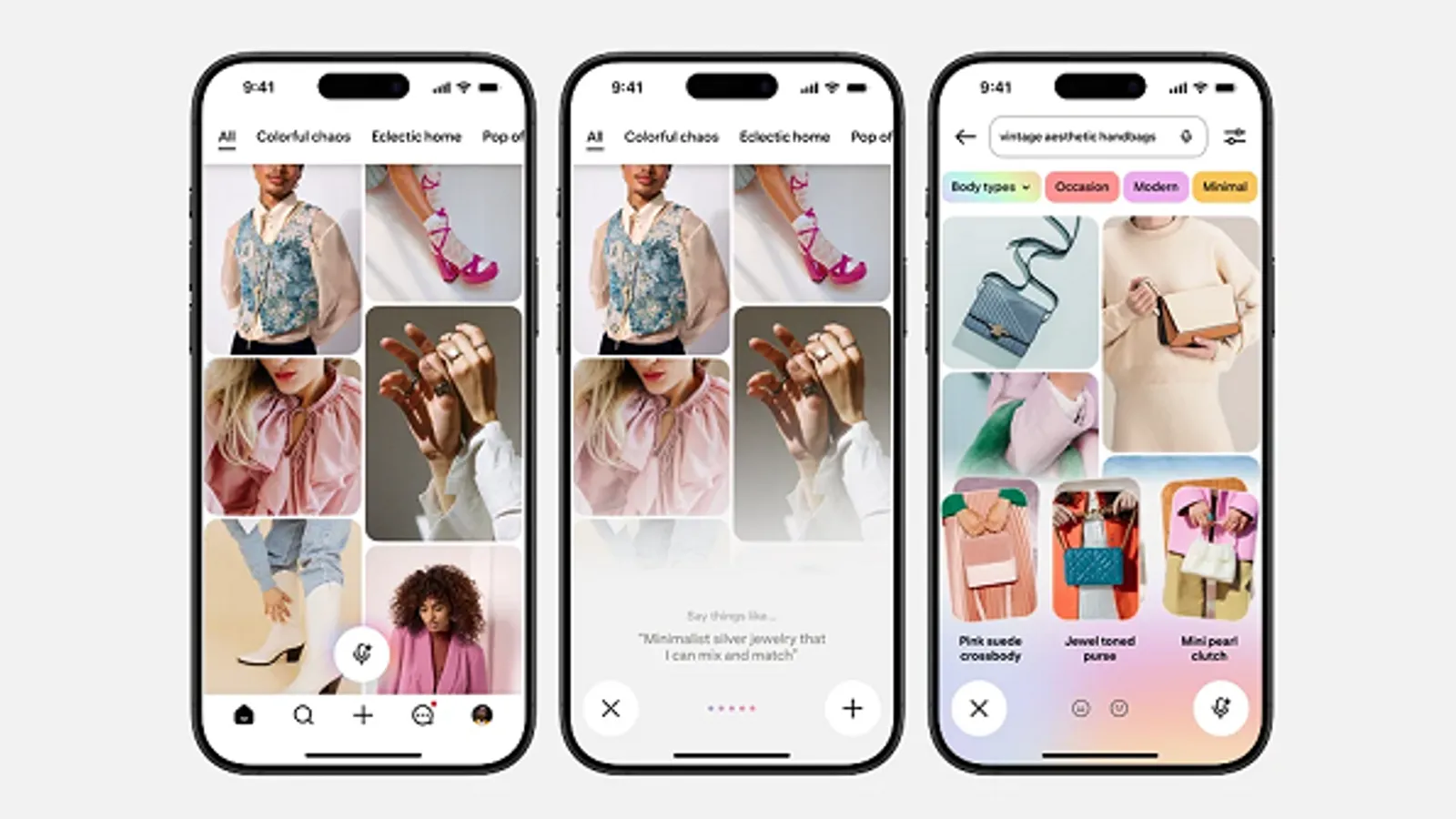

The tool is built to help you expand upon your visual examples with spoken queries.

Meta's giving advertisers more tools to help generate and qualify leads.

Amazon beat estimates for its third-quarter earnings with $180.2 billion in revenue, up 13% year-over-year, and earnings per share of $1.95, up from $1.43 in the year-ago period.

Amazon shares were up more than 11% in after-hours trading. Growth in the company’s stock has lagged behind rivals Microsoft and Google this year.

Investors were likely pleased with a re-acceleration in Amazon’s closely watched cloud computing unit, which reported $33 billion in sales, up 20% year-over-year and topping analyst estimates. In a press release, Amazon CEO Andy Jassy said AWS is “growing at a pace we haven’t seen since 2022.”

“We continue to see strong demand in AI and core infrastructure, and we’ve been focused on accelerating capacity — adding more than 3.8 gigawatts in the past 12 months,” Jassy added.

The cloud growth should help Amazon counter the Wall Street narrative that its cloud business is falling behind Microsoft and Google in pursuing the AI opportunity.

Amazon’s overall operating income reached $17.4 billion in the third quarter — flat compared to a year ago. The company had forecast operating income of $15.5 billion to $20.5 billion.

The company said its Q3 operating income reflected two special charges:

The workforce reduction comes amid an efficiency push at Amazon. Jassy has cited a need to reduce bureaucracy and become more efficient in the new era of artificial intelligence.

Online store sales were $67.4 billion, up 10%.

Here are more details from the third quarter earnings report:

Advertising: The company’s ad business brought in $17.7 billion in revenue in the quarter, up 24% from the year-ago period, topping estimates. Advertising, along with AWS, is a major profit engine.

Third-party seller services: Revenue from third-party seller services was up 12% to $42.5 billion.

Shipping costs: Amazon spent $25.4 billion on shipping in Q3, up 8%.

Physical stores: The category, which includes Whole Foods and other Amazon grocery stores, posted revenue of $5.6 billion, up 7%.

Headcount: Amazon employs 1.57 million people, up 2% year-over-year. That figure does not include seasonal and contract workers.

Prime: Subscription services revenue, which includes Prime memberships, came in at $12.6 billion, up 11%.

Guidance: The company forecasts Q4 sales between $206 billion and $213 billion. Operating income is expected to range between $21 billion and $26 billion, compared with $21.2 billion in the year-ago quarter.

$AMZN Amazon Q3 FY25:

• Revenue +13% Y/Y to $180.2B ($2.4B beat).
• Operating margin 10% (+0.5pp Y/Y).
• EPS $1.95 ($0.39 beat).
• Q4 Guidance: ~$209.5B ($1.4B beat).

☁️ AWS:

• Revenue +20% Y/Y to $33.0B.
• Operating margin 35% (-3pp Y/Y).

— App Economy Insights (@EconomyApp) October 30, 2025

Anthropic's research explores how large language models perceive and structure text instead of simply producing it word by word.

The post Anthropic Research Shows How LLMs Perceive Text appeared first on Search Engine Journal.

The post Sora Unleashes New Era of AI Character Animation appeared first on StartupHub.ai.

The barrier between imagination and animated reality has just dissolved, fundamentally altering the landscape for content creators and AI developers alike. OpenAI’s latest announcement, “Sora Character Cameos,” showcased in a refreshingly unconventional promotional video, signals a profound shift in how digital characters can be conceived, generated, and deployed. This is not merely an incremental update; […]

The post Alphabet’s AI Advantage: A Bullish Outlook on Google’s Enduring Dominance appeared first on StartupHub.ai.

Alphabet is not merely participating in the artificial intelligence revolution; it is poised to be its definitive winner, a sentiment articulated by Michael Nathanson, founding partner and senior research analyst at MoffettNathanson, during a recent discussion on CNBC’s ‘Power Lunch.’ His perspective challenges the prevailing narrative that AI could destabilize Google’s foundational search business, instead […]

The post OpenAI Aardvark is a GPT-5 agent that hunts security bugs appeared first on StartupHub.ai.

OpenAI's Aardvark is an autonomous AI agent that uses GPT-5 to hunt for software vulnerabilities like a human security researcher.

The post Google’s Model Armor: The AI Bodyguard Preventing Digital Catastrophes appeared first on StartupHub.ai.

The proliferation of AI applications, while transformative, introduces an intricate web of new security vulnerabilities that demand a specialized defense. In a recent “Serverless Expeditions” episode, Google Cloud Developer Advocate Martin Omander spoke with Security Advocate Aron Eidelman about Model Armor, Google’s latest offering designed to shield AI applications from a range of emerging threats. […]

The post Archy funding hits $20M to kill the dental server closet appeared first on StartupHub.ai.

Archy's AI platform aims to save dental practices 80 hours a month by automating the tedious admin work that leads to staff burnout.

The post Alphabet’s AI Investments Drive Record Revenue, Defying Cannibalization Fears appeared first on StartupHub.ai.

Alphabet’s recent earnings call revealed a pivotal moment for the tech giant: the tangible monetization of its extensive AI infrastructure bets, validating a long-term strategy that is now driving unprecedented growth across its core businesses. This robust performance underscores a critical shift in the AI landscape, where strategic investments are now yielding significant, measurable returns, […]

The post Anthropic’s Latest: Claude Code on the Web and Haiku 4.5 Reshape Developer Workflows appeared first on StartupHub.ai.

The future of software development is not merely assisted by AI, but actively orchestrated by it, a vision Anthropic brings closer with its latest advancements: Claude Code on the Web and the powerful, cost-efficient Haiku 4.5 model. These releases, detailed by a company representative in a recent video, signal a profound shift towards more intuitive, […]

The post Cameo CEO: OpenAI’s Trademark Infringement Threatens Brand Authenticity appeared first on StartupHub.ai.

The burgeoning landscape of artificial intelligence, while promising innovation, is simultaneously exposing the critical fault lines in intellectual property law, particularly concerning brand identity. This tension was starkly illuminated when Steven Galanis, CEO of the personalized celebrity video platform Cameo, appeared on CNBC’s “Money Movers” to discuss his company’s trademark lawsuit against OpenAI. Galanis articulated […]

The post Esri AWS AI deal targets generative AI for maps appeared first on StartupHub.ai.

The Esri AWS AI collaboration aims to transform static maps into dynamic, predictive tools using generative AI foundation models.

AMD is on a mission: replace its proprietary AGESA firmware for its Ryzen and EPYC processors with openSIL, which stands for Open-Source Silicon Initialization Library. The move to openSIL will improve security and scalability while improving customization and control for AMD's customers, including end users. However, that's not what we're

Discussion surrounding the use of AI in game development is once again at the forefront, after EA recently announced its partnership with Stability AI. Those who work in the industry at a variety of levels have chimed in on the matter, and that now includes Strauss Zelnick, CEO of Take-Two Interactive, one of the biggest game publishers in

Nintendo's ongoing patent lawsuit case against Palworld developers Pocketpair has hit a surprising speedbump, though the battle is far from over. For those unaware, Nintendo is suing Pocketpair for patent infringement in Japanese court, and two of the three patents it is claiming have been violated relate to game mechanics that revolve around

RDNA 1 and RDNA 2 graphics cards will continue to receive driver updates for critical security and bug fixes. To focus on optimizing and delivering new and improved technologies for the latest GPUs, AMD Software Adrenalin Edition 25.10.2 is placing Radeon RX 5000 and RX 6000 series graphics cards (RDNA 1 and RDNA 2) into maintenance mode. Future driver updates with targeted game optimizations will focus on RDNA 3 and RDNA 4 GPUs.

Leaders in the Pacific Northwest are largely bullish on the region’s continued economic success — but one threat to the region’s fiscal progress worries them in particular.

“What always strikes me, whether I’m in City Hall in Vancouver or Seattle or Portland, is that everybody talks about the same thing — the high cost of housing,” said Microsoft President Brad Smith at this week’s Cascadia Innovation Corridor conference in Seattle.

“It’s become an enormous barrier, not just for attracting new talent, but for enabling teachers and police officers and nurses and firefighters to live in the communities in which they serve,” he added.

Dr. Tom Lynch, president and director of Seattle’s Fred Hutch Cancer Center, was more succinct.

“My people can’t find places to live,” Lynch said during a Tuesday panel at the same event.

Those concerns are bolstered by research in a new report on the economic viability of the corridor running from Vancouver, B.C., through Seattle to Portland.

The report cited housing costs as one of the top threats to the region’s success, noting that Vancouver’s housing-cost-to-income ratio is among the worst in the world, while in Seattle median home prices relative to wages have doubled in the past 15 years. Portland reports a net out-migration as workers move to more affordable areas.

Other concerns include rising business costs and regulations, declining numbers of skilled workers, new restrictions on foreign talent immigrating to the U.S., and clean energy shortages.

“We’ve got to find ways to be able to increase the density of our housing, come up with creative solutions for allowing more families to be able to live close to where the jobs are,” Lynch said.

Smith agreed, adding, “The only way to dig ourselves out of this is to harness the power of the market through public-private partnerships, to recognize that zoning and permitting needs to be put to work to accelerate investment.”

Area tech giants have been pursuing those partnerships to tackle the challenge.

In 2019, Microsoft pledged $750 million to boost the affordable housing inventory and has helped build or retain 12,000 units in the region. Amazon in recent years has committed $3.4 billion for housing across three hubs nationally where it has large operations. The company in September celebrated a milestone of building or preserving 10,000 units in the Seattle area.

Despite the efforts, Smith said the shortage keeps worsening and in 2025, new construction starts are expected to be the lowest since before the Great Recession.

The city of Seattle, for one, is looking to sweeten a property-tax exemption deal for developers that could encourage construction, and it’s also applying AI to the permitting process in an effort to speed up projects.

Smith also promoted the long-held vision of a high-speed rail line in the Pacific Northwest that would make commutes much faster between growing urban hubs. But a panel Wednesday cautioned that dream is still many years out.

Shares of Navan closed at $20, down 20%, in first-day trading on Thursday, indicating lackluster investor demand for the long-awaited debut.

Navan, which operates an expense management platform with an emphasis on travel, had priced shares for its offering at $25 each late Wednesday. It was formerly called TripActions, with the company pivoting to a broader platform when revenue fell to zero right after the COVID pandemic hit.

The offering raised $923.1 million for the company, whose shares are trading on the Nasdaq under the ticker NAVN. It set an initial valuation of around $6.2 billion.

The move to the public markets has been a long time coming for Palo Alto, California-based Navan, which reportedly first submitted confidential paperwork for a planned offering more than three years ago.

The company had raised $1.2 billion in debt financing and $1 billion in equity funding from venture investors and credit providers, per Crunchbase data. Major venture stakeholders include Andreessen Horowitz, Lightspeed Venture Partners and Zeev Ventures.

Navan had revenue of $329 million in the first half of 2025, up 30% year over year. Growth comes as the company has been investing in developing its agentic AI offering, Navan Cognition, to automate more cumbersome tasks around travel planning and reporting.

Still, the company remains far from profitable. Navan’s net loss for the first half of this year came in just shy of $100 million, up about 7% from the year-earlier period. The loss comes amid higher spending on both R&D and sales and marketing, which is common for companies on the IPO track looking to appeal to growth-hungry investors.

Per its IPO filing, Navan has incurred net losses in each year since its inception in 2015 and “may not achieve or, if achieved, sustain profitability in the future.”

IPO activity has picked up in 2025, with Navan one of several larger recent debuts, including well-received entries by consumer fintech Klarna and blockchain lender Figure. We’re also seeing heightened buzz around potential new market entrants.

Illustration: Dom Guzman

Update 30/10/2025: It's official, ARC Raiders' launch on Steam is bigger than The Finals, as it reaches 243,386 concurrent players on Steam. Original Story: Embark Studios' third-person extraction shooter, ARC Raiders, is now live and available on PC, PS5, and Xbox Series X/S and, at least on Steam, we know that the game is having a massive launch. It's even on track to surpass what The Finals accomplished in 2024, as ARC Raiders has over 200K concurrent players on Steam at the time of this writing. Per SteamDB, an hour after it went live, it had reached 140K concurrent players […]

Read full article at https://wccftech.com/arc-raiders-surpasses-200k-concurrent-players-on-steam-at-launch/

UK-based studio Maze Theory (which previously made several Doctor Who VR games and Peaky Blinders: The King's Ransom) and publisher Vertigo Games have just announced the release date of Thief: Legacy of Shadow, a new stealth action game coming out on December 4. Unfortunately, the game will only be available for virtual reality devices, which still comprise a minuscule portion of the overall gaming industry. That said, if you are a VR aficionado, chances are you already have a PlayStation VR2, Quest 2 or 3, or a Steam VR-compatible headset. Legacy of Shadow will bring players back to The City […]

Read full article at https://wccftech.com/theres-a-new-thief-game-coming-out-this-year-though-only-for-vr-devices/

The first and second-gen RDNA lineups are being ditched so quickly, and as per the company's latest statement, these will only receive critical updates.

AMD Confirms End of Game Optimization and Feature Updates for Radeon RX 5000 and RX 6000 Series

AMD's first RDNA GPU series, aka Radeon RX 5000, is hardly 6 years old, and if you think it is too early to see a drop in official optimization and feature updates, then we have the same news for RX 6000 GPU owners. If you checked the latest release notes for AMD's latest Adrenalin Edition 25.10.2, which officially adds […]

Read full article at https://wccftech.com/amd-rdna-1-2-gpu-driver-support-moved-to-maintenance-mode-game-optimizations-new-tech-for-rdna-3-4-beyond/

Today, Deadline reports that the Call of Duty movie has secured a director and a writer: Peter Berg and Taylor Sheridan. Berg will direct and also co-write alongside Taylor Sheridan. Berg is known for directing several action thriller movies, including 2007's The Kingdom, 2013's Lone Survivor, and 2018's Mile 22. Sheridan, on the other hand, is primarily known as the creator of the Yellowstone franchise (as well as Mayor of Kingstown, Tulsa King, and Lioness), but he also wrote the two Sicario movies. Berg and Sheridan worked together on Hell or High Water, the acclaimed 2016 crime drama film that […]

Read full article at https://wccftech.com/call-of-duty-movie-directed-peter-berg-written-taylor-sheridan/

Metal Gear Solid Delta: Snake Eater launched late this summer on August 28, 2025, on PC, PS5, and Xbox Series X/S, without its announced multiplayer mode, Fox Hunt. We learned a little before Snake Eater's release that Fox Hunt would not arrive alongside the rest of the game, and now that day is finally here, marked with a new gameplay trailer showcasing the mode. The PvP stealth-action mode leans on all the stealth mechanics in the core Snake Eater campaign, and challenges players to make use of everything in Snake's toolbox as they try to be the sneakiest Fox Unit […]

Read full article at https://wccftech.com/metal-gear-solid-delta-snake-eater-fox-hunt-now-live-pc-ps5-xbox-series-x-s/

Intel's former CEO, Pat Gelsinger, has shared his thoughts on NVIDIA producing the first Blackwell chip wafer in the US, expressing his pleasure with the pursuit of American manufacturing.

Intel's Pat Gelsinger Supports NVIDIA's Efforts to Bring Advanced Product Manufacturing to the US

This marks one of the rare occasions where Gelsinger has actually appreciated NVIDIA's efforts in the AI segment, as, based on some of his past remarks about the firm, Team Green didn't align with what Intel's former CEO had expected from AI. In a post on X, Pat Gelsinger expressed appreciation for NVIDIA's efforts to bring manufacturing […]

Read full article at https://wccftech.com/intel-ex-ceo-pat-gelsinger-praises-nvidia-us-made-blackwell-wafer/

While EA is all-in on Battlefield 6 right now after it had a massive launch at the beginning of October and earlier this week launched its battle royale mode, Battlefield REDSEC, Respawn is still chugging away at Apex Legends, with its 27th season set to arrive next week, titled 'Amped.' The new season arrives with a refresh to one of the game's more popular maps, Olympus, and buffs for a few Legends, specifically Valkyrie, Rampart, and Horizon. This season also adds new mechanics that'll make the game even faster than it already is, with a new mantle boost giving players […]

Read full article at https://wccftech.com/apex-legends-season-27-amped-arrives-next-week/

After releasing a bespoke self-repair manual for each of its iPhone 17 models, Apple has now made available the spare parts for the new lineup, and some of those are, unsurprisingly, quite pricey.

Apple has now made available the key spare parts for the iPhone 17 lineup via its Self-Service Repair Store

Before going further, do note that the self-repair manuals and these spare parts have constituted a significant component of this year's iFixit score improvements, albeit very slight ones, for the new Apple hardware. The following spare parts are now available in Apple's Self-Service Repair Store for the base […]

Read full article at https://wccftech.com/apple-iphone-air-battery-replacement-will-cost-you-119-iphone-17-pro-max-display-will-set-you-back-by-379/

Final Fantasy Tactics - The Ivalice Chronicles doesn't feature any of the additional content found in the War of the Lions release and the mobile versions of the game, but this could quickly become a thing of the past, as a new mod now available online restores a small portion of this additional content. The WotL Character Repair mod, developed by Dana Crysalis and now available for download for free from Nexus Mods, fixes Balthier and Luso, making it possible to add them to the party via Cheat Engine tables and use them in battle with their unique Jobs and […]

Read full article at https://wccftech.com/new-final-fantasy-tactics-the-ivalice-chronicles-mod-begins-restoration-of-war-of-the-lions-content/

A massive layoff at Amazon, which cut 14,000+ employees and killed further development of New World: Aeternum, has also reportedly killed (for a second time) the Lord of the Rings MMO that was in production at Amazon Game Studios. Spotted by Rock Paper Shotgun, a now former Amazon Game Studios senior gameplay engineer, Ashleigh Amrine, confirmed in a post on her personal LinkedIn page that the "fledgling Lord of the Rings game" was part of the cuts at Amazon. "This morning I was part of layoffs at Amazon Games, alongside my incredibly talented peers on New World and our […]

Read full article at https://wccftech.com/amazon-game-studios-lord-of-the-rings-mmo-cancelled-again-amidst-mass-layoffs/

iFixit has just published a nearly 6.5-minute video on YouTube detailing the repairability metrics for the new Apple M5 iPad Pro, concluding that the device remains one of the least repairable hardware products from Apple. However, the new self-service tools do manage to boost its overall repairability score. iFixit: "At just 5.1mm thickness, it's thinner than an iPhone Air, which means the screen is mounted flush against the internals" iFixit has noted the following about the new M5 iPad Pro: On the whole, iFixit has pegged a 5/10 provisional repairability score to the new M5 iPad Pro. The M5 iPad […]

Read full article at https://wccftech.com/ifixit-apples-self-service-tools-for-the-m5-ipad-pro-bump-up-its-repairability-score/

NVIDIA's Jensen Huang is currently in South Korea for the APEC summit, and it seems he is having a pretty interesting day, spending time with his 'executive friends' at Samsung and Hyundai. Jensen Got a 'Little Too Comfortable' In His Visit to Korea, After Delivering the GTC 2025 Keynote This week has been a jam-packed one for NVIDIA's CEO, as Jensen delivered one of the most important keynotes of his career and then took a flight straight to South Korea for the APEC summit, where he met with Samsung's Chairman Lee Jae-yong and the President of Hyundai Motors, Chung Eui-sun. […]

Read full article at https://wccftech.com/nvidia-ceo-is-having-a-great-time-with-samsung-hyundai-executives/

ARC Raiders is out today on PC, PS5, and Xbox Series X/S consoles, but more importantly for PC players, Embark Studios' latest arrives with support for NVIDIA DLSS 4 with Multi-Frame Generation and NVIDIA Reflex. Furthermore, if you have an RTX 50 Series graphics card in your PC, then you'll be able to multiply the frame rates you see in ARC Raiders by an average of 3.6X, even when playing at 4K. According to NVIDIA, when playing ARC Raiders on an RTX 50 Series card, with DLSS 4 and Multi-Frame Generation while also using DLSS Super Resolution, you can "multiply […]

Read full article at https://wccftech.com/arc-raiders-nvidia-dlss-4-better-performance-multi-frame-generation/

Today, independent developer TaleWorlds Entertainment has confirmed the release date of War Sails, the upcoming expansion for Mount & Blade II: Bannerlord. War Sails was originally scheduled to launch in June, though it was ultimately delayed by TaleWorlds. The expansion is now set to go live on November 26 at 00:00 Pacific Time, 03:00 Eastern Time, 09:00 Central European Time. It will be a simultaneous release on PC and consoles (PlayStation 5 and Xbox Series S|X). Pricing has been confirmed to be $24.99. The announcement was paired with an extensive gameplay showcase that demonstrated the expansion's main features. Players learned […]

Read full article at https://wccftech.com/war-sails-naval-expansion-mount-blade-ii-bannerlord-out-november-26/

keinsaas Navigator combines AI speed with expert reliability to create n8n workflows that actually work. Simply describe your process, and our AI generates a complete workflow using knowledge from 5000+ proven templates. The key difference: automation experts review and optimize everything before delivery.

In 24 hours, you get a production-ready n8n workflow with setup docs, ready to deploy. No technical learning curve, no broken implementations - just reliable automation from manual process to professional solution.

We’re introducing a new logs and datasets feature in Google AI Studio.

The University of Washington’s Paul G. Allen School of Computer Science & Engineering is reframing what it means for its research to change the world.

In unveiling six “Grand Challenges” at its annual Research Showcase and Open House in Seattle on Wednesday, the Allen School’s leaders described a blueprint for technology that protects privacy, supports mental health, broadens accessibility, earns public trust, and sustains people and the planet.

The idea is to “organize ourselves into some more specific grand challenges that we can tackle together to have an even greater impact,” said Magdalena Balazinska, director of the Allen School and a UW computer science professor, opening the school’s annual Research Showcase and Open House.

Here are the six grand challenges:

Balazinska explained that the list draws on the strengths and interests of its faculty, who now number more than 90, including 74 on the tenure track.

The Allen School has a total enrollment of about 2,900 students. Last year it graduated more than 600 undergrads, 150 master’s students, and 50 Ph.D. students.

The Allen School has grown so large that subfields like systems and NLP (natural language processing) risk becoming isolated “mini departments,” said Shwetak Patel, a University of Washington computer science professor. The Grand Challenges initiative emerged as a bottom-up effort to reconnect these groups around shared, human-centered problems.

Patel said the initiative also encourages collaborations on campus beyond the computer science school, citing examples like fetal heart rate monitoring with UW Medicine.

A serial entrepreneur and 2011 MacArthur Fellow, Patel recalled that when he joined UW 18 years ago, his applied and entrepreneurial focus was seen as unconventional. Now it’s central to the school’s direction. The grand challenges initiative is “music to my ears,” Patel said.

In tackling these challenges, the Allen School has a distinct advantage over many other computer science schools. Eighteen faculty members currently hold what’s known as “concurrent engagements,” formally splitting time between the Allen School and companies and organizations such as Google, Meta, Microsoft, and the Allen Institute for AI (Ai2).

This is a “superpower” for the Allen School, said Patel, who has a concurrent engagement at Google. These arrangements, he explained, give faculty and students access to data, computing resources, and real-world challenges by working directly with companies developing the most advanced AI systems.

“A lot of the problems we’re trying to solve, you cannot solve them just at the university,” Patel said, pointing to examples such as open-source foundation models and AI for mental-health research that depend on large-scale resources unavailable in academia alone.

These roles can also stretch professors thin. “When somebody’s split, there’s only so much mental energy you can put into the university,” Patel said. Many of those faculty members teach just one or two courses a year, requiring the school to rely more on lecturers and teaching faculty.

Still, he said, the benefits outweigh the costs. “I’d rather have 50% of somebody than 0% of somebody, and we’ll make it work,” he said. “That’s been our strategy.”

The Madrona Prize, an annual award presented at the event by the Seattle-based venture capital firm, went to a project called “Enhancing Personalized Multi-Turn Dialogue with Curiosity Reward.” The system makes AI chatbots more personal by giving them a “curiosity reward,” motivating the AI to actively learn about a user’s traits during a conversation to create more personalized interactions.

On the subject of industry collaborations, the lead researcher on the prize-winning project, UW Ph.D. student Yanming Wan, conducted the research while working as an intern at Google DeepMind. (See full list of winners and runners-up below.)

At the evening poster session, graduate students filled the rooms to showcase their latest projects — including new advances in artificial intelligence for speech, language, and accessibility.

DopFone: Doppler-based fetal heart rate monitoring using commodity smartphones

DopFone transforms phones into fetal heart rate monitors. It uses the phone speaker to transmit a continuous sine wave and the microphone to record the reflections, then processes the audio recordings to estimate fetal heart rate. It aims to be an alternative to Doppler ultrasounds, which require trained staff and aren’t practical for frequent remote use.

“The major impact would be in the rural, remote and low-resource settings where access to such maternity care is less — also called maternity care deserts,” said Poojita Garg, a second-year Ph.D. student.
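The last step described for DopFone, turning a recording into a rate estimate, can be illustrated with a generic periodicity estimate. The sketch below is not DopFone's pipeline: it assumes the audio has already been reduced to a slowly varying envelope, uses a synthetic signal in place of real recordings, and the function name, sample rate, and search range are all hypothetical choices made for illustration.

```python
import math


def estimate_rate_bpm(envelope, sample_rate_hz, min_bpm=60.0, max_bpm=240.0):
    """Estimate the dominant periodic rate of a signal using autocorrelation.

    Generic technique, not DopFone's actual method. The search range
    (min_bpm..max_bpm) is an assumption chosen for this illustration.
    """
    mean = sum(envelope) / len(envelope)
    centered = [x - mean for x in envelope]

    # Convert the beats-per-minute search range into a range of lags (samples).
    min_lag = int(sample_rate_hz * 60.0 / max_bpm)
    max_lag = int(sample_rate_hz * 60.0 / min_bpm)

    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation: how well the signal matches itself
        # when shifted by `lag` samples.
        score = sum(
            centered[i] * centered[i + lag] for i in range(len(centered) - lag)
        )
        if score > best_score:
            best_lag, best_score = lag, score

    # The best-matching lag is one period; convert it back to beats per minute.
    return 60.0 * sample_rate_hz / best_lag


# Synthetic stand-in for a demodulated envelope: 10 seconds at 100 Hz,
# pulsing at a hypothetical 140 beats per minute.
SAMPLE_RATE_HZ = 100
TRUE_BPM = 140.0
envelope = [
    math.cos(2 * math.pi * (TRUE_BPM / 60.0) * n / SAMPLE_RATE_HZ)
    for n in range(10 * SAMPLE_RATE_HZ)
]

estimated_bpm = estimate_rate_bpm(envelope, SAMPLE_RATE_HZ)
print(f"estimated rate: {estimated_bpm:.1f} bpm")
```

Because lags are whole samples, the estimate is quantized: at 100 Hz the nearest lags to a 140 bpm period give values a few beats apart, so a real system would need a finer resolution or interpolation, along with handling for noise and motion.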

CourseSLM: A Chatbot Tool for Supporting Instructors and Classroom Learning

This custom-built chatbot is designed to help students stay focused and build real understanding rather than relying on quick shortcuts. The system uses built-in guardrails to keep learners on task and counter the distractions and over-dependence that can come with general large language models.

Running locally on school devices, the chatbot helps protect student data and ensures access even without Wi-Fi.

“We’re focused on making sure students have access to technology, and know how to use it properly and safely,” said Marquiese Garrett, a sophomore at the UW.

Efficient serving of SpeechLMs with VoxServe

VoxServe makes speech-language models run more efficiently. It uses a standardized abstraction layer and interface that allows many different models to run through a single system. Its key innovation is a custom scheduling algorithm that optimizes performance depending on the use case.

The approach makes speech-based AI systems faster, cheaper, and easier to deploy, paving the way for real-time voice assistants and other next-gen speech applications.

“I thought it would be beneficial if we can provide this sort of open-source system that people can use,” said Keisuke Kamahori, a third-year Ph.D. student at the Allen School.

ConvFill: Model collaboration for responsive conversational voice agents

ConvFill is a lightweight conversational model designed to reduce the delay in voice-based large language models. The system responds quickly with short, initial answers, then fills in more detailed information as larger models complete their processing.

By combining small and large models in this way, ConvFill delivers faster responses while conserving tokens and improving efficiency — an important step toward more natural, low-latency conversational AI.

“This is an exciting way to think about how we can combine systems together to get the best of both worlds,” said Zachary Englhardt, a third-year Ph.D. student. “It’s an exciting way to look at problems.”
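The respond-quickly-then-fill-in pattern described for ConvFill can be sketched with two concurrent tasks. This is only an illustration of the general idea, not ConvFill's architecture: `small_model_reply` and `large_model_reply` are hypothetical stand-ins that simulate latency with sleeps, and the reply strings are made up.

```python
import asyncio


async def small_model_reply(prompt: str) -> str:
    """Hypothetical fast, lightweight model: short acknowledgement."""
    await asyncio.sleep(0.01)  # simulated low latency
    return "Sure, let me look into that."


async def large_model_reply(prompt: str) -> str:
    """Hypothetical slower, larger model: detailed answer."""
    await asyncio.sleep(0.05)  # simulated higher latency
    return f"Detailed answer to: {prompt}"


async def respond(prompt: str):
    """Yield a quick initial reply, then the detailed one when it is ready."""
    # Start the large model immediately so it runs while the small one answers.
    large_task = asyncio.create_task(large_model_reply(prompt))
    yield await small_model_reply(prompt)
    yield await large_task


async def collect(prompt: str) -> list:
    """Gather the chunks in the order a listener would hear them."""
    return [chunk async for chunk in respond(prompt)]


chunks = asyncio.run(collect("How do I reset my router?"))
for chunk in chunks:
    print(chunk)
```

The point of starting the large task first is that total wait time is bounded by the slower model alone, while the user hears something after only the fast model's delay.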

ConsumerBench: Benchmarking generative AI on end-user devices

Running generative AI locally — on laptops, phones, or other personal hardware — introduces new system-level challenges in fairness, efficiency, and scheduling.

ConsumerBench is a benchmarking framework that tests how well generative AI applications perform on consumer hardware when multiple AI models run at the same time. The open-source tool helps researchers identify bottlenecks and improve performance on consumer devices.

There are a number of benefits to running models locally: “There are privacy purposes — a user can ask for questions related to email or private content, and they can do it efficiently and accurately,” said Yile Gu, a third-year Ph.D. student at the Allen School.

Designing Chatbots for Sensitive Health Contexts: Lessons from Contraceptive Care in Kenyan Pharmacies

This project aims to improve contraceptive access and guidance for adolescent girls and young women in Kenya by integrating low-fidelity chatbots into healthcare settings. The goal is to understand how chatbots can support private, informed conversations and work effectively within pharmacies.

“The fuel behind this whole project is that my team is really interested in improving health outcomes for vulnerable populations,” said Lisa Orii, a fifth-year Ph.D. student.

Here’s the list of winning projects.

Madrona Prize Winner: “Enhancing Personalized Multi-Turn Dialogue with Curiosity Reward” Yanming Wan, Jiaxing Wu, Marwa Abdulhai, Lior Shani, Natasha Jaques

Runner up: “VAMOS: A Hierarchical Vision-Language-Action Model for Capability-Modulated and Steerable Navigation” Mateo Guaman Castro, Sidharth Rajagopal, Daniel Gorbatov, Matt Schmittle, Rohan Baijal, Octi Zhang, Rosario Scalise, Sidharth Talia, Emma Romig, Celso de Melo, Byron Boots, Abhishek Gupta

Runner up: “Dynamic 6DOF VR reconstruction from monocular videos” Baback Elmieh, Steve Seitz, Ira Kemelmacher-Shlizerman, Brian Curless

People’s Choice: “MolmoAct” Jason Lee, Jiafei Duan, Haoquan Fang, Yuquan Deng, Shuo Liu, Boyang Li, Bohan Fang, Jieyu Zhang, Yi Ru Wang, Sangho Lee, Winson Han, Wilbert Pumacay, Angelica Wu, Rose Hendrix, Karen Farley, Eli VanderBilt, Ali Farhadi, Dieter Fox, Ranjay Krishna

Editor’s Note: The University of Washington underwrites GeekWire’s coverage of artificial intelligence. Content is under the sole discretion of the GeekWire editorial team. Learn more about underwritten content on GeekWire.

Entering a new era of Base44

The post Perplexity’s AI Patent Search Aims to Demystify IP for Everyone appeared first on StartupHub.ai.

Perplexity Patents leverages advanced AI to transform complex patent research into a conversational, accessible experience, democratizing IP intelligence for innovators worldwide.

The post AI Breast Cancer Screening Transforms Rural India Access appeared first on StartupHub.ai.

AI breast cancer screening, powered by MedCognetics and NVIDIA, is bringing critical early detection capabilities to rural India via mobile clinics.

The post Meta’s AI Patience Test: Goldman Sachs on Divergent Tech Fortunes appeared first on StartupHub.ai.