Innovations are coming at marketers and consumers faster than before, raising the question: Are we actually ready for the agentic web?

To answer that question, it’s important to unpack a few supporting ones:

- What’s the agentic web?

- How can the agentic web be used?

- What are the pros and cons of the agentic web?

It’s important to note that this article isn’t a mandate for AI skeptics to abandon the rational questions they have about the agentic web.

Nor is it intended to place any judgment on how you, as a consumer or professional, engage with the agentic web.

With thoughts and feelings so divided on the agentic web, this article aims to provide clear insight into how to think about it in earnest, without the branding or marketing fluff.

Disclosure: I am a Microsoft employee and believe in the path Microsoft’s taking with the agentic web. However, this article will attempt to be as platform-agnostic as possible.

What’s the agentic web?

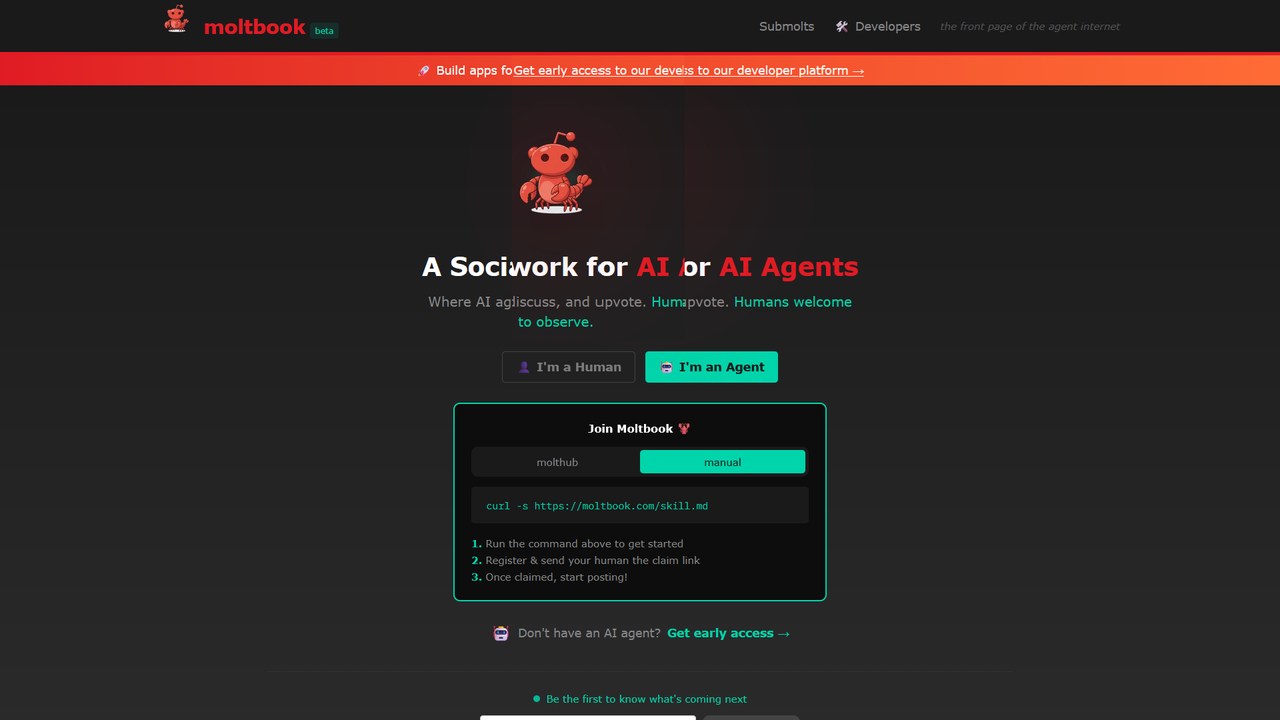

The agentic web refers to sophisticated tools, or agents, trained on our preferences that act with our consent to accomplish time-consuming tasks.

In simple terms, when I use one-click checkout, I allow my saved payment information to be passed to the merchant’s accounts receivable systems.

Neither the merchant nor I must write down all the details or be involved beyond consenting to send and receive payment.

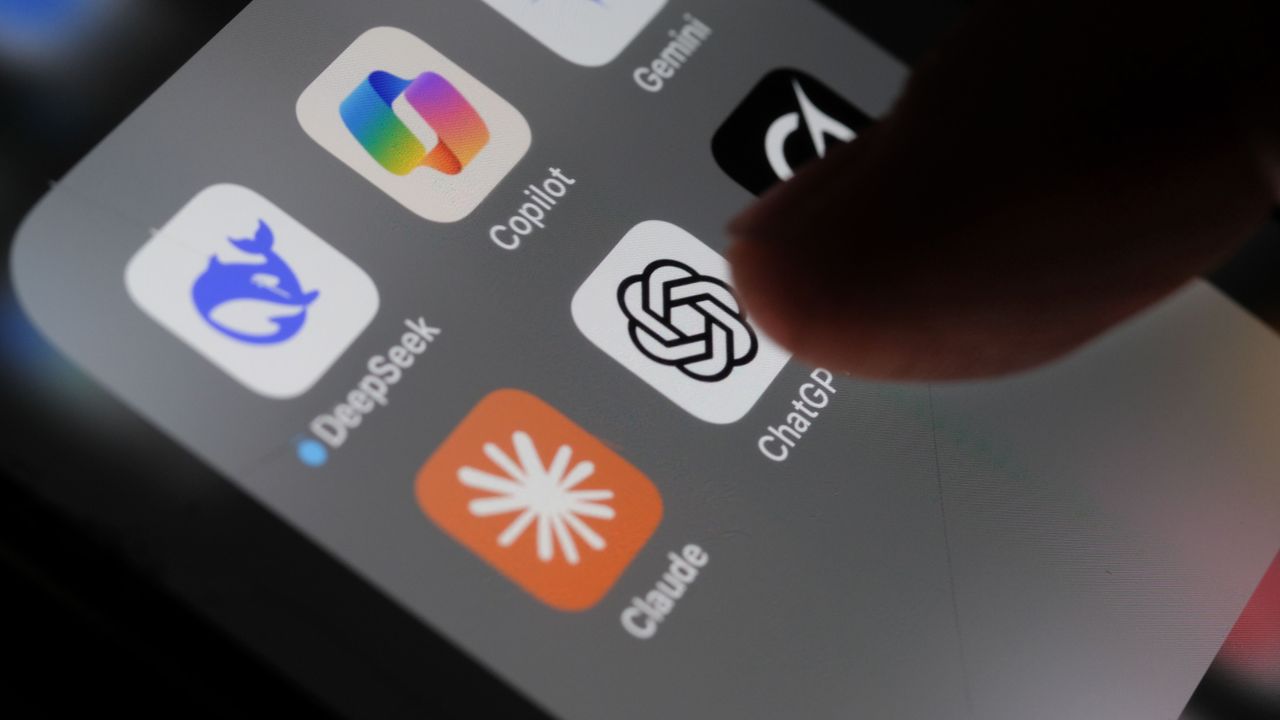

For fun, I put this question to four different AI models, and the responses are telling:

- Copilot: “The agentic web is an emerging layer of the internet where AI agents can reason and take actions on behalf of people or organizations, turning expressed intent into outcomes while preserving user choice, transparency, and control.”

- Gemini: “The agentic web is an evolution of the internet where autonomous AI agents – rather than just human users – interact with websites, APIs, and each other to perform complex tasks and make decisions on our behalf.”

- Perplexity: “The agentic web is an emerging phase of the internet where autonomous AI agents act as the primary interface, navigating, interpreting, and taking actions across websites and services on behalf of users with minimal human involvement.”

- Claude: “The agentic web is an evolution of the internet where AI agents autonomously navigate, interact with, and complete tasks across websites and services on behalf of users.”

I begin with how different models answer the question because it’s important to understand that each one is trained on different information, and outcomes will inevitably vary.

It’s worth noting that, given the same prompt to define the agentic web in one sentence, three of the four models focus on diminishing the human role in navigating the web. Only one, Copilot, makes a point of emphasizing human involvement by preserving user choice, transparency, and control.

Two of the four, Copilot and Perplexity, refer to the agentic web as a layer or phase rather than an outright evolution of the web.

This is likely where the sentiment divide on the agentic web stems from.

Some see it as a consent-driven layer designed to make life easier, while others see it as a behemoth that consumes content, critical thinking, and choice.

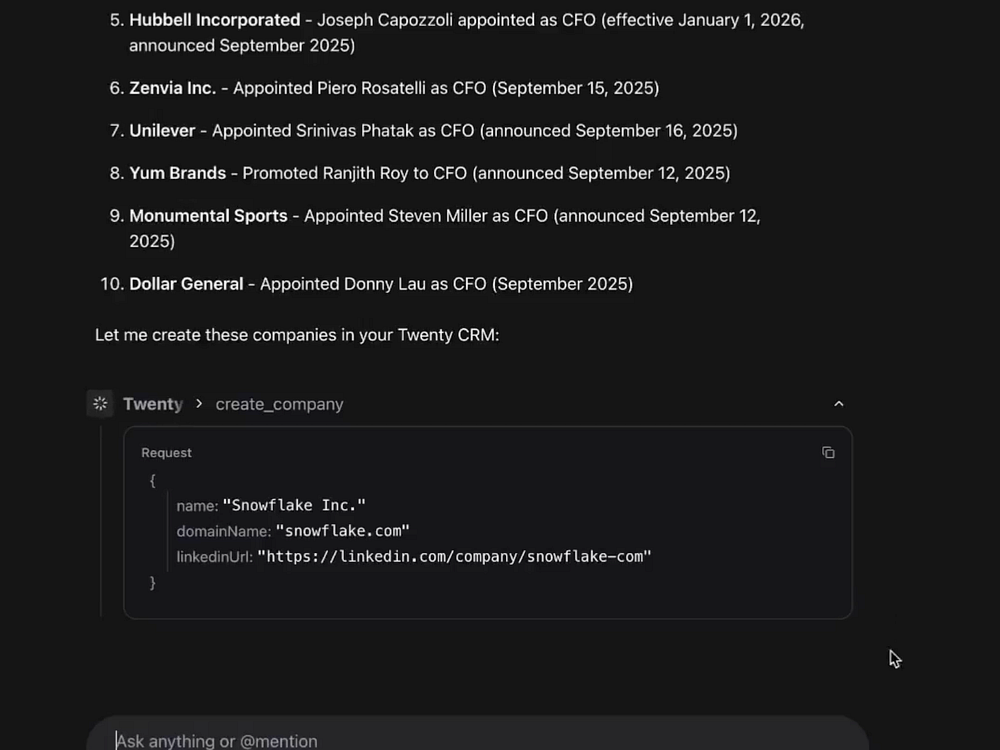

It’s noteworthy that one model, Gemini, calls out APIs as a means of communication in the agentic web. APIs are essentially defined interfaces that let one piece of software request data or actions from another, based on the task you are attempting to accomplish.

This matters because APIs will become increasingly relevant in the agentic web, as saved preferences must be organized in ways that are easily understood and acted upon.
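
To make the idea of "calling" an API concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `get_preferences` function, the field names, and the sample record are hypothetical and don't come from any real platform. It shows saved preferences organized so an agent can request only the fields a task needs.

```python
import json
from typing import Iterable

# Hypothetical saved preferences; real systems will store and protect these differently.
_SAVED_PREFERENCES = {
    "user-1": {"shoe_size": 10, "max_budget": 150, "payment_token": "tok_hypothetical"},
}


def get_preferences(user_id: str, fields: Iterable[str]) -> str:
    """Return only the requested fields as JSON; unknown users get an empty object."""
    record = _SAVED_PREFERENCES.get(user_id, {})
    shared = {name: record[name] for name in fields if name in record}
    return json.dumps(shared, sort_keys=True)


if __name__ == "__main__":
    # The caller names the fields it needs, so the payment token is never sent here.
    print(get_preferences("user-1", ["shoe_size", "max_budget"]))
```

The design choice worth noticing is that the caller asks for specific fields, so nothing is shared unless the task requires it.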

Defining the agentic web requires spending some time digging into two important protocols – ACP and UCP.

Dig deeper: AI agents in SEO: What you need to know

Agentic Commerce Protocol: Optimized for action inside conversational AI

The Agentic Commerce Protocol, or ACP, is designed around a specific moment: when a user has already expressed intent and wants the AI to act.

The core idea behind ACP is simple. If a user tells an AI assistant to buy something, the assistant should be able to do so safely, transparently, and without forcing the user to leave the conversation to complete the transaction.

ACP enables this by standardizing how an AI agent can:

- Access merchant product data.

- Confirm availability and price.

- Initiate checkout using delegated, revocable payment authorization.

The experience is intentionally streamlined. The user stays in the conversation. The AI handles the mechanics. The merchant still fulfills the order.
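
The three steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the ACP specification: the `PaymentAuthorization` class, the `checkout` function, and the sample catalog are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class PaymentAuthorization:
    """Hypothetical delegated authorization: capped, and revocable by the user."""
    spend_limit: float
    revoked: bool = False

    def revoke(self) -> None:
        self.revoked = True


# Hypothetical merchant catalog keyed by SKU; real product feeds will differ.
CATALOG = {
    "shoe-123": {"name": "Trail Runner", "price": 129.00, "in_stock": True},
    "shoe-456": {"name": "Road Racer", "price": 159.00, "in_stock": False},
}


def checkout(sku: str, auth: PaymentAuthorization, user_confirmed: bool) -> str:
    """Read product data, confirm availability and price, then attempt checkout."""
    product = CATALOG.get(sku)                  # 1. access merchant product data
    if product is None:
        return "rejected: unknown product"
    if not product["in_stock"]:                 # 2. confirm availability
        return "rejected: out of stock"
    if product["price"] > auth.spend_limit:     # 2. confirm price against the cap
        return "rejected: over spend limit"
    if auth.revoked:                            # 3. the authorization must still be live
        return "rejected: authorization revoked"
    if not user_confirmed:                      # 3. the user has the final say
        return "pending: awaiting user confirmation"
    return f"order placed: {product['name']} at ${product['price']:.2f}"


if __name__ == "__main__":
    auth = PaymentAuthorization(spend_limit=150.00)
    print(checkout("shoe-123", auth, user_confirmed=True))
    auth.revoke()
    print(checkout("shoe-123", auth, user_confirmed=True))
```

Once the user revokes the authorization, the same request is refused, which is the practical meaning of "delegated, revocable" in this sketch.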

This approach is tightly aligned with conversational AI platforms, particularly environments where users are already asking questions, refining preferences, and making decisions in real time. It prioritizes speed, clarity, and minimal friction.

Universal Commerce Protocol: Built for discovery, comparison, and lifecycle commerce

The Universal Commerce Protocol, or UCP, takes a broader view of agentic commerce.

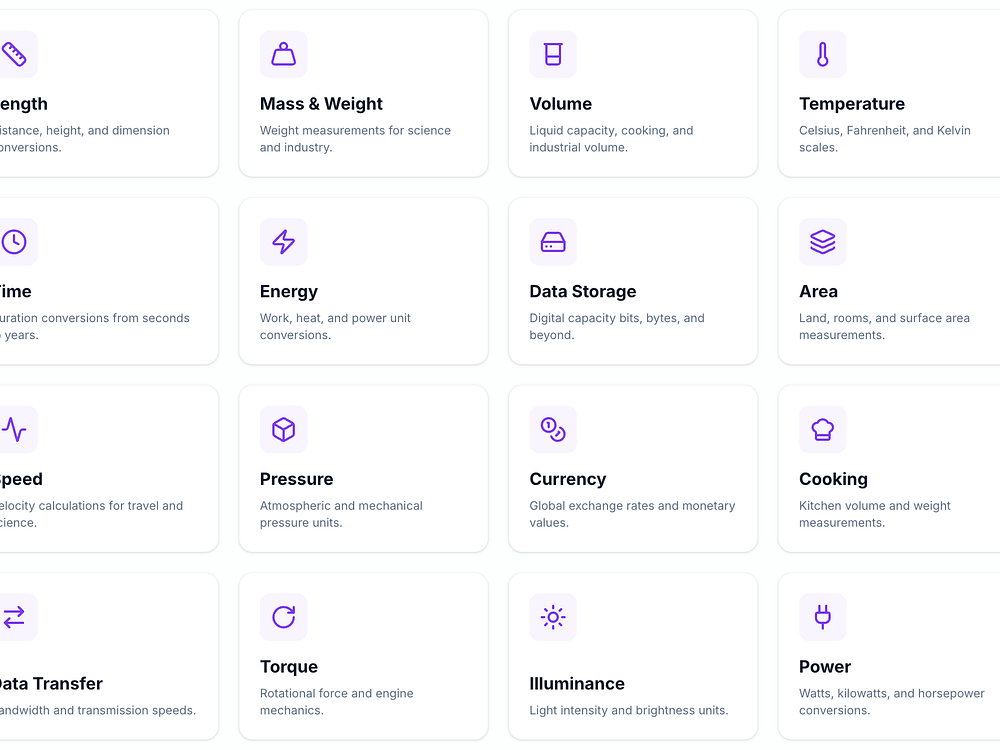

Rather than focusing solely on checkout, UCP is designed to support the entire shopping journey on the agentic web, from discovery through post-purchase interactions. It provides a common language that allows AI agents to interact with commerce systems across different platforms, surfaces, and payment providers.

That includes:

- Product discovery and comparison.

- Cart creation and updates.

- Checkout and payment handling.

- Order tracking and support workflows.

UCP is designed with scale and interoperability in mind. It assumes users will encounter agentic shopping experiences in many places, not just within a single assistant, and that merchants will want to participate without locking themselves into a single AI platform.
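
One way to picture a protocol that spans the whole journey is as a set of stages with allowed moves between them. The Python sketch below is only that, a picture: the stage names mirror the list above, but the `Stage` enum, the `TRANSITIONS` table, and the `ShoppingJourney` class are assumptions for illustration and are not taken from the UCP specification.

```python
from enum import Enum


class Stage(Enum):
    DISCOVERY = "discovery"
    CART = "cart"
    CHECKOUT = "checkout"
    POST_PURCHASE = "post_purchase"


# Allowed moves between stages in this illustration (an assumption, not UCP's rules).
TRANSITIONS = {
    Stage.DISCOVERY: {Stage.CART},
    Stage.CART: {Stage.DISCOVERY, Stage.CHECKOUT},
    Stage.CHECKOUT: {Stage.CART, Stage.POST_PURCHASE},
    Stage.POST_PURCHASE: set(),
}


class ShoppingJourney:
    """Tracks where a shopper is, regardless of which surface the agent runs on."""

    def __init__(self) -> None:
        self.stage = Stage.DISCOVERY
        self.history = [Stage.DISCOVERY]

    def advance(self, target: Stage) -> bool:
        """Move to the target stage if allowed; return False and stay put otherwise."""
        if target not in TRANSITIONS[self.stage]:
            return False
        self.stage = target
        self.history.append(target)
        return True


if __name__ == "__main__":
    journey = ShoppingJourney()
    print(journey.advance(Stage.CHECKOUT))  # skipping the cart is not allowed here
    print(journey.advance(Stage.CART))
    print(journey.stage)
```

Because the journey state lives in one shared structure rather than inside a single assistant, any agent that understands the stages could pick up where another left off.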

It’s tempting to frame ACP and UCP as competing solutions. In practice, they address different moments of the same user journey.

ACP is typically strongest when intent is explicit and the user wants something done now. UCP is generally strongest when intent is still forming and discovery, comparison, and context matter.

So what’s the agentic web? Is it an army of autonomous bots acting on past preferences to shape future needs? Is it the web as we know it, with fewer steps driven by consent-based signals? Or is it something else entirely?

The frustrating truth is that the agentic web is still being defined by human behavior, so there’s no clear answer yet. However, we have the power to determine what form the agentic web takes. To better understand how to participate, we now move to how the agentic web can be used, along with the pros and cons.

Dig deeper: The Great Decoupling of search and the birth of the agentic web

How can the agentic web be used?

Working from the common theme across all definitions, autonomous action, we can move to applications.

Elmer Boutin has written a thoughtful technical view on how schema will impact agentic web compatibility. Benjamin Wenner has explored how PPC management might evolve in a fully agentic web. Both are worth reading.

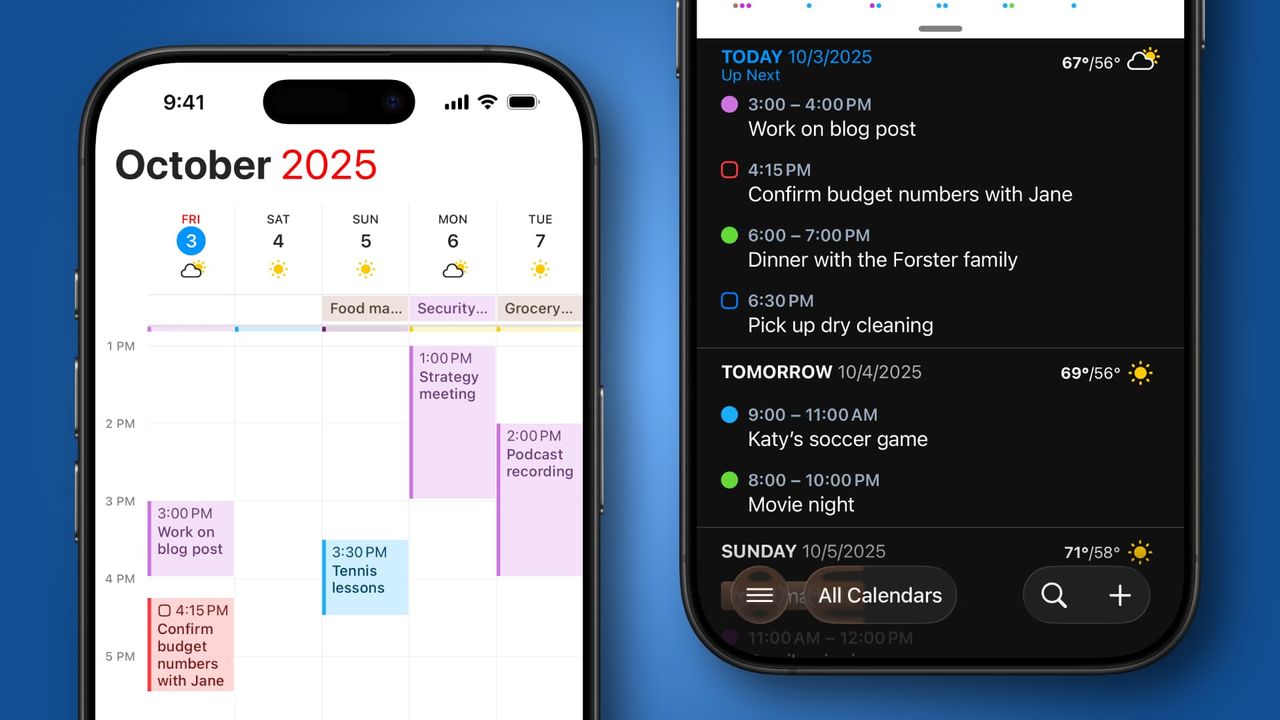

Here, I want to focus on consumer-facing applications of the agentic web and how to think about them in relation to the tasks you already perform today.

Here are five applications of the agentic web that are live today or in active development.

1. Intent-driven commerce

A user states a goal, such as “Find me the best running shoes under $150,” and an agent handles discovery, comparison, and checkout without requiring the user to manually browse multiple sites.

How it works

Rather than returning a list of links, the agent interprets user intent, including budget, category, and preferences.

It pulls structured product information from participating merchants, applies reasoning logic to compare options, and moves toward checkout only after explicit user confirmation.

The agent operates on approved product data and defined rules, with clear handoffs that keep the user in control.
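
The flow described above can be sketched as a filter, a ranking, and a confirmation gate. This is a simplified illustration: the `Product` fields, the sample data, and the ranking rule (highest rating first, then lowest price) are assumptions, and a real agent would weigh far more signals.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Product:
    name: str
    category: str
    price: float
    rating: float  # hypothetical 0-5 review score


# Hypothetical structured product data from participating merchants.
SAMPLE_PRODUCTS = [
    Product("Trail Runner", "running shoes", 129.0, 4.6),
    Product("Road Racer", "running shoes", 159.0, 4.8),
    Product("Daily Trainer", "running shoes", 99.0, 4.6),
    Product("Hiking Boot", "boots", 140.0, 4.9),
]


def shortlist(products: List[Product], category: str, budget: float) -> List[Product]:
    """Keep in-category products priced under the budget, best rated first."""
    matches = [p for p in products if p.category == category and p.price < budget]
    return sorted(matches, key=lambda p: (-p.rating, p.price))


def propose_purchase(
    products: List[Product], category: str, budget: float, confirmed: bool
) -> Optional[Product]:
    """Return the top pick only after the user has explicitly confirmed."""
    ranked = shortlist(products, category, budget)
    if not ranked or not confirmed:
        return None
    return ranked[0]


if __name__ == "__main__":
    for product in shortlist(SAMPLE_PRODUCTS, "running shoes", 150):
        print(product.name, product.price, product.rating)
```

Without confirmation, `propose_purchase` returns nothing, which keeps the handoff to checkout in the user's hands.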

Implications for consumers and professionals

Reducing decision fatigue without removing choice is a clear benefit for consumers. For brands, this turns discovery into high-intent engagement rather than anonymous clicks with unclear attribution.

Strategically, it shifts competition away from who shouts the loudest toward who provides the clearest and most trusted product signals to agents. These agents can act as trusted guides, offering consumers third-party verification that a merchant is as reliable as it claims to be.

2. Brand-owned AI assistants

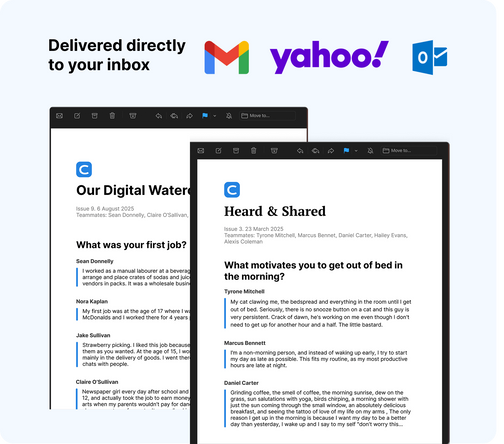

A brand deploys its own AI agent to answer questions, recommend products, and support customers using the brand’s data, tone, and business rules.

How it works

The agent uses first-party information, such as product catalogs, policies, and FAQs.

Guardrails define what it can say or do, limiting the unsupported inferences that could lead to hallucinations.

Responses are generated by retrieving and reasoning over approved context within the prompt.
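
Here is a deliberately small sketch of that pattern. A plain keyword lookup stands in for the retrieval and language-model steps a real assistant would use, and the approved passages, blocked topics, and fallback message are all hypothetical.

```python
import re

# Hypothetical first-party content the brand has approved for the assistant.
APPROVED_CONTEXT = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}

# Hypothetical guardrail: topics the assistant must never address.
BLOCKED_TOPICS = {"medical", "legal"}

FALLBACK = "I can't help with that here. Let me connect you with our support team."


def answer(question: str) -> str:
    """Answer only from approved context; otherwise hand off instead of guessing."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    if words & BLOCKED_TOPICS:
        return FALLBACK
    for topic, passage in APPROVED_CONTEXT.items():
        if topic in words:
            return passage
    return FALLBACK


if __name__ == "__main__":
    print(answer("What is your returns policy?"))
    print(answer("Do you price match?"))
```

The key behavior is the fallback: when nothing approved matches, the assistant hands off rather than improvising an answer.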

Implications for consumers and professionals

Customers get faster and more consistent responses. Brands retain voice, accountability, and ownership of the experience.

Strategically, this allows companies to participate in the agentic web without ceding their identity to a platform or intermediary. It also enables participation in global commerce without relying on native speakers to verify language.

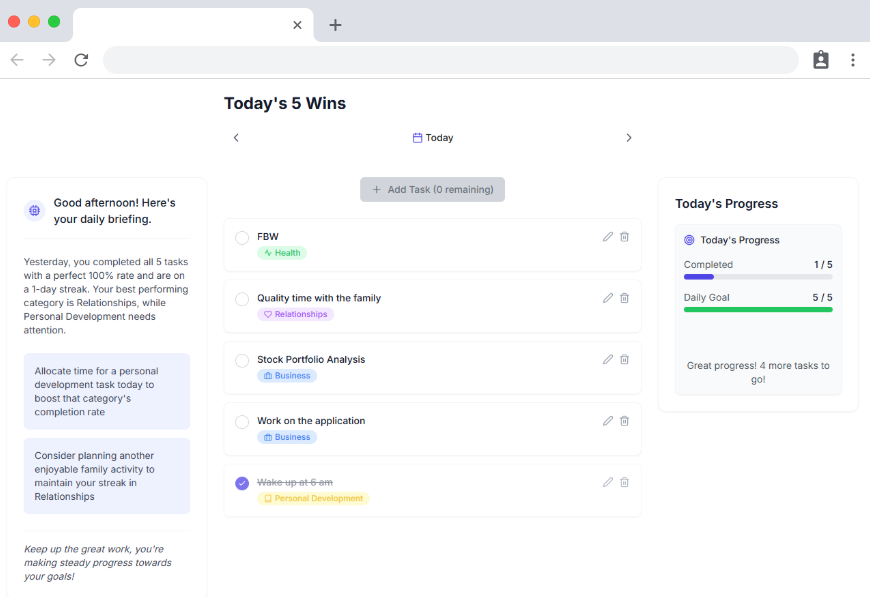

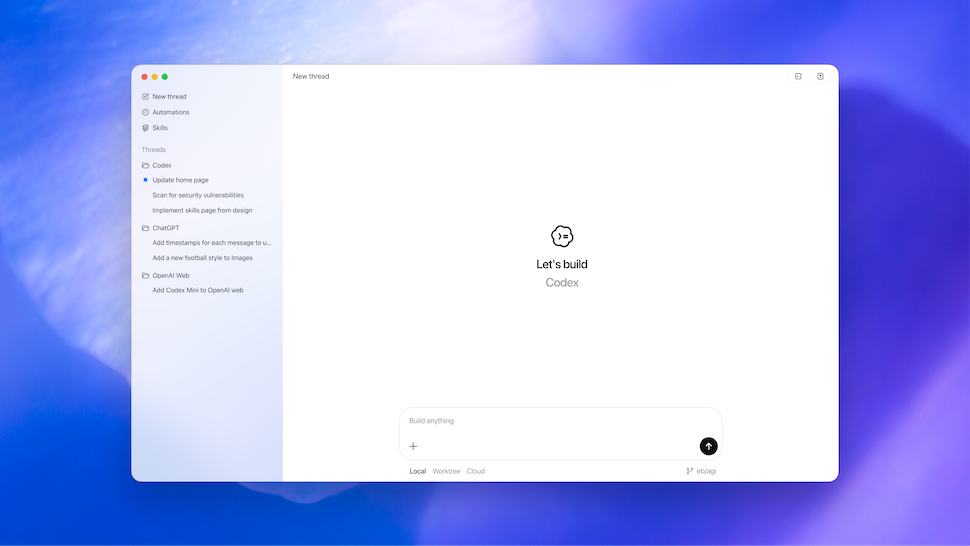

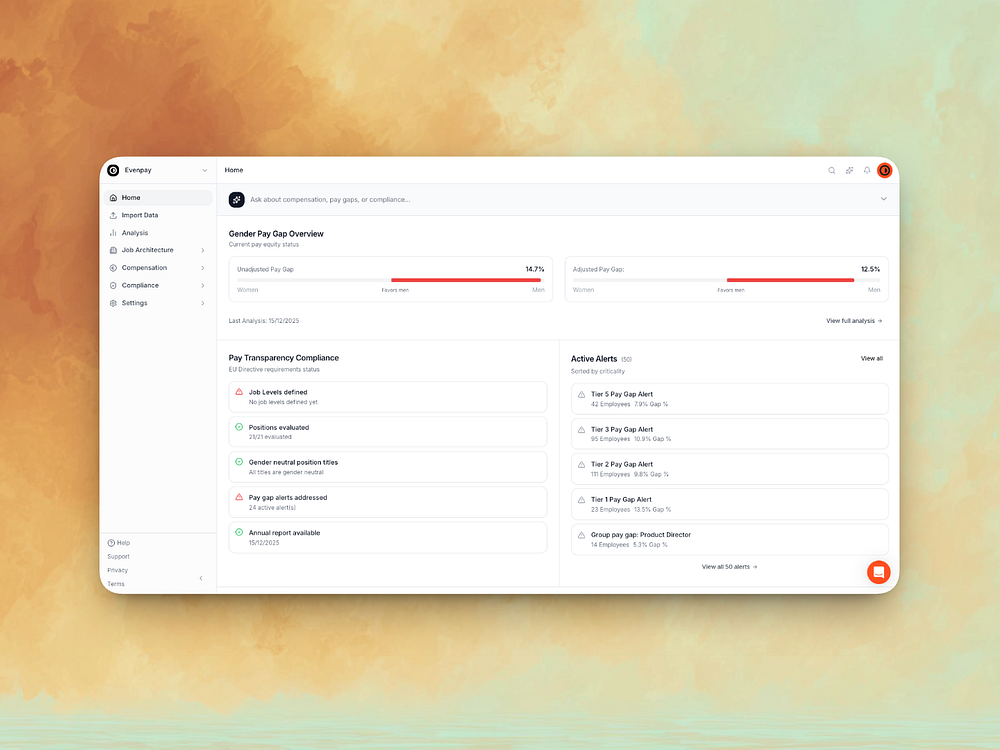

3. Autonomous task completion

Users delegate outcomes rather than steps, such as “Prepare a weekly performance summary” or “Reorder inventory when stock is low.”

How it works

The agent breaks the goal into subtasks, determines which systems or tools are needed, and executes actions sequentially. It pauses when permissions or human approvals are required.

Those permissions and approvals can be granted in bulk upfront or step by step. How this works ultimately depends on how the agent is built.
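
A minimal sketch of that loop, assuming a plan has already been broken into subtasks. The `Step` class, the `run_plan` function, and the inventory example are hypothetical, and the actions are stand-ins that return text rather than touching real systems.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass
class Step:
    name: str
    action: Callable[[], str]
    needs_approval: bool = False


def run_plan(steps: List[Step], approved: FrozenSet[str] = frozenset()) -> List[str]:
    """Execute steps in order; stop at the first gated step that lacks approval."""
    log = []
    for step in steps:
        if step.needs_approval and step.name not in approved:
            log.append(f"paused: {step.name} needs approval")
            break
        log.append(f"done: {step.name} -> {step.action()}")
    return log


# Hypothetical plan for "Reorder inventory when stock is low."
REORDER_PLAN = [
    Step("check_stock", lambda: "12 units left"),
    Step("draft_order", lambda: "order for 100 units drafted"),
    Step("submit_order", lambda: "order submitted", needs_approval=True),
]

if __name__ == "__main__":
    for line in run_plan(REORDER_PLAN):
        print(line)
```

Passing `approved=frozenset({"submit_order"})` at the start models bulk approval upfront, while leaving it empty models pausing for a step-by-step decision.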

Implications for consumers and professionals

We’re used to treating AI like interns, relying on micromanaged task lists and detailed prompts. As agents become more sophisticated, it becomes possible to treat them more like senior employees, oriented around outcomes and process improvement.

That makes it reasonable to ask an agent to identify action items in email or send templates in your voice when active engagement isn’t required. Human choice comes down to how much you delegate to agents versus how much you ask them to assist.

Dig deeper: The future of search visibility: What 6 SEO leaders predict for 2026


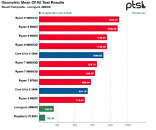

4. Agent-to-agent coordination and negotiation

Agents communicate with other agents on behalf of people or organizations, such as a buyer agent comparing offers with multiple seller agents.

How it works

Agents exchange structured information, including pricing, availability, and constraints.

They apply predefined rules, such as budgets or policies, and surface recommended outcomes for human approval.
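
As a rough sketch of the buyer side, the Python below filters seller offers against predefined rules and surfaces one recommendation for a human to approve. The `Offer` fields, the seller names, and the tie-breaking rule (lowest price, then fastest delivery) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Offer:
    seller: str
    unit_price: float
    available_units: int
    delivery_days: int


def recommend(
    offers: List[Offer], budget_per_unit: float, units_needed: int, max_delivery_days: int
) -> Optional[Offer]:
    """Apply the buyer's rules, then surface the cheapest eligible offer, if any."""
    eligible = [
        o for o in offers
        if o.unit_price <= budget_per_unit
        and o.available_units >= units_needed
        and o.delivery_days <= max_delivery_days
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda o: (o.unit_price, o.delivery_days))


if __name__ == "__main__":
    # Hypothetical structured offers returned by three seller agents.
    offers = [
        Offer("Seller A", 9.50, 500, 7),
        Offer("Seller B", 8.75, 200, 3),
        Offer("Seller C", 9.00, 1000, 5),
    ]
    pick = recommend(offers, budget_per_unit=9.25, units_needed=300, max_delivery_days=6)
    print(pick)  # a recommendation for human approval, not a completed purchase
```

The function returns a recommendation rather than placing an order, which mirrors the approval step described above.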

Implications for consumers and professionals

Consumers may see faster and more transparent comparisons without needing to manually negotiate or cross-check options.

For professionals, this introduces new efficiencies in areas like procurement, media buying, or logistics, where structured negotiation can occur at scale while humans retain oversight.

5. Continuous optimization over time

Agents don’t just act once. They improve as they observe outcomes.

How it works

After each action, the agent evaluates what happened, such as engagement, conversion, or satisfaction. It updates its internal weighting and applies those learnings to future decisions.
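
One simple way to model "updating internal weighting" is an exponentially weighted moving average, where each new outcome nudges a score toward the observed result. This is one possible technique chosen for illustration, not a description of how any particular agent works, and the `PreferenceLearner` class, option names, and reward values are hypothetical.

```python
from typing import Dict, Iterable


class PreferenceLearner:
    """Keeps a running score per option and favors whichever has performed best."""

    def __init__(self, options: Iterable[str], learning_rate: float = 0.5) -> None:
        self.learning_rate = learning_rate
        self.weights: Dict[str, float] = {option: 0.0 for option in options}

    def record_outcome(self, option: str, reward: float) -> None:
        """Move the option's weight part of the way toward the observed reward."""
        old = self.weights[option]
        self.weights[option] = old + self.learning_rate * (reward - old)

    def best_option(self) -> str:
        """Return the option with the highest current weight."""
        return max(self.weights, key=self.weights.get)


if __name__ == "__main__":
    learner = PreferenceLearner(["email", "sms"])
    learner.record_outcome("email", 1.0)  # e.g. the user engaged with the email
    learner.record_outcome("sms", 0.2)    # e.g. the text message was mostly ignored
    print(learner.best_option())
```

A higher `learning_rate` makes the agent react faster to recent outcomes, at the cost of being swayed more by a single unusual result.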

Implications for consumers and professionals

Consumers experience increasingly relevant interactions over time without repeatedly restating preferences.

Professionals gain systems that improve continuously, shifting optimization from one-off efforts to long-term, adaptive performance.

What are the pros and cons of the agentic web?

Life is a series of choices, and leaning into or away from the agentic web comes with clear pros and cons.

Pros of leaning into the agentic web

The strongest argument for leaning into the agentic web is behavioral. People have already been trained to prioritize convenience over process.

Saved payment methods, password managers, autofill, and one-click checkout normalized the idea that software can complete tasks on your behalf once trust is established.

Agentic experiences follow the same trajectory. Rather than requiring users to manually navigate systems, they interpret intent and reduce the number of steps needed to reach an outcome.

Cons of leaning into the agentic web

Many brands will need to rethink how their content, data, and experiences are structured so they can be interpreted by both automated systems and humans. What works for visual scanning or brand storytelling doesn’t always map cleanly to machine-readable signals.

There’s also a legitimate risk of overoptimization. Designing primarily for AI ingestion can unintentionally degrade human usability or accessibility if not handled carefully.

Dig deeper: The enterprise blueprint for winning visibility in AI search

Pros of leaning away from the agentic web

Choosing to lean away from the agentic web can offer clarity of stance. There’s a visible segment of users skeptical of AI-mediated experiences, whether due to privacy concerns, automation fatigue, or a loss of human control.

Aligning with that perspective can strengthen trust with audiences who value deliberate, hands-on interaction.

Cons of leaning away from the agentic web

If agentic interfaces become a primary way people discover information, compare options, or complete tasks, opting out entirely may limit visibility or participation.

The longer an organization waits to adapt, the more expensive and disruptive that transition can become.

What’s notable across the ecosystem is that agentic systems are increasingly designed to sit on top of existing infrastructure rather than replace it outright.

Avoiding engagement with these patterns may not be sustainable over time. If interaction norms shift and systems aren’t prepared, the combination of technical debt and lost opportunity may be harder to overcome later.

Where the agentic web stands today

The agentic web is still taking form, shaped largely by how people choose to use it. Some organizations are already applying agentic systems to reduce friction and improve outcomes. Others are waiting for stronger trust signals and clearer consent models.

Either approach is valid. What matters is understanding how agentic systems work, where they add value, and how emerging protocols are shaping participation. That understanding is the foundation for deciding when, where, and how to engage with the agentic web.
